Outcome-based testing: The end of shallow AI validation

Here’s the brutal truth about AI deployment: Sounding helpful isn’t the same as being helpful. Your AI can respond with empathy, structure, and polish — and still fail entirely.

Because what matters isn’t how well the AI agent speaks, but whether it gets the job done.

Yet most AI testing stops at semantic similarity: checking whether the AI’s response sounds close enough to a reference answer. That’s better than nothing, but it misses the point. An AI can sound correct, pass the test, and still fail to solve the customer’s problem.

Sendbird’s Outcome-Based Testing changes that. It goes deeper, measuring whether your AI understood the user’s intent, executed the right logic, and delivered the actual result. It’s how you move from “the bot sounded fine” to “the issue is resolved.”

The problem it solves

Traditional AI testing asks:

“Did the AI say something reasonable?”

But what you really need to know is:

“Did the AI actually help the user accomplish their goal?”

Let’s take a simple example:

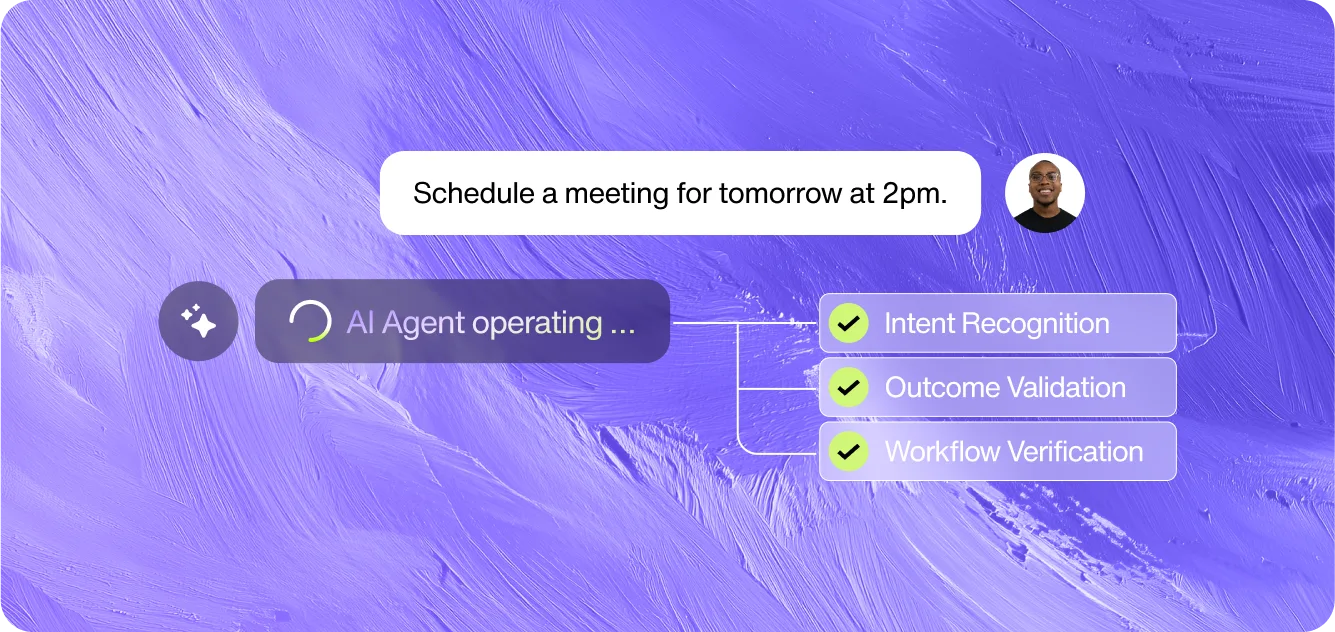

User intent: “Schedule a meeting for tomorrow at 2pm”

Traditional test: AI responds helpfully and discusses scheduling

Outcome-based test: AI actually creates the calendar event

In traditional testing, the AI passes if it talks about scheduling. But if it doesn’t actually create the calendar event, the task is still undone — and no one catches it.

Outcome-based testing doesn’t just evaluate how the AI sounds. It verifies whether the right action was taken, the right workflows were triggered, and the user’s goal was achieved.
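To make the scheduling example concrete, here is a minimal Python sketch of the two kinds of test. Everything in it is a hypothetical stand-in written for illustration (`FakeCalendar`, the two agents, and both test functions); it is not Sendbird’s API, and the keyword check is only a crude substitute for a real semantic-similarity score.

```python
from dataclasses import dataclass, field


@dataclass
class FakeCalendar:
    """Hypothetical in-memory stand-in for a real calendar integration."""
    events: list = field(default_factory=list)

    def create_event(self, title: str, day: str, time: str) -> None:
        self.events.append({"title": title, "day": day, "time": time})


def talkative_agent(message: str, calendar: FakeCalendar) -> str:
    """Hypothetical agent that discusses scheduling but never acts."""
    return "Happy to help! I can schedule your meeting for tomorrow at 2pm."


def acting_agent(message: str, calendar: FakeCalendar) -> str:
    """Hypothetical agent that actually creates the event."""
    calendar.create_event("Meeting", day="tomorrow", time="14:00")
    return "Done. Your meeting is booked for tomorrow at 2pm."


def response_based_test(reply: str) -> bool:
    # Surface check: does the reply talk about the right thing?
    # (A keyword match standing in for a semantic-similarity score.)
    text = reply.lower()
    return "meeting" in text and "2pm" in text


def outcome_based_test(calendar: FakeCalendar) -> bool:
    # Outcome check: does the expected event exist after the conversation?
    expected = {"title": "Meeting", "day": "tomorrow", "time": "14:00"}
    return expected in calendar.events


def run(agent) -> tuple:
    """Run one agent against a fresh calendar and apply both tests."""
    calendar = FakeCalendar()
    reply = agent("Schedule a meeting for tomorrow at 2pm", calendar)
    return response_based_test(reply), outcome_based_test(calendar)
```

Calling `run(talkative_agent)` passes the response-based test and fails the outcome-based one, while `run(acting_agent)` passes both. Only the second test separates the agent that booked the meeting from the one that merely talked about it.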

How outcome-based testing works

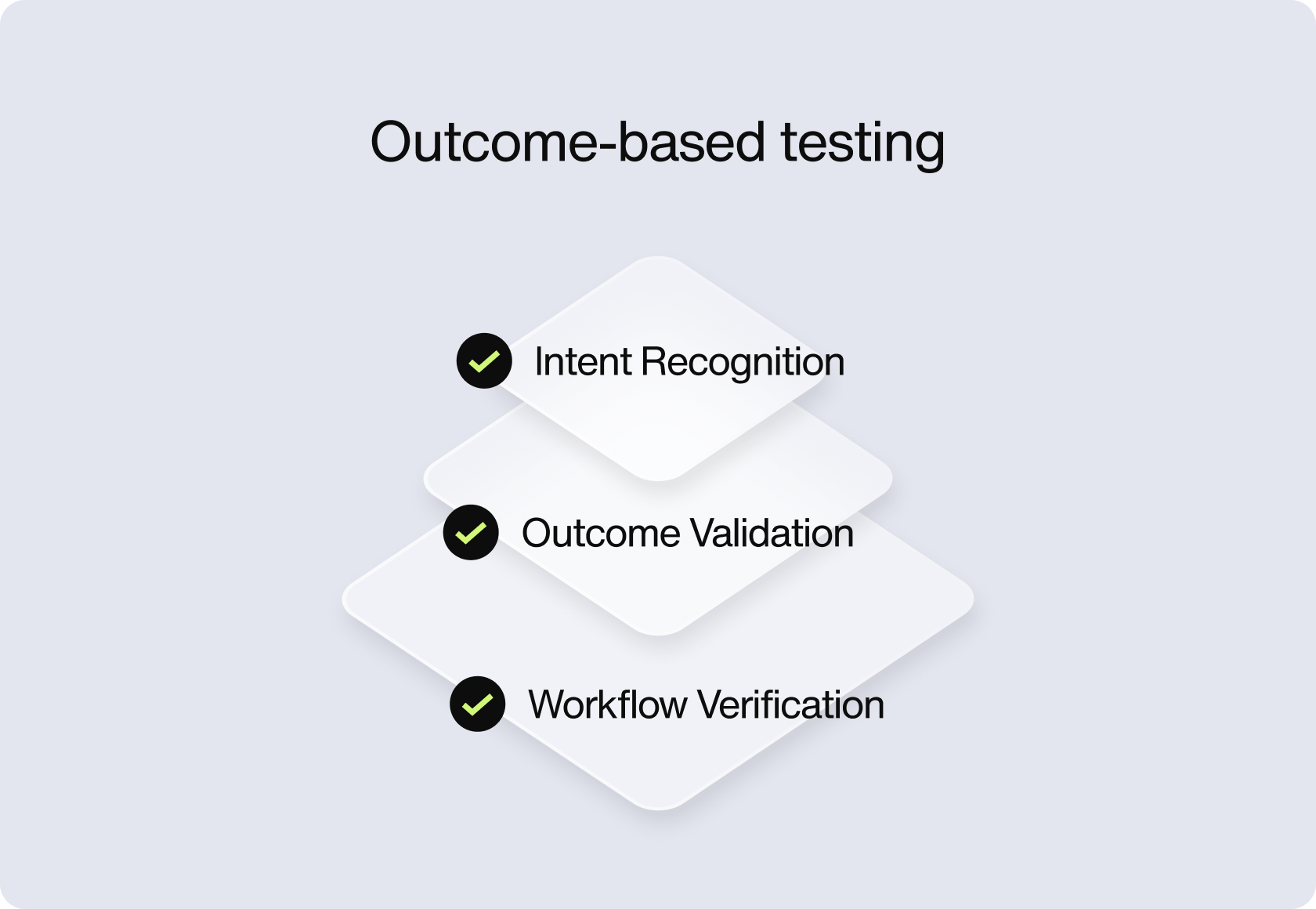

- Intent recognition
  - Understands the real goal behind the user messages
  - Can be defined manually or extracted via LLM
  - Goes beyond keywords to capture actual intent
- Outcome validation
  - Compares the AI’s result to what should’ve happened
  - Tracks if the correct outcome was delivered
  - Focuses on what the AI did, not what it said
- Workflow verification
  - Actionbook check: Was the right business logic triggered?
  - Tool check: Were the correct integrations used?
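The three checks above can be sketched as one evaluation over a recorded run. This is a rough illustration under stated assumptions, not Sendbird’s implementation: `OutcomeCase`, `RunTrace`, `evaluate`, and every field name are hypothetical, and the sketch assumes the agent’s recognized intent, final outcome, triggered Actionbook, and tool calls have already been captured somewhere.

```python
from dataclasses import dataclass


@dataclass
class OutcomeCase:
    """Hypothetical test definition: what should happen for one scenario."""
    expected_intent: str
    expected_outcome: dict
    expected_actionbook: str
    expected_tools: set


@dataclass
class RunTrace:
    """Hypothetical record of what the agent did during one conversation."""
    recognized_intent: str
    outcome: dict
    actionbook: str
    tools_called: list


def evaluate(case: OutcomeCase, trace: RunTrace) -> dict:
    checks = {
        # Intent recognition: did the agent identify the user's real goal?
        "intent": trace.recognized_intent == case.expected_intent,
        # Outcome validation: does the result match what should have happened?
        "outcome": trace.outcome == case.expected_outcome,
        # Workflow verification, Actionbook check: right business logic?
        "actionbook": trace.actionbook == case.expected_actionbook,
        # Workflow verification, tool check: were all required tools called?
        "tools": case.expected_tools <= set(trace.tools_called),
    }
    checks["passed"] = all(checks.values())
    return checks


case = OutcomeCase(
    expected_intent="schedule_meeting",
    expected_outcome={"event_created": True, "time": "14:00"},
    expected_actionbook="meeting_scheduling",
    expected_tools={"calendar.create_event"},
)

# The agent understood the request and picked the right workflow,
# but never called the calendar tool, so no event was created.
silent_failure = RunTrace(
    recognized_intent="schedule_meeting",
    outcome={"event_created": False, "time": "14:00"},
    actionbook="meeting_scheduling",
    tools_called=[],
)

result = evaluate(case, silent_failure)
```

Here `result["intent"]` and `result["actionbook"]` are true, but `result["outcome"]`, `result["tools"]`, and `result["passed"]` are false. Reporting each check separately shows where a run broke down, which a single pass/fail verdict on the response text would not.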

The business impact

Outcome-based testing turns AI quality from a guessing game into a measurable system. Instead of relying on surface-level responses, you validate whether the AI understood the user, triggered the right workflows, and delivered the actual result.

That means fewer silent failures, fewer escalations, and fewer customers left hanging.

For enterprise teams, it’s more than improved QA. It’s how you de-risk AI in production. You can now test your AI like any critical system: with clear goals, real metrics, and accountability.

Advanced outcome-based testing is now available. Contact us to learn more.