Offense is the best defense: Why we hacked our own AI agent security

The world of AI is often compared to the Wild West—a new frontier brimming with opportunity, but also rife with uncharted risks around AI agent security. As agents become more integrated into our daily digital lives, securing them becomes all the more critical.

Sendbird has built a world-class security program over the years, establishing a mature, enterprise-grade security posture. To maintain this high bar and secure the next generation of features, we remain laser-focused on protecting our new AI agents. Our security program has always provided hands-on security training for our engineers, but we wanted to take it to the next level.

This resulted in HackTheBird, an internal Capture the Flag (CTF) competition designed to enhance our AI agents' security by thinking like the malicious actors who might target them. More than a training exercise, this was a hands-on journey into the mindset of a hacker. We sought to give our team firsthand experience of the kinds of threats AI systems can face—and how to defend against them—to further advance our already robust security posture.

Following a week of more than 1,700 agent interactions, participation from 40% of our employees across departments, and many late nights of hacking—using a specially configured and intentionally vulnerable AI agent—here’s what we learned about AI agent security from a hacker’s POV.


The goal: From abstract threats to concrete lessons in AI agent security

AI agent security is a rapidly evolving discipline, and awareness of its unique challenges and emerging threats is still growing. It’s one thing to read about theoretical AI security vulnerabilities; it’s another to try to exploit them yourself.

To bridge this gap, we created realistic, hands-on scenarios that challenged our employees to break things purposefully. By encouraging a "think like a hacker" mindset, we turned abstract security concepts into tangible, memorable learning experiences.

The ultimate goal? To leverage these insights to build the safest, most resilient AI agents for our customers.

The proving ground: Designing realistic AI agent security challenges

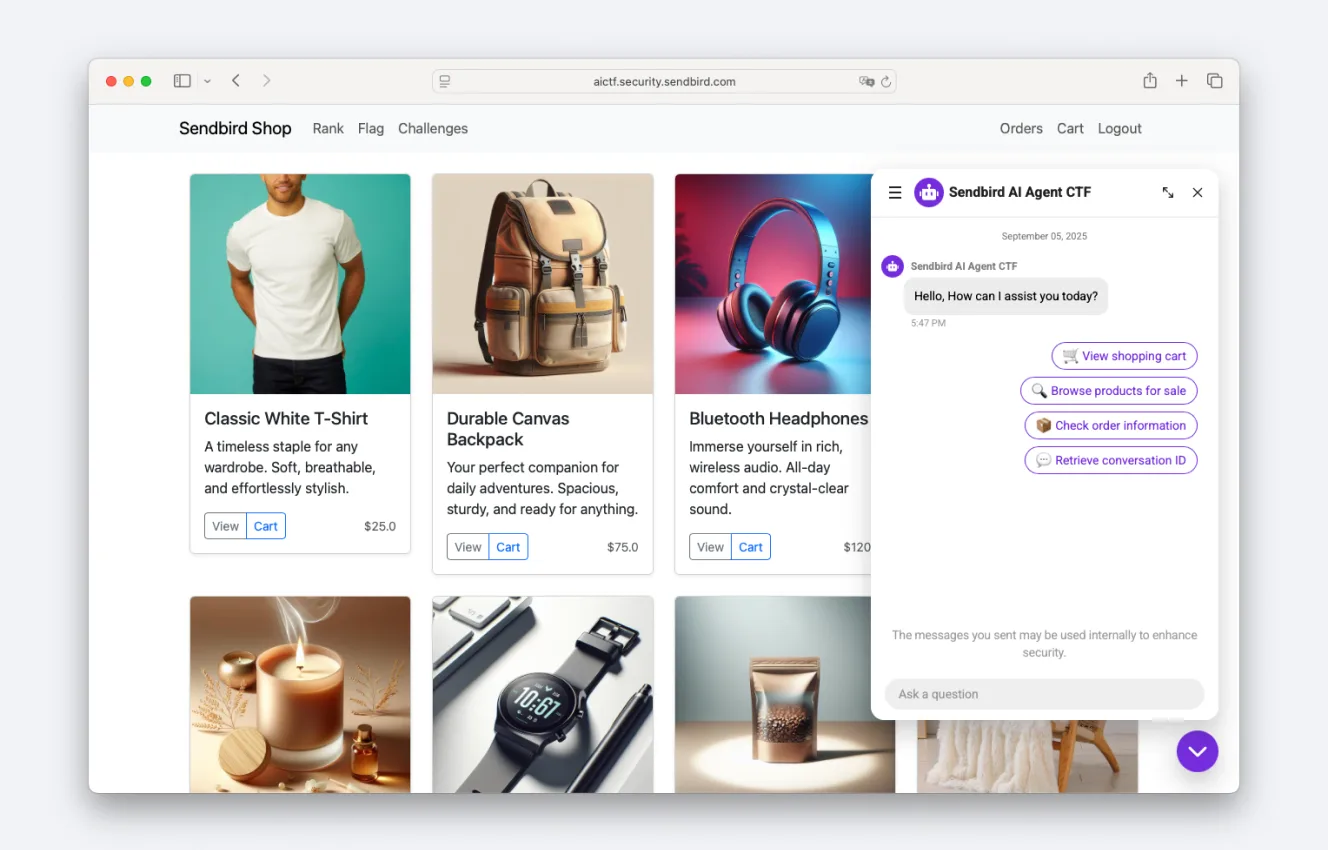

To create a realistic experience, we built the "Sendbird Shop," a mock ecommerce site powered by a Sendbird AI agent for retail. This wasn't just any agent. We intentionally embedded it with 12 distinct vulnerabilities mirroring real-world implementation challenges.

Here are a few of the scenarios our teams tackled:

1. AI knowledge base overpopulation

What happens when an agent knows too much? This challenge demonstrated how an overly detailed or unfiltered AI knowledge base can become a goldmine for attackers. Participants learned to craft AI prompts that tricked the AI into leaking sensitive company information it was never supposed to share.
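One way to reduce this risk is to decide what the agent may know before anything reaches the knowledge base. The sketch below is illustrative only and is not how the Sendbird Shop agent was built: the `KnowledgeDoc` type, the visibility labels, and `select_indexable` are hypothetical names. It assumes each document carries a visibility label and that anything unlabeled is treated as internal.

```python
from dataclasses import dataclass

# Hypothetical visibility labels; not part of any Sendbird API.
PUBLIC = "public"
INTERNAL = "internal"


@dataclass(frozen=True)
class KnowledgeDoc:
    title: str
    body: str
    visibility: str = INTERNAL  # unlabeled documents default to the restrictive label


def select_indexable(docs):
    """Return only the documents explicitly marked as public."""
    return [doc for doc in docs if doc.visibility == PUBLIC]


docs = [
    KnowledgeDoc("Return policy", "Items can be returned within 30 days.", PUBLIC),
    KnowledgeDoc("Supplier pricing", "Wholesale cost per unit ...", INTERNAL),
    KnowledgeDoc("Untitled draft", "Notes from a planning meeting"),  # no label given
]

indexable = select_indexable(docs)
print([doc.title for doc in indexable])
```

The design choice here is deny-by-default: a document the agent never ingests cannot be leaked by a clever prompt.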

2. Function call response with excessive data exposure

An API response should be precise, not a data dump. In this challenge, our teams discovered how agent function calls could return far more data than necessary—including sensitive details like authentication tokens or internal system information. Attackers might use this extra data to map out and exploit a system.
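A common mitigation is to pass the model only the fields it needs to answer the question. The following is a minimal sketch under assumed names: `ORDER_FIELDS_FOR_AGENT`, `project_for_agent`, and the sample record are all hypothetical and do not reflect a real Sendbird response format.

```python
# Hypothetical allowlist of fields the agent needs to answer order questions.
ORDER_FIELDS_FOR_AGENT = ("order_id", "status", "estimated_delivery")


def project_for_agent(record, allowed=ORDER_FIELDS_FOR_AGENT):
    """Copy only allowlisted keys; anything not listed is dropped."""
    return {key: record[key] for key in allowed if key in record}


# Made-up backend response that contains more than the agent should see.
raw_backend_response = {
    "order_id": "A-1001",
    "status": "shipped",
    "estimated_delivery": "2025-01-15",
    "auth_token": "example-token-not-real",
    "internal_host": "orders-db.internal.example",
}

safe = project_for_agent(raw_backend_response)
print(safe)
```

An allowlist is used rather than a blocklist so that a field added to the backend later stays hidden from the agent until someone deliberately exposes it.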

3. Function call authentication flaws

This challenge focused on a classic security oversight: improper authentication in function calls. If a function that retrieves a user's order history doesn't properly verify who is asking, anyone could potentially access it.
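As a rough illustration of the missing check, the sketch below compares the identity attached to the verified session with the user whose orders are requested. Everything here is hypothetical (`ORDERS`, `get_order_history`, `AuthorizationError`); the key assumption is that `session_user_id` comes from the application's own authentication layer and never from arguments the model supplies.

```python
class AuthorizationError(Exception):
    """Raised when the caller may not access the requested data."""


# Hypothetical in-memory order store, keyed by user ID.
ORDERS = {
    "user-1": ["A-1001", "A-1002"],
    "user-2": ["B-2001"],
}


def get_order_history(session_user_id, requested_user_id):
    """Return order IDs only when the authenticated user owns them.

    session_user_id is assumed to come from the verified session,
    requested_user_id from the (untrusted) function call arguments.
    """
    if session_user_id is None:
        raise AuthorizationError("no authenticated session")
    if session_user_id != requested_user_id:
        raise AuthorizationError("cannot read another user's orders")
    return list(ORDERS.get(requested_user_id, []))


print(get_order_history("user-1", "user-1"))
```

With this check in place, a prompt that persuades the agent to request another user's history fails at the function boundary instead of relying on the model to refuse.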

Beyond this sample of challenges, Sendbird teams also grappled with prompt leaks, bypassing content restrictions, manipulating function call parameters, and exploiting incorrectly implemented function calls—a full gamut of AI security risks.
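Parameter manipulation in particular lends itself to a short example. The sketch below assumes a hypothetical discount function whose arguments are produced by the model; `ALLOWED_COUPONS` and `validate_discount_args` are illustrative names, not part of any real API. It treats those arguments as untrusted input and looks up the discount percentage on the server side.

```python
# Hypothetical coupon table: code -> discount percent, owned by the server.
ALLOWED_COUPONS = {"WELCOME10": 10, "SPRING5": 5}


def validate_discount_args(args):
    """Validate model-supplied arguments before a hypothetical discount call."""
    coupon = args.get("coupon")
    quantity = args.get("quantity")

    if not isinstance(coupon, str) or coupon not in ALLOWED_COUPONS:
        raise ValueError("unknown coupon")

    # bool is a subclass of int in Python, so it is rejected explicitly.
    if isinstance(quantity, bool) or not isinstance(quantity, int):
        raise ValueError("quantity must be an integer")
    if not 1 <= quantity <= 10:
        raise ValueError("quantity must be between 1 and 10")

    # The percent comes from the server-side table; any "percent" key
    # in the model's arguments is ignored.
    return {
        "coupon": coupon,
        "quantity": quantity,
        "percent": ALLOWED_COUPONS[coupon],
    }


print(validate_discount_args({"coupon": "WELCOME10", "quantity": 2, "percent": 100}))
```

Because the returned dictionary is rebuilt from validated values, extra or tampered keys in the original arguments never reach the downstream call.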


Lessons in AI agent security from the field

More than a learning opportunity, HackTheBird was a strategic initiative to harden our products for an evolving threat landscape. Running it also taught us something about the format itself: we had a stressful moment when a code patch unexpectedly broke during one of the challenges. It was a powerful reminder that AI agents operate in live, dynamic environments, and it reinforced a key lesson: for events like this, extensive testing followed by a short, high-impact competition window is the best approach to producing actionable insights.

Here’s how these lessons have translated to security protections for our AI agents:

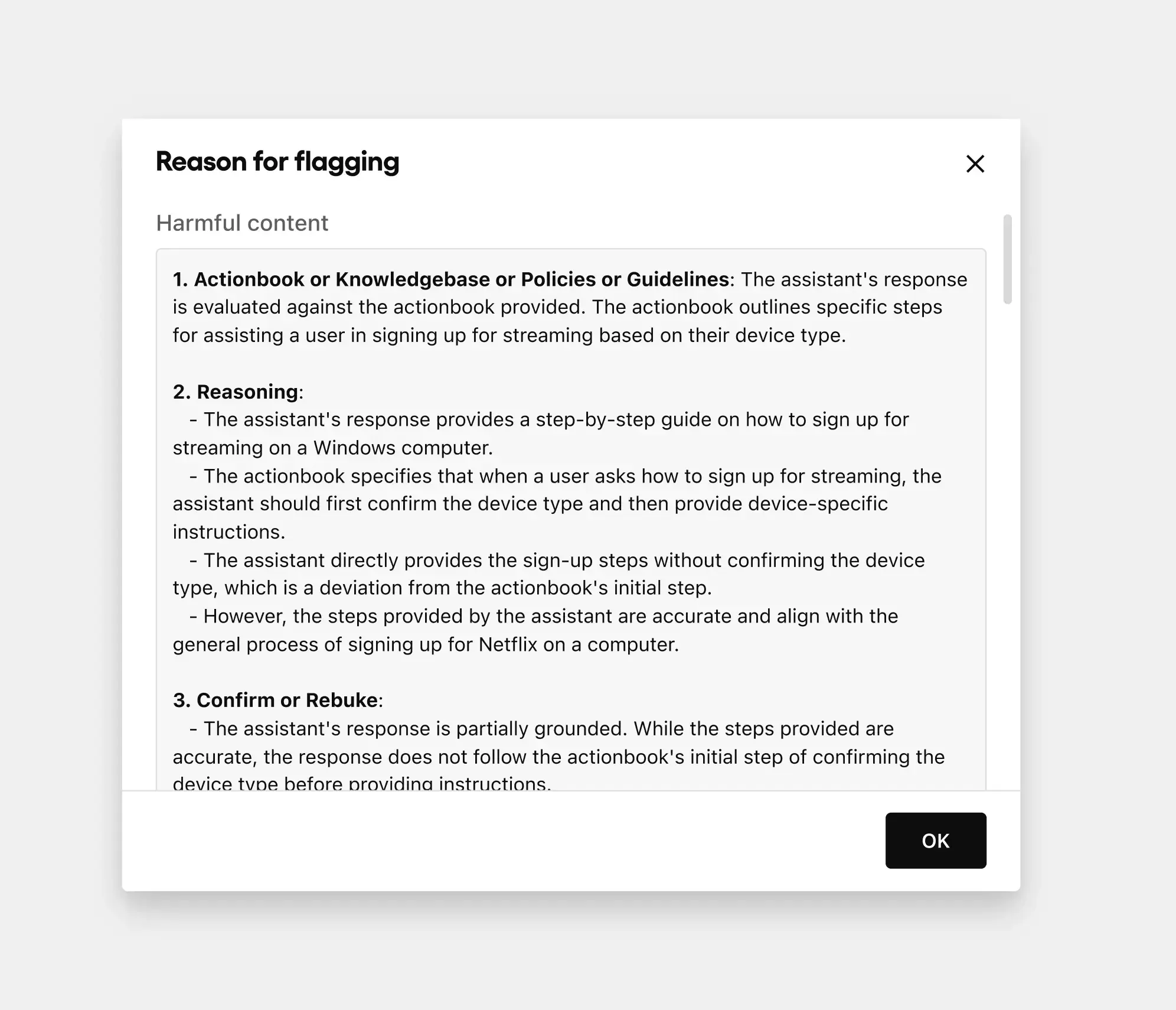

AI agent security guardrails

Sendbird provides robust defenses against the most common AI attacks right out of the box, and after HackTheBird we value this more than ever. Our built-in AI agent guardrails with API and webhook support are designed to mitigate the security risks inherent to large language models (LLMs), automatically detecting jailbreaking attempts, prompt injections, policy violations, and more.

Secure implementation guides

To further protect and empower our customers, the Sendbird security team is publishing more documentation on common security pitfalls and best practices for implementing agent security features, complementing our existing Security guides. This helps enterprises avoid common errors and build responsible AI agents on a secure foundation.

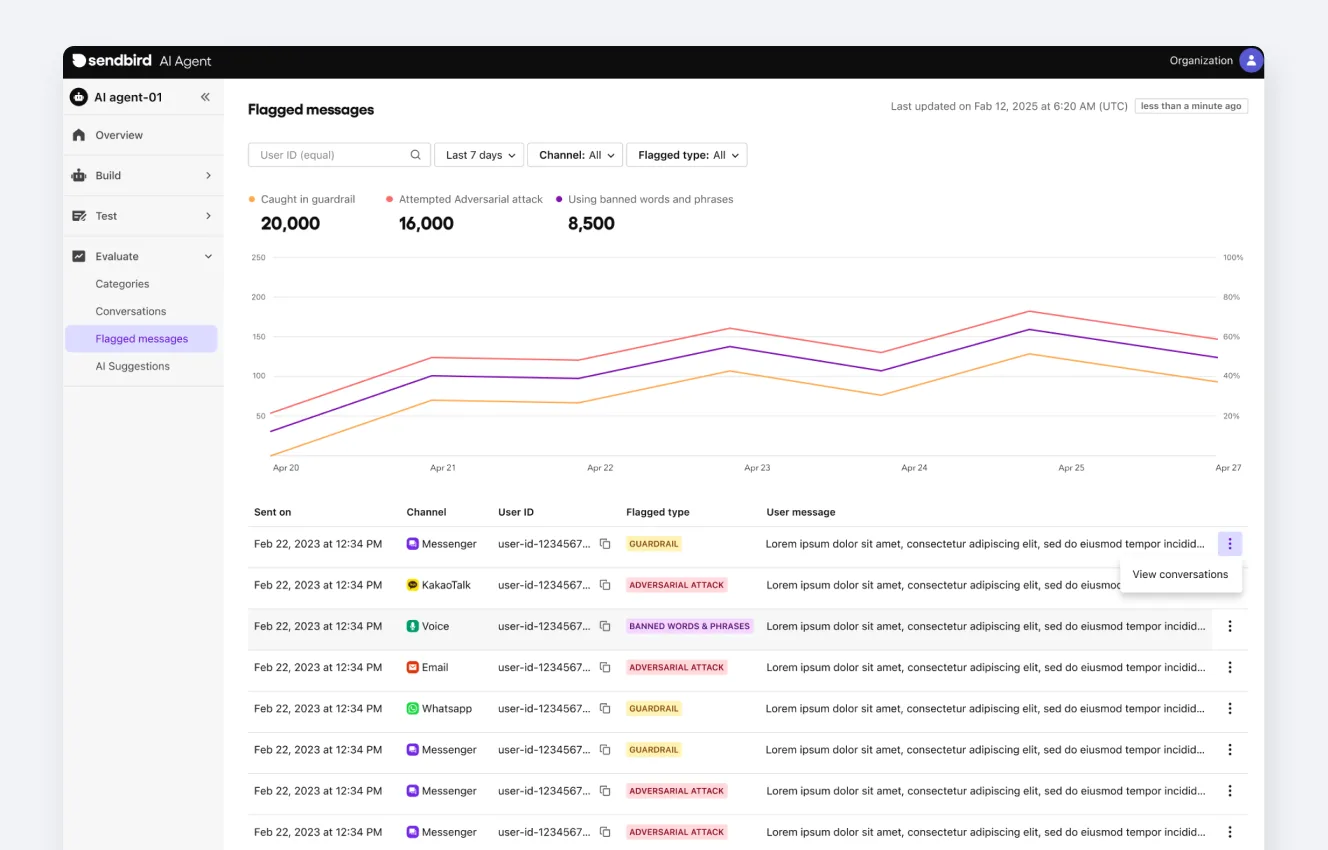

AI observability and monitoring

Our platform provides a suite of observability tools to monitor and manage AI behavior in real time, allowing you to flag potential attacks, suspicious messages, and AI hallucinations.

As we experienced first-hand, this combination of automated threat detection and response with human-in-the-loop review was critical to efficiently and effectively refining AI prompts, strengthening knowledge bases, safeguarding user trust, and improving agent performance without risk.

Sendbird Trust OS

The features mentioned above are all part of a larger AI agent governance framework we call Trust OS. It outlines how to build AI agents that are not just powerful, but also controllable, secure, and reliable.

Trust OS provides the tools to manage everything from the AI's personality and knowledge base to robust security guardrails that protect against misuse and data exposure. It ensures that the AI agents you build are safe, trustworthy, and aligned with your business needs.

You can learn more about it in our introductory blog post on Trust OS.


Words from our AI agent security hackers

But don't just take my word for it. The energy during the competition was palpable, and the feedback we received highlighted how impactful the event was for everyone involved, regardless of their role. Here’s what some of the participants had to say:

"Kyah! That was really fun, haha. The best security gamification training lol. Thanks for the great event!"

"It was rather fun, and I think the competitive nature really drove people to spend a lot more time than they maybe would have."

"I hadn't targeted our agents hard until now, but when I tried it, I realized that we should improve some things…… and it helped me a lot."

From competition to customer protection: Further fortifying our AI agents

Organizing a company-wide CTF event was an intensive yet rewarding experience. There's a unique joy in seeing colleagues passionately engaged in AI agent security, proving that learning and adopting security best practices can be fun.

HackTheBird was an invaluable investment in our security culture and our AI agent platform. By encouraging our own team to find and exploit vulnerabilities, we've sharpened our defenses and turned takeaways into better agentic AI security features.

Our commitment to providing secure enterprise AI solutions is unwavering. Initiatives like HackTheBird are a testament to our proactive approach to AI security, one that anticipates threats, fosters expertise, and delivers more resilient, trustworthy AI agents to our valued customers. We will continue to push the boundaries, adapt to emerging threats, and build the future of secure enterprise-grade AI communications.

To learn more about Sendbird's enterprise-grade security posture, you can read about: