15 AI risks to your business and how to address them

AI promises a wealth of opportunities for businesses, but realizing its transformative benefits also means addressing its potential risks.

Today, 72% of organizations are using AI in some form, yet only 20% have a formal AI risk strategy in place. As a result, stories of AI risks and costly missteps—from data privacy breaches to ethical lapses—are increasingly in the news. Without proper safeguards for AI, businesses face an increased risk of cybersecurity threats, regulatory violations, legal liability, and a loss of public trust.

For IT leaders and stakeholders, understanding these risks is vital to turning AI projects into safe, successful, and scalable solutions. It means tackling questions like:

- How do you systematically identify and mitigate the risks associated with AI?

- What guardrails can ensure your AI systems remain safe, ethical, and compliant over time?

This article explores the 15 greatest risks of AI to businesses and how to address them using effective AI risk management frameworks, AI governance, and AI risk management tools.


Ethical and legal risks of AI

The ethical and legal risks of AI are among the most pressing for enterprises. With AI set to become the new IT bedrock for enterprises, it must be safe and legal—both for customers and companies to use—or the risks may outweigh the rewards.

While most executives consider AI ethics important, only 20% say their organization’s practices on ethical AI currently match these stated principles and values.

Here are five major pitfalls around AI ethics and legality and how to avoid them.

1. Bias

AI algorithms—or the rules AI systems use to make decisions—can inherit biases from the data they were trained on. These learned biases can be replicated across the AI lifecycle, leading not only to inaccurate outputs, but also outcomes that are potentially harmful, unfair, or discriminatory.

For example, iTutorGroup, which offers English language classes to Chinese students, settled an age discrimination lawsuit after its AI-driven recruiting software automatically rejected female applicants aged 55 or older and male applicants aged 60 or older. Cases like this make mitigating AI bias a key step in avoiding financial loss, ensuring regulatory compliance, avoiding reputational damage, and maintaining user trust.

How to address:

Establish an AI governance framework that addresses bias mitigation as part of a comprehensive AI strategy.

Mitigate bias proactively by using representative datasets, evaluating fairness metrics, and building diverse AI teams.

Identify biased outputs by continuously monitoring AI model outputs, auditing data for bias, and running post-deployment impact assessments, then retrain models as needed.

Use bias detection tools like AI Fairness 360 (IBM), Fairlearn (Microsoft), or Google’s What-If Tool.
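To make "evaluating fairness metrics" concrete, the sketch below compares selection rates across groups in plain Python. It is a minimal illustration, assuming binary decisions (1 = selected) and one group label per record; the function names and sample data are hypothetical and are not the API of any tool listed above.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions, groups):
    """Largest gap in selection rate between any two groups (0 = parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(decisions, groups):
    """Lowest selection rate divided by the highest (1 = parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical screening outcomes: 1 = advanced, 0 = rejected.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["under_55"] * 4 + ["55_plus"] * 4

print(selection_rates(decisions, groups))
print(demographic_parity_difference(decisions, groups))
print(disparate_impact_ratio(decisions, groups))
```

A disparate impact ratio below 0.8 is often treated as a warning sign (the "four-fifths" rule of thumb used in US employment contexts), though the right threshold depends on your use case and jurisdiction.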


2. Lack of transparency and explainability

AI algorithms and models often function as “black boxes,” making it difficult to understand their reasoning and how they arrived at a certain output. This lack of transparency and explainability—or the ability to justify AI’s decisions—complicates efforts to take proactive measures that mitigate risk.

Businesses that fail to be transparent about or accountable for their AI systems risk losing public trust, especially in high-stakes areas like AI in healthcare or AI in finance. For example, as Facebook's experience shows, an executive who cannot explain how the company's algorithm makes decisions that influence the public can invite bad press and regulatory investigations.

How to address:

Adopt explainable AI techniques such as continuous model evaluation.

Assess the interpretability of AI results by auditing and reviewing AI outputs based on explainability standards within a clear AI governance framework.

Use explainable AI tools like LIME, SHAP, or AI Explainability 360 (IBM).
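One simple, model-agnostic way to see which inputs drive a model's decisions is permutation importance: shuffle one feature at a time and measure how much accuracy drops. The sketch below is a stdlib-only illustration of that idea, not how LIME or SHAP work internally; the `approve_loan` model and the sample data are hypothetical.

```python
import random


def accuracy(model, rows, labels):
    """Share of rows where the model's prediction matches the label."""
    hits = sum(model(row) == label for row, label in zip(rows, labels))
    return hits / len(rows)


def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled."""
    rng = random.Random(seed)  # fixed seed keeps the result reproducible
    baseline = accuracy(model, rows, labels)
    importances = {}
    for feature in rows[0]:
        drops = []
        for _ in range(n_repeats):
            column = [row[feature] for row in rows]
            rng.shuffle(column)
            shuffled = [
                dict(row, **{feature: value})
                for row, value in zip(rows, column)
            ]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances[feature] = sum(drops) / n_repeats
    return importances


def approve_loan(applicant):
    """Hypothetical black-box model: only income affects the decision."""
    return applicant["income"] >= 50_000


rows = [{"income": i * 10_000, "shoe_size": 36 + i} for i in range(1, 11)]
labels = [approve_loan(row) for row in rows]

print(permutation_importance(approve_loan, rows, labels))
```

A feature the model ignores (here, `shoe_size`) scores zero, while a feature it depends on shows a positive accuracy drop. That gives reviewers a starting point for asking whether the influential features are appropriate.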

Learn more: How to make AI agents more transparent by reviewing their logs

3. Lack of accountability

As AI systems increasingly influence decision-making and real life, it raises the question: Who is responsible when AI systems make mistakes? Who is accountable when AI decisions cause harm?

Such questions are at the heart of issues with self-driving cars and facial recognition technologies. Regulations that address them are still being worked out. In the meantime, to avoid legal disputes, loss of trust, and reputational damage, businesses must ensure that someone is responsible for how these decisions are made and for their knock-on effects.

How to address:

Maintain detailed logs and audit trails to facilitate reviews of AI’s behavior and decisions.

Implement role-based access controls for AI to ensure accountability.

Document decisions during AI design, deployment, and testing for inspection if needed.

Build accountability into AI using risk frameworks such as: EU Ethics Guidelines for Trustworthy AI, OECD AI Principles, or NIST AI Risk Management Framework.
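As one illustration of the audit-trail idea above, the sketch below keeps an append-only log of AI decisions in which each entry's hash covers the previous entry, so later edits become detectable. It is a minimal sketch under stated assumptions: the field names (`actor`, `model_version`, and so on) are hypothetical, inputs are assumed to be JSON-serializable, and a production system would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(entry):
    """SHA-256 over every field except the stored hash itself."""
    body = {k: v for k, v in entry.items() if k != "hash"}
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(log, actor, model_version, inputs, output):
    """Append a decision record linked to the previous entry's hash."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,                  # who or what triggered the decision
        "model_version": model_version,  # which model produced it
        "inputs": inputs,
        "output": output,
        "prev_hash": log[-1]["hash"] if log else "",
    }
    entry["hash"] = _digest(entry)
    log.append(entry)
    return entry


def verify_chain(log):
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = ""
    for entry in log:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(entry):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "support-bot", "v1.2", {"ticket": 101}, "refund_denied")
append_entry(audit_log, "support-bot", "v1.2", {"ticket": 102}, "refund_approved")
print(verify_chain(audit_log))
```

Recording the actor and model version alongside each decision gives reviewers a way to trace an outcome back to a responsible owner and a specific model release.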

4. Regulatory non-compliance

Businesses face steep fines and legal penalties should they fail to comply with government regulations such as GDPR or sector-specific guidelines. AI regulations are evolving fast and vary by region, requiring organizations to stay informed and compliant. A proactive and well-monitored approach can help to mitigate risk and stay current with the shifting regulatory landscape.

How to address:

Assign a legal team to track, interpret, and adapt to the evolving compliance landscape.

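Align your AI governance strategy with established frameworks such as the EU Ethics Guidelines for Trustworthy AI, the OECD AI Principles, or the NIST AI Risk Management Framework.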

Maintain standardized documentation and audit trails across teams.

5. Intellectual property (IP) infringement

Generative AI can produce art, music, text, and code that closely mimics the work of individual people. However, because these AI systems are trained on massive datasets pulled from the internet, they often ingest copyrighted content without proper licensing or attribution. This raises questions: Who owns the copyright to AI-generated content? What about content created from AI training data that includes some unlicensed material?

IP issues around AI-generated content are still evolving. However, this legal grey area around who owns and has permission to use AI-generated content creates challenges and potential liabilities for enterprises. For example, Getty Images is suing Stability AI, the company behind the AI art generator Stable Diffusion, for allegedly copying over 12 million images from its database to train its AI models.

How to address:

Implement checks that monitor AI training data for copyrighted content.

Be cautious about inputting sensitive IP into AI systems.

Audit AI model outputs to ensure compliance with IP regulations and avoid risk exposure.


Data risks of AI

The datasets that AI systems rely on can be vulnerable to cyberattacks, tampering, and data breaches. One study found that 96% of business leaders believe that adopting generative AI makes a security breach more likely, yet only 24% have secured their current generative AI projects.

As with other technology, businesses can mitigate the data risks of AI by protecting data integrity, security, and privacy throughout the AI lifecycle.

Here are the three biggest risks around AI and data, and how to address them.

6. Data security

By breaching the datasets that power AI technologies, bad actors can launch cyberattacks on enterprises. This can result in unauthorized access, compromised confidentiality, data loss, and reputational damage—especially in regulated industries such as AI-driven healthcare.

AI tools can also be manipulated to clone voices, create fake identities, and generate phishing emails that put traditional security measures to the test. In fact, since 2022, there’s been a 967% increase in credential phishing attacks, with attackers using ChatGPT to create convincing phishing emails that bypass traditional email filters.

How to address:

Establish an AI security and safety strategy.

Conduct threat modeling and risk assessments to find security gaps in AI environments.

Assess vulnerabilities in AI models using adversarial testing.

Adopt a secure-by-design approach for the AI lifecycle to safeguard AI training data.

Consider vertical AI agents for cybersecurity that enable continuous monitoring and threat-response.

7. Data privacy

AI development environments can leak sensitive data if not secured properly. Large language models (LLMs)—which are the underlying models for many AI applications—are trained on massive volumes of data, which is typically sourced by scraping websites with web crawlers without users’ consent.

If this training data is exposed and happens to contain sensitive or personally identifiable information (PII), enterprises could face regulatory penalties, user backlash, and loss of public trust. Additionally, AI systems that handle customers’ sensitive personal data can be vulnerable to privacy breaches, leading to regulatory and legal issues.

How to address:

Inform users how data is collected, stored, and used.

Provide opt-out mechanisms.

Consider using synthetic data to preserve privacy.

Use data minimization and data anonymization techniques to mask PII.
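The last point can be sketched in a few lines of Python. This is a simplified illustration, assuming PII appears as email addresses and phone numbers in free text; the regular expressions and field names are hypothetical and will not catch every format, so real deployments typically rely on dedicated PII detection tooling.

```python
import re

# Deliberately simple patterns for illustration; they are not exhaustive.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text):
    """Replace email addresses and phone numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def minimize(record, allowed_fields):
    """Data minimization: keep only the fields the AI task actually needs."""
    return {k: v for k, v in record.items() if k in allowed_fields}


ticket = {
    "customer_name": "Jane Doe",
    "plan": "enterprise",
    "message": "Contact jane.doe@example.com or +1 (555) 010-9999 today",
}

safe = minimize(ticket, {"plan", "message"})
safe["message"] = mask_pii(safe["message"])
print(safe)
```

Minimizing first and masking second means fields the model never needs (such as the customer's name) are dropped entirely rather than merely obscured.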

8. Data integrity

AI models and systems are only as reliable as the data they’re trained on. Using outdated, unclean, corrupted, or incomplete data can lead to inaccurate outputs, false positives, or poor decision-making that puts the enterprise at risk of legal or regulatory issues and financial loss. This makes it critical to ensure the accuracy, currency, and trustworthiness of data throughout the AI lifecycle.

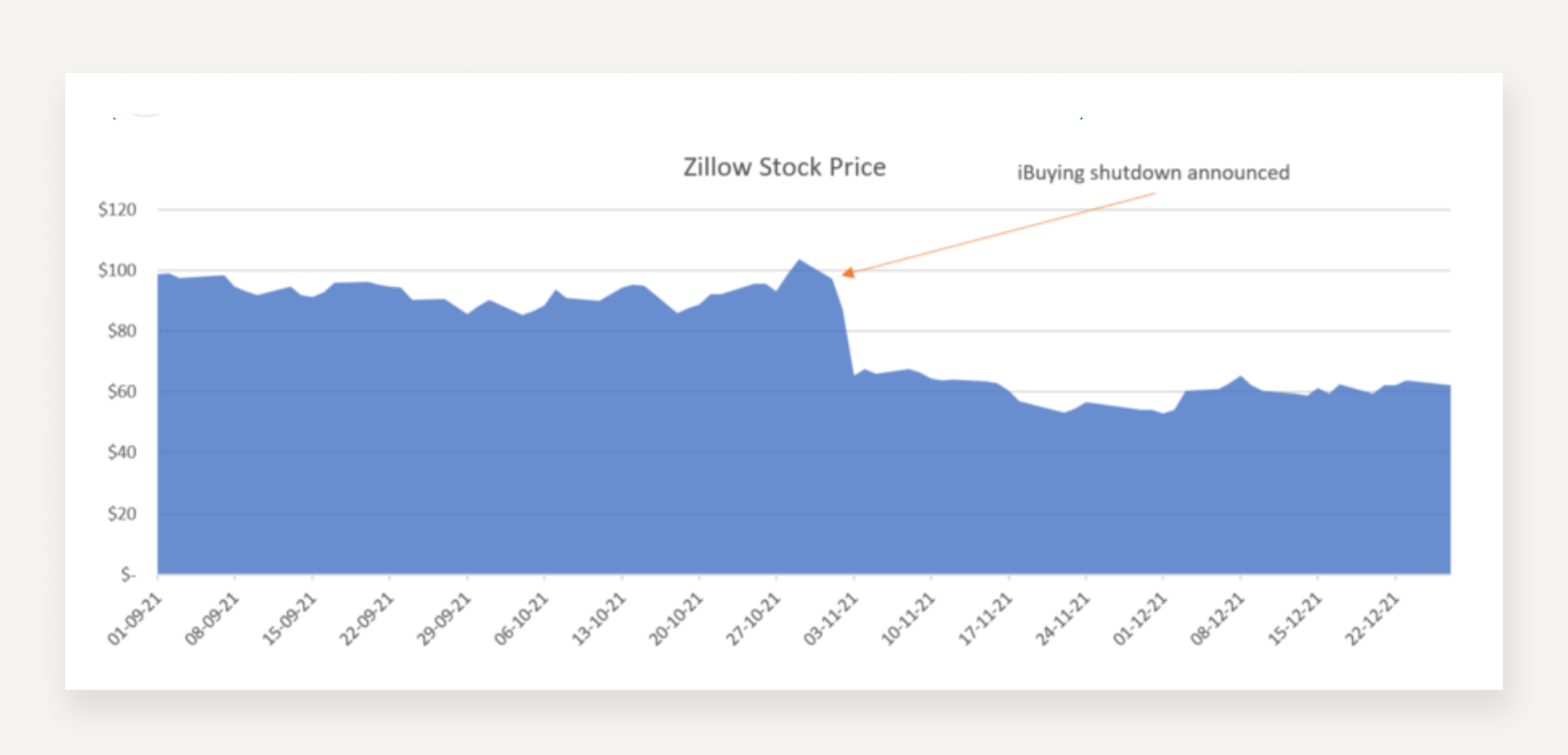

Zillow, for instance, used an AI model to predict home values in its iBuying program. However, the model’s training data didn’t account for sudden market shifts or hyperlocal variables, and so overestimated prices. These faulty AI outputs led the company to overbuy inventory, resulting in a $500 million loss.

How to address:

Vet third-party data sources for quality and documentation.

Audit AI models after deployment to assess data quality and impact.

Regularly retrain models using the latest data and reevaluate model performance for accuracy.
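Some of these checks can be automated before data ever reaches a model. The sketch below flags incomplete or stale records; it is a minimal example, and the field names, the 90-day freshness window, and the sample listings are all hypothetical.

```python
from datetime import date


def validate_records(records, required, max_age_days, today):
    """Return (index, problem) pairs for incomplete or stale records."""
    problems = []
    for i, record in enumerate(records):
        # Completeness: every required field must be present and non-empty.
        for field in required:
            if record.get(field) in (None, ""):
                problems.append((i, f"missing {field}"))
        # Currency: records older than the freshness window are flagged.
        updated = record.get("updated")
        if updated is not None and (today - updated).days > max_age_days:
            problems.append((i, "stale"))
    return problems


listings = [
    {"price": 300_000, "zip": "98101", "updated": date(2024, 5, 1)},
    {"price": None, "zip": "98101", "updated": date(2024, 5, 20)},
    {"price": 410_000, "zip": "98052", "updated": date(2023, 1, 15)},
]

issues = validate_records(
    listings,
    required=("price", "zip", "updated"),
    max_age_days=90,
    today=date(2024, 6, 1),
)
print(issues)
```

Passing `today` in explicitly keeps the check reproducible, which helps when the same validation is rerun as part of a post-deployment audit.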


Operational risks of AI

Like any technology, AI models and systems are susceptible to operational risks. If left unaddressed, these risks can lead to system failures, cybersecurity breaches, and costly decisions.

Here are the seven major operational AI risks, and how to address them.

9. Misinformation and manipulation

As with cyberattacks, bad actors can exploit AI technologies to spread misinformation, create deepfakes, and impersonate individuals. For example, a finance worker was tricked into wiring $25 million to scammers after joining a Zoom call with what he thought were real executives—but all were deepfake AI-generated replicas.

AI hallucinations—or when AI confidently posits error as truth—also open the door to misinformation and manipulation. If spread across social media, AI-generated falsehoods can be used to harass individuals, create liabilities, and damage the business's reputation.

How to address:

Train users and employees how to spot misinformation and disinformation.

Validate and fact-check AI outputs before taking action.

Prioritize high-quality data and rigorous model testing to ensure accurate outputs.

Use human oversight to detect hallucinations and errors.

Follow the latest research in deepfake detection and media authentication.

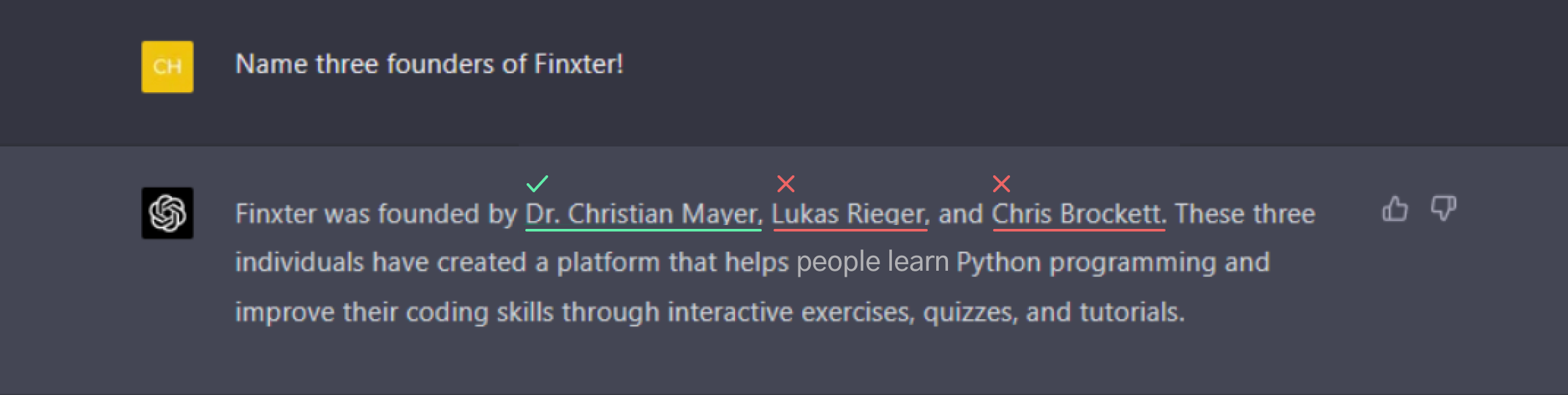

10. Model risks

Bad actors can also target AI models, compromising the model’s integrity by manipulating its architecture, weights or LLM parameters, and other core components that determine its performance and behavior. For example, with AI-driven self-driving cars, hackers could tamper with how an AI model classifies objects, causing it to misread a stop sign and operate unsafely.

How to address:

Monitor models for unexpected changes in performance using machine learning observability tools.

Safeguard models at rest with containerization and isolated environments.

Conduct adversarial testing to check for model vulnerabilities.

11. Model drift

AI model performance can degrade over time as the relationship between its training data and the real world changes. This process, known as model drift, results in outputs that are potentially less accurate, less relevant, or even harmful. If left unaddressed, outdated AI models can lead to compliance violations, costly decisions, and harmful outcomes.

There are two main types:

Data drift: The input data distribution changes, but the task stays the same.

Example: The way people phrase search queries changes over time.

Concept drift: The relationship between inputs and outcomes changes.

Example: What was once considered a high-value customer no longer behaves the same way.

How to address:

Continuously track model performance for drift using AI drift detection and observability tools.

Retrain models regularly to ensure accurate outputs based on fresh data.

Document model drift incidents to inform team learning, guide model updates, and maintain AI transparency.
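Data drift in a single numeric feature can be tracked with a statistic such as the Population Stability Index (PSI), which compares the binned distribution of live data against a training-time baseline. The sketch below is one minimal way to compute it, assuming equal-width bins taken from the baseline range; the function name and sample data are hypothetical, and dedicated observability tools offer more robust detectors.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a live sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            # Clamp values outside the baseline range into the edge bins.
            counts[min(max(index, 0), bins - 1)] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(count / len(values), 1e-6) for count in counts]

    expected_shares = shares(expected)
    actual_shares = shares(actual)
    return sum(
        (a - e) * math.log(a / e)
        for e, a in zip(expected_shares, actual_shares)
    )


baseline = [i / 100 for i in range(100)]      # training-time feature values
live = [0.5 + i / 200 for i in range(100)]    # live values, shifted upward

print(population_stability_index(baseline, baseline))
print(population_stability_index(baseline, live))
```

A commonly cited rule of thumb treats a PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift that may warrant retraining, but these thresholds should be tuned to your own data.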

12. Sustainability and scalability

AI systems are new and complex, so it can be a challenge to move from experimentation to operational AI that delivers long-term business value. In a recent study on AI leaders from Boston Consulting Group, 74% of respondents said they struggle to achieve and scale value from AI initiatives.

If scalability and sustainability aren’t part of a long-term AI strategy, enterprises risk losing money on their efforts due to inconsistent AI performance, elevated operating costs, and lack of buy-in from teams and leadership.

How to address:

Invest strategically in a few high-priority AI opportunities, then scale to maximize AI’s value.

Focus on integrating AI into cost reduction and revenue generation efforts to offset costs.

Reshape core business processes and support functions as part of a dedicated AI transformation.

Learn more: What is AI orchestration: A beginner's guide

13. Integration challenges

Integrating AI with existing infrastructure is complex and resource-intensive. In fact, according to Deloitte, 47% of AI adopters cite integration with existing systems as a top barrier to success.

Businesses often encounter issues with compatibility, data silos, and system interoperability during AI integration, which can lead to malfunctions or system failures that disrupt operations, increase IT maintenance, or cause financial setbacks. Over time, this can result in a loss of trust in AI projects and their champions.

How to address:

Invest in scalable, interoperable architecture for AI systems.

Develop AI in cross-functional teams that include data scientists and machine learning engineers.

Reduce vendor lock-in by using open-source, modular AI when possible, which limits exposure to vendor-related vulnerabilities or disruptions.

Learn more: Why enterprise-grade infrastructure is key in the AI agent era

14. Scarcity of AI talent

There's a shortage of skilled personnel to design, deploy, and manage AI systems at scale. In a study by Gartner, 56% of organizations cited a lack of AI talent as the biggest barrier to AI adoption. This can lead to stalled projects, wasted investments, and increasing reliance on costly third-party vendors.

AI isn’t a plug-and-play solution, so even the most promising AI initiatives can fall short of their true potential without the right talent to support them throughout the AI lifecycle.

How to address:

Upskill and reskill employees by launching training programs on AI, machine learning, and data science.

Recruit AI talent with competitive compensation, flexible work, and clear career paths.

Partner with AI experts and consultants to accelerate projects and transfer knowledge before bringing maintenance back in-house.

15. Lack of adoption

Even well-integrated AI systems will fail to live up to their potential if users don’t understand or trust them. Employees may fear job displacement, fail to grasp AI-driven roles, or distrust AI decisions, all of which can undermine adoption efforts and return on investment (ROI).

AI is more than a tech shift—it’s a people shift. If organizations don’t invest in change management to realign around AI, they risk investing in AI tools and practices that teams don’t use or trust, and so fall short of success.

How to address:

Involve stakeholders early, framing AI tools as a way to enhance roles instead of replacing them.

Explain the vision for AI, emphasizing the opportunities for upskilling to diminish resistance and uncertainty.

Invest in training and enablement to help employees adapt and realize the value of AI workflows and an AI workforce.


What is AI risk management?

AI risk management is the process of systematically identifying, assessing, and mitigating the potential risks associated with AI technology to ensure safe and ethical use. It aims to minimize AI's negative impacts and maximize its benefits while fostering trust among customers, stakeholders, and regulators.

AI risk management includes these key aspects:

- Proactive approach: AI risk management is about anticipating and preventing AI risks, rather than just reacting to them.

- Systematic process: It involves a structured approach to identifying, analyzing, and addressing risk across the AI lifecycle.

- Focus on trustworthiness: The goal is to ensure that AI systems are safe, reliable, fair, and accountable in the eyes of users and stakeholders.

- Comprehensive: AI risk management accounts for multifaceted risks, including ethical, security, and operational risks.

By addressing AI risks early on, businesses can minimize the potential negative consequences of AI development and use. This helps to foster a culture of innovation without fear of costly missteps, and can ultimately ensure AI systems are more reliable, effective, and competitive.

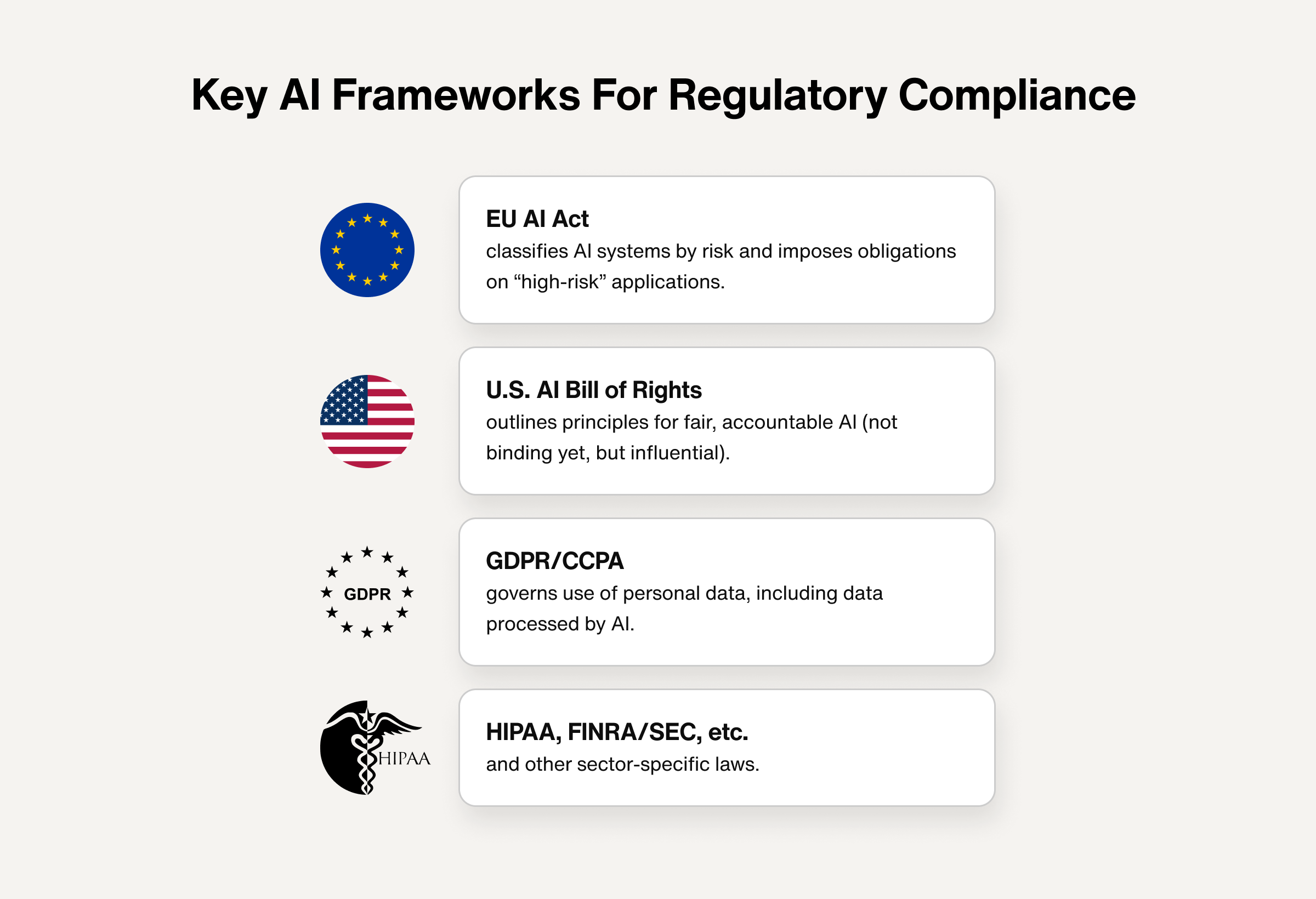

3 top AI risk management frameworks

AI risk frameworks are sets of guidelines and practices for managing risks across the AI lifecycle. They outline the roles, responsibilities, policies, and procedures that organizations follow as they develop, design, deploy, and maintain AI systems.

These AI risk frameworks are the playbooks on how to use AI in a way that minimizes risks, ensures ongoing regulatory compliance, and upholds ethical standards.

The most trusted AI risk management frameworks include:

The NIST AI Risk Management Framework: The National Institute of Standards and Technology’s (NIST) AI Risk Management Framework is the benchmark for AI risk management. Its four-stage approach was developed in collaboration with the public and private sectors and released in 2023.

The EU AI Act: The EU Artificial Intelligence Act (EU AI Act) is a law that governs the development and use of artificial intelligence in the European Union (EU).

ISO/IEC standards: The International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC) have developed standards for various aspects of AI risk management across the AI lifecycle.

The top AI risk management tools

If you’re ready to start mitigating AI risks, there’s a growing set of AI-driven tools that can help to identify, assess, and mitigate these issues to ensure the most secure, ethical, and compliant operations.

A few of these include:

Comprehensive risk assessment and compliance management: RiskWatch, LogicGate

Financial risk assessment and fraud detection: Previse, Quantifind

Incident management and compliance reporting: Resolver, Compliance.ai

AI risks: Inevitable but not intractable

As adoption accelerates, so does the need for thoughtful governance, ethical safeguards, and proactive risk management. By recognizing these AI risks and challenges early, business leaders can build robust AI risk management strategies from the start—allowing them to harness AI’s full potential while minimizing its liabilities.

If you’re looking for help creating your AI risk strategy, Sendbird can help. Our team of AI experts, including machine learning engineers and data scientists, is skilled and ready to help enterprises craft and deploy an effective AI risk strategy at scale.

Our robust AI agent platform makes it easy to build AI agents for cybersecurity and risk on a foundation of enterprise-grade infrastructure that ensures optimal performance with unmatched adaptability, security, compliance, and scalability.

Contact our team of friendly AI experts to get started.
