AI metrics: How to measure and evaluate AI performance

AI metrics are where the guesswork of AI strategy meets scientific and operational rigor. They provide a framework for evaluating AI performance across technical execution and business outcomes, helping ensure AI operations are safe, transparent, and successful.

For leaders under pressure to prove return on investment (ROI), AI metrics are proof of progress—showing how AI-powered automation, hyper-personalization, or customer support translates into measurable value—and pinpointing where to focus next.

For compliance, legal, and governance teams, they’re the foundation of AI transparency and trust, ensuring AI operates in a way that aligns with internal standards, regulatory frameworks, and societal responsibility.

For customers, AI metrics are a bond of trust, representing organizations’ efforts to deepen relationships without compromising hard-won loyalty.

Yet according to a 2024 IBM study, only 35% of enterprises track AI performance metrics, even though 80% say reliability of AI operations is their top concern.

In this guide, you’ll learn the key AI metrics across domains, why they matter, and how to connect them to real-world success using business AI KPIs. Plus, a few best practices to help you get started.

Key takeaways

Measuring AI requires various metrics that span the domains of business, technical performance, user experience, and AI responsibility.

AI metrics must align with specific business goals and industry needs, as certain metrics are associated with distinct AI use cases.

Combine quantitative metrics with user feedback to get a fuller picture of AI-powered customer service, support, and CX.

Look beyond traditional business metrics to gain a true understanding of technical AI performance.

What are AI metrics?

AI metrics are the quantitative and qualitative measures that evaluate an artificial intelligence (AI) model's or system’s effectiveness, efficiency, reliability, and alignment with broader strategic objectives. Their purpose is to provide visibility into AI performance, ensuring AI is effective, trustworthy, and generates business value.


Why are AI metrics important?

AI metrics are essential because traditional measurements don’t capture how AI systems arrive at or enable outcomes. Unlike traditional deterministic IT systems, AI is probabilistic. Measuring its performance requires new ways to account for its inherent uncertainty and how its unique capabilities for reasoning, generation, and autonomous action can influence business outcomes.

For example, a voice AI concierge powered by natural language processing (NLP) and machine learning (ML) can automate thousands of daily customer interactions. But evaluating it solely on traditional metrics like average handle time or customer satisfaction (CSAT) offers an incomplete picture. Truly understanding its performance requires technical AI performance metrics, such as model accuracy, that reveal its real impact on customer experience, trust, and growth.

In short, AI’s complexity and dynamism demand a dedicated measurement framework. Only by expanding the scope of AI evaluation can organizations ensure AI outputs and decisions are safe and effective. This makes AI metrics crucial for effective AI governance and secure, scalable AI operations, which regulators, stakeholders, and users increasingly expect.

So, what exactly should you measure? Here’s an overview of the most essential categories of AI metrics.

1. Technical AI performance metrics

These metrics enable organizations to measure the accuracy and reliability of an AI model or system in performing its core tasks. They show whether AI meets technical standards and operates consistently, and they highlight where data scientists, engineers, and AI teams can make improvements.

Accuracy: Measures how often the AI provides the correct output. High scores translate to fewer errors and better CX. For example, in AI customer service, a 5% increase in model accuracy can result in fewer repeat customer inquiries and higher CSAT scores.

Precision: Shows how many of AI’s positive predictions (e.g., when to escalate a support ticket) were correct. Reduces false positives and correlates with operational productivity.

Recall: Measures how many real issues or user intents AI identifies successfully. This ensures critical errors (e.g., billing errors, delivery complaints) aren’t missed.

F1 score: Combines precision and recall into a single number (their harmonic mean). A high score signals the AI keeps both false positives (wrong flags) and false negatives (missed issues) low.

AUC-ROC (Area Under the ROC Curve): Evaluates how well AI distinguishes between categories, such as “frustrated” vs “neutral” customer sentiment. High scores reflect a level of nuanced discernment suitable for critical or customer-facing applications.

MAE (Mean Absolute Error): Reflects how far off AI’s predictions are, on average, from the actual values. Useful when AI predicts numbers, such as sales forecasts.

Pro tip: Regularly monitor technical metrics to ensure AI solutions are reliable and guide model improvements, helping address risk and performance at each level.
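
To make the definitions above concrete, here is a minimal sketch in plain Python of how these technical metrics could be computed from labeled evaluation data. The function names and the sample data are illustrative assumptions, not part of any specific platform, and the AUC calculation uses the simple pairwise-comparison formulation, which is only practical for small samples.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # correct flags
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # wrong flags
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed issues
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def auc_roc(y_true, scores):
    """Chance that a random positive outscores a random negative (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def mean_absolute_error(actual, predicted):
    """Average absolute gap between predicted and actual numeric values."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical evaluation set: 1 = "ticket should be escalated"
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)

# Hypothetical model confidence scores for a sentiment classifier
auc = auc_roc([1, 0, 1, 0], [0.9, 0.4, 0.6, 0.7])

# Hypothetical sales forecast vs. actual values
mae = mean_absolute_error([100, 150, 200], [110, 140, 215])

print(metrics, auc, mae)
```

In practice, teams often rely on an established library for these calculations. The point of the sketch is only to show how each number follows from counts of correct and incorrect predictions.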

2. AI operational efficiency metrics

These metrics show how well your AI handles workloads, processes user inputs, and scales under pressure. By helping to manage and ensure AI operations are efficient and reliable, these metrics are key to ensuring AI reduces costs and improves business outcomes.

Response time (latency): The time it takes for AI to produce an output after receiving an input. Fast responses are critical in real-world applications (e.g., payment processing, voice-enabled AI support) as delays degrade user experience and engagement. Low latency (fast responses) is typically defined as 0.1-1.0 seconds.

Throughput: The number of tasks or operations AI can perform in a given unit of time (e.g., 10,000 requests/minute). High scores are crucial if AI must handle large data volumes without degrading service and UX quality. This is key in ecommerce, for example, where retail AI agents must handle surging holiday ticket volumes without slowdowns to preserve customer loyalty.

Error rate: The percentage (%) of incorrect or failed AI outputs relative to total inputs. Low rates suggest reliability, critical in high-stakes industries like finance or healthcare, and customer-facing AI applications where trust and brand reputation are on the line.

Compute usage: Measures how efficiently AI uses computing resources (e.g., tokens, API calls, memory). High efficiency leads to lower operating costs. For example, an AI support solution that handles more customer queries per dollar of compute is more likely to show ROI.

System uptime: The % of time the AI system operates without outages. High uptime shows reliability, while low uptime often translates to loss of sales, trust, or brand loyalty. Meeting a commonly cited target of 99.99% uptime helps avoid SLA penalties and sustain performance.

Scalability: Evaluates AI’s ability to maintain speed and accuracy as it processes more data, requests, or users. High AI scalability leads to lower operating costs, while low numbers can result in service crashes if traffic spikes.

Pro tip: AI isn’t a set-it-and-forget-it technology. AI models and systems require regular fine-tuning to operate optimally, as their performance can drift over time when live data deviates from the data they were trained on.
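
As a rough illustration of the operational metrics above, the sketch below summarizes a window of request logs. The log fields (`latency_s`, `ok`) and the sample values are hypothetical, and the nearest-rank percentile is just one of several common percentile definitions.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def operational_summary(requests, window_seconds, downtime_seconds):
    """Latency, throughput, error rate, and uptime for one observation window."""
    latencies = [r["latency_s"] for r in requests]
    failures = sum(1 for r in requests if not r["ok"])
    return {
        "p50_latency_s": percentile(latencies, 50),
        "p95_latency_s": percentile(latencies, 95),
        "throughput_per_min": len(requests) / (window_seconds / 60),
        "error_rate_pct": 100 * failures / len(requests),
        "uptime_pct": 100 * (window_seconds - downtime_seconds) / window_seconds,
    }


def allowed_downtime_minutes(uptime_target_pct, days=365):
    """Downtime budget implied by an uptime target over a period."""
    return (1 - uptime_target_pct / 100) * days * 24 * 60


# Hypothetical one-minute window of request logs (one failed request)
latency_samples = [0.2, 0.3, 0.25, 0.4, 1.2, 0.35, 0.3, 0.5, 0.45, 0.28]
requests = [{"latency_s": s, "ok": i != 4} for i, s in enumerate(latency_samples)]

summary = operational_summary(requests, window_seconds=60, downtime_seconds=0)
budget = allowed_downtime_minutes(99.99)

print(summary)
print(f"99.99% uptime allows about {budget:.1f} minutes of downtime per year")
```

Reporting a high percentile such as p95 alongside the median is a deliberate choice here: an average can hide the slow responses that users actually notice.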


4. Generative AI metrics

Generative AI (genAI) models can produce text, code, images, or audio for many applications. According to Gartner, genAI is now the most widely adopted form of AI. The following metrics help organizations evaluate how relevant, inclusive, reliable, and human-like these AI-generated outputs are using a mix of quantitative measures, qualitative reviews, and human-in-the-loop evaluation.

The top metrics for measuring success in AI-generated responses include:

Content accuracy and relevance metrics: Show how closely AI-generated outputs align with correct information in a specific context. This helps ensure AI is accurate and meaningful for users, as well as business-critical operations. These include:

BLEU/ROUGE scores: Compares AI-generated text (like customer answers) with high-quality human examples to evaluate word overlap and sequences. Higher scores correspond to more accurate and relevant outputs. Commonly used in AI customer service, ecommerce, and retail.

METEOR (Metric for Evaluation of Translation with Explicit Ordering): Evaluates text quality beyond simple word matching like BLEU, accounting for synonyms, grammar, and fluency to better reflect alignment with human speech. Useful in evaluating multilingual AI systems like chatbots or AI-driven global banking.

Factuality and hallucination rate: AI can confidently posit false information as true (hallucinate). This metric tracks how often the AI provides verifiably true information, rather than fabricated or false details. High factuality is key. For instance, a customer who receives faulty guidance about a company’s return policies from a chatbot is unlikely to return.

Language naturalness metrics: These assess the coherence and fluency of language outputs generated by AI, ensuring they are natural and conversational to users:

Perplexity: Indicates how well AI predicts the next word or phrase in a sentence. Lower is better, and especially key for conversational AI. For example, with voice-enabled AI support agents, low perplexity suggests the model produces fluent, natural-sounding language, though it does not by itself show that answers are correct or helpful.

Tone and empathy: Evaluates how well the AI-generated output reflects the intended tone of voice. A high score indicates that customer-facing AI is emotionally resonant and aligned with users. Tone-optimized AI customer service can reduce negative customer sentiment, with one study showing improvements of 30%.

Visual quality metrics: These enable organizations to evaluate the realism, clarity, and detail quality in AI-generated imagery or videos. They include:

FID (Fréchet Inception Distance): Evaluates the quality and verisimilitude of AI-generated imagery by comparing features and diversity in AI imagery to that of real images. A lower FID score indicates more realistic and diverse images that accurately represent the real world.

Human feedback metrics: These involve humans in the loop (HITL) to assess AI-generated outputs for real-world performance:

Human preference score: This is direct human feedback that rates AI outputs on quality, tone, and helpfulness. Helps ensure customer-facing AI consistently meets customer expectations.

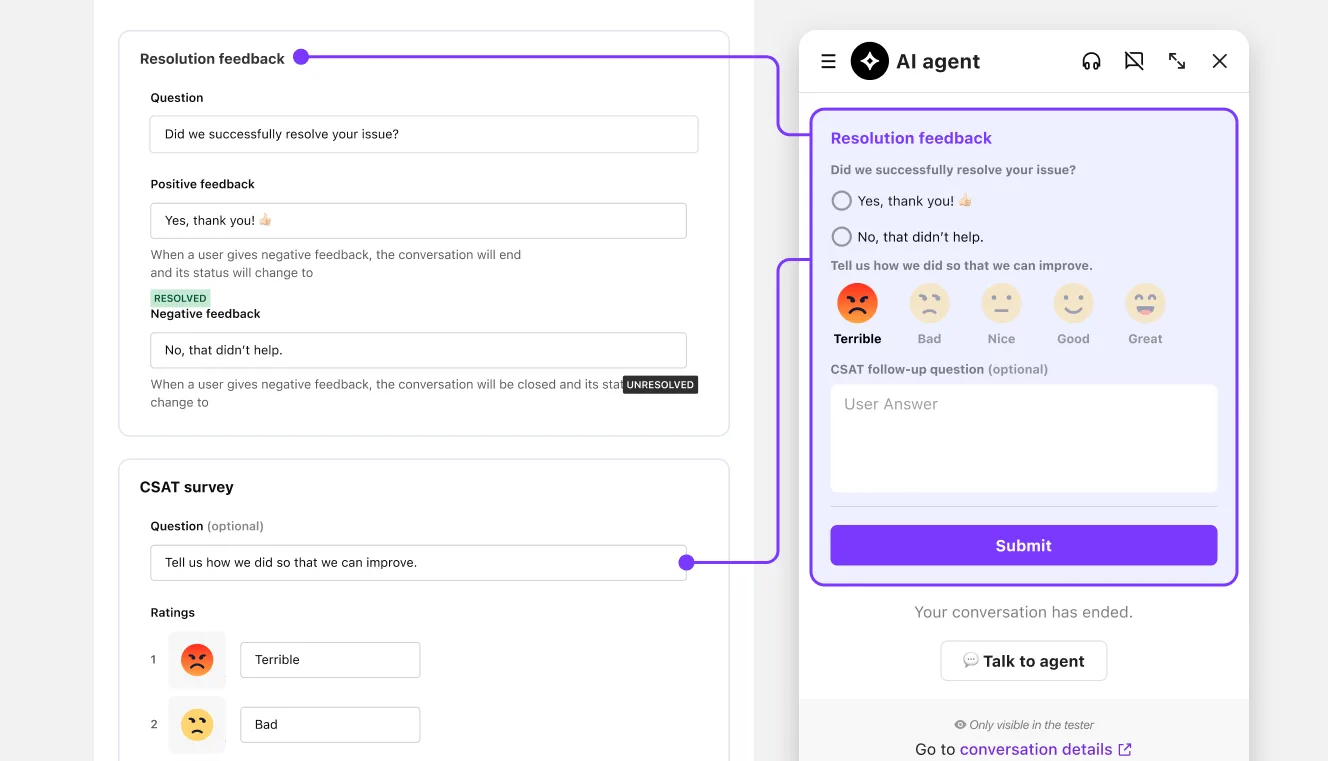

User feedback loop: The continuous collection of user ratings or reactions (such as a thumbs up, thumbs down) on the AI response. Integrating these insights enables real-time model fine-tuning, a virtuous cycle that helps boost CSAT and retention over time.
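
The sketch below illustrates the idea behind a few of these generative AI metrics. It is a simplified stand-in, not the full BLEU or ROUGE algorithms: it only counts single-word overlap (similar in spirit to unigram precision and ROUGE-1 recall), and it assumes per-token log-probabilities and human fact-check labels are already available. All names and sample values are hypothetical.

```python
import math
from collections import Counter


def unigram_overlap(candidate, reference):
    """Word-overlap precision and recall between an AI answer and a human reference."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # shared words, clipped to the smaller count
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    return precision, recall


def perplexity(token_logprobs):
    """exp of the negative mean natural-log probability the model gave each token."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def hallucination_rate(claim_is_supported):
    """Share of checked claims that reviewers could not verify."""
    unsupported = sum(1 for ok in claim_is_supported if not ok)
    return unsupported / len(claim_is_supported)


# Hypothetical AI answer vs. a human-written reference answer
precision, recall = unigram_overlap(
    "you can return items within 30 days",
    "items can be returned within 30 days of delivery",
)

# Hypothetical log-probabilities: the model gave each token a 50% probability
ppl = perplexity([math.log(0.5)] * 4)

# Hypothetical fact-check labels for five claims in AI responses
rate = hallucination_rate([True, True, False, True, True])

print(precision, recall, ppl, rate)
```

Overlap scores like these reward matching wording, not correctness, which is why the article pairs them with factuality checks and human feedback.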

5. AI agent evaluation metrics

AI agents (also known as agentic AI) are autonomous systems empowered to act on behalf of users or other systems. They operate in a cycle (or chain) of observation, reasoning, and action, which enables them to execute complex tasks end-to-end. While these autonomous capabilities offer new opportunities to businesses, they also require new metrics to track.

The following agentic AI evaluation metrics can help you assess their performance end-to-end:

Goal completion rate: The % of tasks or objectives an AI agent executes successfully from start to finish. Higher completion shows the agent's predictions or decisions are more reliable and production-ready. For instance, using AI agents to automate 80-90% of support queries can meaningfully reduce average resolution time and improve CSAT.

Autonomy score: Shows how often the agent acts without human intervention. Higher scores indicate a greater potential for task automation, which can free up human resources for higher-value work and enhance operational efficiency.

Action accuracy: The ratio of correct vs. failed (or irrelevant) actions taken by the agentic AI system. Higher ratios signal lower error rates, suggesting operational trust, as each avoided mistake prevents potential rework or customer frustration.

Agent reliability index: This is a weighted measure that combines AI agent uptime, accuracy, and success rate across sessions. A high index is a strong indicator that customers consistently receive quality experiences with AI agents.

Multi-step reasoning score: Measures how effectively an agent performs chained tasks (e.g., verify order status → check return policy → issue refund). A higher score indicates a logical and reliable agentic workflow that enables efficient task resolution. In AI for CX, this can lift first-contact resolution (FCR) and reduce human escalations.

Learning efficiency: Tracks how quickly the AI agent improves from feedback and outcome data. High scores reflect accelerated model optimization cycles, driving innovation and competitive advantage in a market that rewards velocity to value.

Adaptability: Measures the agent’s ability to adjust to new inputs, customer intents, or real-world conditions without re-training. AI agents can operate in real-time environments, using live data to dynamically tailor and target experiences, driving gains in revenue and CX—but only if they show high adaptability scores.
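
Several of these agent metrics reduce to simple ratios over session logs. The sketch below assumes a hypothetical `AgentSession` record and arbitrary example weights for the reliability index, since the weighting is something each team would define for itself. Action accuracy is expressed here as the share of correct actions out of all actions taken.

```python
from dataclasses import dataclass


@dataclass
class AgentSession:
    """Hypothetical per-session log record for an AI agent."""
    goal_completed: bool
    human_intervened: bool
    actions_total: int
    actions_correct: int


def agent_metrics(sessions):
    """Goal completion rate, autonomy score, and action accuracy across sessions."""
    n = len(sessions)
    total_actions = sum(s.actions_total for s in sessions)
    return {
        "goal_completion_rate": sum(s.goal_completed for s in sessions) / n,
        "autonomy_score": sum(not s.human_intervened for s in sessions) / n,
        "action_accuracy": sum(s.actions_correct for s in sessions) / total_actions,
    }


def reliability_index(uptime, accuracy, success_rate, weights=(0.3, 0.3, 0.4)):
    """Weighted average of three 0-1 scores; the default weights are illustrative only."""
    w_up, w_acc, w_succ = weights
    weighted = w_up * uptime + w_acc * accuracy + w_succ * success_rate
    return weighted / sum(weights)


# Hypothetical sample of four agent sessions
sessions = [
    AgentSession(True, False, 5, 5),
    AgentSession(True, True, 4, 3),
    AgentSession(False, True, 6, 3),
    AgentSession(True, False, 5, 5),
]

scores = agent_metrics(sessions)
index = reliability_index(
    uptime=0.999,
    accuracy=scores["action_accuracy"],
    success_rate=scores["goal_completion_rate"],
)

print(scores, round(index, 4))
```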


6. Responsible AI metrics

The machine learning (ML) models that power AI are human-made and therefore imperfect. They can produce unfair, incorrect, and potentially harmful outputs because of biased training data or flawed algorithm design. Tracking these metrics helps ensure AI operates in a way that aligns with policies and regulations around trust and safety, compliance, and societal well-being.

Fairness and ethics metrics: These help detect and eliminate algorithmic bias, ensuring historically marginalized groups experience AI in ways that are equitable, compliant, and risk-free.

Bias/fairness ratio: Measures whether AI treats all groups equally across demographic lines (e.g., race, gender). Shows whether each group receives different treatment, especially key in high-stakes scenarios like loan approvals. A balanced ratio indicates alignment with policies, standards, and laws that uphold brand equity and public trust.

Demographic parity: Evaluates if AI outcomes (e.g., response priority, discount eligibility) are equally distributed across user groups. For example, if an AI concierge gives preference to certain groups over others, this metric will be low.

Equal opportunity: Tests whether users with similar needs but different backgrounds receive the same quality of AI output. This helps to prevent bias in hiring systems or support triage systems that could favor users in historically privileged geographies over others.

Accountability, transparency, and compliance metrics: These help ensure AI predictions and decisions are transparent and explainable to stakeholders and users, mitigating the risks associated with AI use and ensuring AI operates in accordance with internal policies, regulations, and societal values.

Explainability index: Indicates how clearly the AI’s reasoning can be understood and communicated by human teams. A higher score equates to more interpretable outputs. If a customer is wrongfully denied a refund by AI, for instance, this helps improve accountability and guide improvements.

Accountability/auditability: Assesses how traceable AI’s decisions are from input to output. High scores suggest AI teams can clearly see why AI gave a particular response to the user. Key for error correction and AI compliance.

Human oversight ratio: Tracks how many AI-driven interactions are reviewed or approved by humans. Key in high-stakes use cases like healthcare or finance. A balanced ratio suggests AI logic and workflows are reliable enough to run without reviewing every interaction, while humans stay in the loop where the stakes are high.

Compliance score: Tracks whether AI outcomes align with compliance regulations and internal governance policies. A high score shows AI operations meet global standards for fairness, safety, and transparency.

Guardrail compliance: AI guardrails are mechanisms designed to prevent harmful, offensive, or non-compliant AI outputs. High numbers reflect that AI outputs adhere to global safety, compliance, and organizational guidelines, upholding user trust, brand reputation, and legal standing. Low numbers indicate a need for more defined guardrails and enforcement mechanisms.

Pro tip: Responsible AI metrics can become a competitive advantage. In the AI-driven future, demonstrating—not just declaring—your AI is safe and ethical can elevate customer trust and loyalty.
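
To show how the fairness metrics above might be quantified, here is a minimal sketch. It uses one common formulation: demographic parity as the ratio of the lowest to the highest group approval rate, and equal opportunity as the gap in approval rates among people who actually qualified. The record fields (`group`, `qualified`, `approved`) and the data are hypothetical.

```python
from collections import defaultdict


def rate_by_group(records, only_qualified=False):
    """Share of approved outcomes per group, optionally among qualified users only."""
    totals, approved = defaultdict(int), defaultdict(int)
    for r in records:
        if only_qualified and not r["qualified"]:
            continue
        totals[r["group"]] += 1
        approved[r["group"]] += r["approved"]
    return {g: approved[g] / totals[g] for g in totals}


def demographic_parity_ratio(records):
    """Lowest group approval rate divided by the highest (1.0 = perfectly balanced)."""
    rates = rate_by_group(records)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


def equal_opportunity_gap(records):
    """Largest difference in approval rate among qualified users (0.0 = no gap)."""
    rates = rate_by_group(records, only_qualified=True)
    return max(rates.values()) - min(rates.values())


# Hypothetical decision log: 1 = yes, 0 = no
records = [
    {"group": "A", "qualified": 1, "approved": 1},
    {"group": "A", "qualified": 1, "approved": 1},
    {"group": "A", "qualified": 0, "approved": 1},
    {"group": "A", "qualified": 0, "approved": 0},
    {"group": "B", "qualified": 1, "approved": 1},
    {"group": "B", "qualified": 1, "approved": 0},
    {"group": "B", "qualified": 0, "approved": 0},
    {"group": "B", "qualified": 0, "approved": 0},
]

parity = demographic_parity_ratio(records)
gap = equal_opportunity_gap(records)

print(rate_by_group(records), parity, gap)
```

In this made-up sample, group B is approved a third as often as group A, and qualified users in group B are approved half as often, so both metrics would flag the system for review.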

7. AI customer service metrics

AI-powered customer service is increasingly showing ROI, with reported results such as a 20-30% reduction in service costs and 50% lower cost per conversation. The following metrics evaluate AI customer service, enabling teams to monitor both performance and CX and ensuring that AI chatbots, support agents, and conversational AI deliver on their promise of reduced costs and improved efficiency without compromising customer trust and safety.

First-contact resolution rate (FCR): The percentage of support queries resolved by AI in a single interaction without human intervention. High FCR correlates with fast, satisfying outcomes that drive customer satisfaction and internal productivity.

Containment rate: The % of AI-driven interactions resolved in full without requiring a human handoff. High containment signals AI successfully handles customer issues and provides a positive experience while reducing workload for human teams. Low numbers indicate a need to improve AI logic and workflows to avoid churn.

Average handling time (AHT): The average time it takes to resolve a support case when AI is involved. Low AHT indicates efficient operations. For example, AI support tools can automatically retrieve or summarize company policies or customer context for human agents by analyzing natural language cues to lift efficiency.

Customer sentiment shift: Tracks the change in customer sentiment before and after AI-assisted interactions. Positive shifts (e.g., from frustrated to satisfied) signal AI’s ability to adjust its tone and behavior to real-time context—a key predictor of operational efficiency and customer loyalty.

Escalation rate: The % of cases transferred from AI to human agents. Fewer escalations signal health in support operations. High rates suggest the need for additional AI training or prompt engineering to fix workflows or improper tool use.

Response quality index: Evaluates how relevant, accurate, and empathetic AI-generated responses are. A high score implies better efficiency and CSAT scores, while a low score means AI routinely provides inaccurate, unhelpful, or repetitive answers that frustrate customers and drive up escalations and ticket count.

Pro tip: Use a hybrid approach to AI support metrics, combining automated tracking with human QA review to ensure consistent, on-brand customer care at every touchpoint.
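
For the automated-tracking half of that hybrid approach, the sketch below shows how several of the customer service metrics above could be derived from conversation records. The field names and the sentiment scale (from -1.0 for very negative to 1.0 for very positive) are assumptions made for illustration.

```python
def support_metrics(conversations):
    """Containment, escalation, handle time, and sentiment shift for AI conversations."""
    n = len(conversations)
    contained = sum(1 for c in conversations
                    if c["resolved"] and not c["escalated"])
    escalated = sum(1 for c in conversations if c["escalated"])
    total_shift = sum(c["sentiment_after"] - c["sentiment_before"]
                      for c in conversations)
    return {
        "containment_rate_pct": 100 * contained / n,
        "escalation_rate_pct": 100 * escalated / n,
        "avg_handle_time_s": sum(c["handle_time_s"] for c in conversations) / n,
        "avg_sentiment_shift": total_shift / n,
    }


def conversation(resolved, escalated, handle_time_s, before, after):
    """Build one hypothetical conversation record."""
    return {
        "resolved": resolved,
        "escalated": escalated,
        "handle_time_s": handle_time_s,
        "sentiment_before": before,
        "sentiment_after": after,
    }


# Hypothetical sample of five AI-assisted conversations
conversations = [
    conversation(True, False, 120, -0.5, 0.5),
    conversation(True, False, 90, 0.0, 0.5),
    conversation(True, True, 300, -0.5, 0.0),
    conversation(False, True, 240, -1.0, -0.5),
    conversation(True, False, 150, 0.0, 0.5),
]

report = support_metrics(conversations)
print(report)
```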


AI metrics vs AI KPIs: What’s the difference?

AI metrics and AI KPIs are both essential to effective AI governance and operations, but they evaluate AI performance and impact at different levels.

AI metrics track the granular technical performance of AI systems day to day (e.g., model accuracy) to show how well AI functions.

AI KPIs (Key Performance Indicators) measure whether AI systems are driving business outcomes, such as revenue growth, customer satisfaction, or cost savings.

In short, AI KPIs measure business impact, while AI metrics measure machine performance. Together, they provide a full picture of how granular aspects of AI system performance are directly tied to business outcomes.

AI KPIs: How to show measurable ROI from AI

AI KPIs are defined by leadership to align with the organization’s overarching strategic objectives. Data scientists and analysts on AI teams then define, monitor, and report on the metrics that show how AI performance aligns with these broader objectives.

Here are some of the most critical AI KPIs:

Return on AI investment (ROAI): Measures the financial return produced from AI projects compared to their costs, showing AI is profitable and justifies continued investment. According to the Wharton School’s 2025 Report, Accountable Acceleration: Gen AI Fast-Tracks Into the Enterprise, 75% of enterprises are seeing positive ROI from AI.

Revenue growth from AI: Tracks the increase in sales directly linked to AI-driven initiatives, showing AI’s contribution to top-line expansion. For example, Amazon’s AI recommendation algorithm is now reportedly responsible for 35% of total sales.

Cost reduction by AI automation: Measures the total operating expense saved by AI-driven process automation and optimization. Shows AI’s impact on improving productivity and reducing overhead. For instance, Amazon’s AI robotics for its warehouses is estimated to save $4 billion annually by reducing human labor.

Revenue per AI interaction: Shows the average sales, upsell, or cross-sell value produced per AI-assisted interaction, showing AI’s ability to turn engagement into revenue across the AI customer journey. Reports show AI recommendation agents can increase conversion rates from 15% to 30% on average.

Time to value (TTV): Tracks the time it takes for an AI project to deliver measurable business value post-deployment. Low TTV reflects agile innovation and alignment in execution. Reports show that AI minimum viable products typically deliver ROI in three to six months, while custom solutions are in the 12-24 month range.
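
The arithmetic behind these KPIs is straightforward, as the sketch below shows. The figures are invented for illustration, and months to payback is used here as one possible proxy for time to value. How benefits and revenue are attributed to AI is the hard part in practice and is not addressed by this code.

```python
import math


def roai_pct(total_benefit, total_cost):
    """Return on AI investment: net benefit as a percentage of cost."""
    return 100 * (total_benefit - total_cost) / total_cost


def revenue_per_interaction(attributed_revenue, ai_interactions):
    """Average revenue attributed to each AI-assisted interaction."""
    return attributed_revenue / ai_interactions


def months_to_payback(upfront_cost, monthly_net_benefit):
    """Whole months until cumulative net benefit covers the upfront cost."""
    return math.ceil(upfront_cost / monthly_net_benefit)


# Hypothetical first-year figures for an AI support deployment
roai = roai_pct(total_benefit=180_000, total_cost=120_000)
per_interaction = revenue_per_interaction(attributed_revenue=45_000,
                                          ai_interactions=15_000)
payback = months_to_payback(upfront_cost=120_000, monthly_net_benefit=15_000)

print(f"ROAI: {roai:.0f}%")
print(f"Revenue per AI interaction: ${per_interaction:.2f}")
print(f"Months to payback: {payback}")
```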


Best practices for getting started with AI metrics

AI metrics turn AI from a black box into a business lever. By measuring what matters, leaders and teams can understand exactly what’s driving ROI, where to optimize next, and how to manage each project so it delivers real value.

Here are a few best practices to get you started:

Launch with imperfect metrics. Don’t wait for perfect data to start measuring AI impact. Embed AI observability mechanisms early in the lifecycle, track what you have, establish baselines—then refine metrics as the project evolves and generates more data.

Create a phased roadmap. Define your business objectives for AI, then map onto your roadmap the metrics aligned with those goals. Be sure to implement a qualitative and quantitative feedback loop that links technical performance to business outcomes.

Define roles and ownership. Executives, product managers, and data scientists all need their own AI metrics. Enterprise-grade AI platforms can help here, as they provide no-code, customizable dashboards and reporting for the AI metrics relevant to each stakeholder.

Track employee-related AI metrics. Even the best AI can fall short without employee uptake. Track metrics like adoption rate, frequency of use, and satisfaction to see usage rates, spot where resistance remains, and identify champions. Pair quantitative insights with direct employee feedback to guide retraining and upskilling, celebrate AI wins, and turn skepticism into enthusiasm.

Turning AI metrics into measurable ROI with Sendbird

Whatever AI metrics align with your role, performance means little without control, responsibility, and security in AI operations. That’s why the Sendbird AI customer experience platform is built with Trust OS, our enterprise-grade AI governance framework.

With built-in features for AI observability, real-time monitoring, and analytics in a single, unified custom AI dashboard, Trust OS enables organizations to track and manage AI metrics in real time with ease and confidence, ensuring innovation never comes at the cost of velocity, compliance, or customer trust.

To learn more, contact sales.