AI governance frameworks: A leader’s guide to navigating adoption with minimal risk

With pressure mounting on enterprise leaders and teams to prove ROI from AI, the need for formal AI governance has never been greater. The rise of agentic AI, which is capable of acting autonomously on behalf of users, underscores the need to develop AI in ways that are safe, secure, and compliant at scale.

This is where an AI governance framework comes in. It’s a structured system that guides AI development and mitigates the myriad risks, security threats, and ethical issues associated with AI use while preserving user and stakeholder trust.

To help simplify this process, Sendbird built our own AI governance framework, Trust OS. Designed by our AI experts and engineers, Trust OS is a unified governance layer that brings enterprise-grade oversight and control to our AI agent platform, enabling everyone involved to easily monitor and adjust AI concierges at a granular level.

In this guide, you’ll learn what AI governance frameworks are, why they matter, and how to use one effectively, along with the tools and templates you need to turn AI policy into practice in 2026.

What is AI governance?

AI governance refers to the processes, standards, and guardrails that ensure artificial intelligence (AI) systems are developed and maintained in a way that’s ethical, safe, and transparent.

A governance framework defines how organizations manage AI across the lifecycle, from AI development to testing to production. Enterprise AI involves a variety of stakeholders—developers, ethicists, policymakers, and users—and governance frameworks help to ensure AI is designed and used in accordance with the requirements of each respective group.

Beyond guiding development, effective AI governance mitigates the inherent flaws and risks of AI, a technology built from complex code and the predictive capabilities of machine learning (ML) algorithms. Strong oversight is vital to aligning AI’s behavior and outcomes with business needs, ethical standards, and societal expectations. It also protects against various AI-related risks, including cybersecurity threats like data breaches, model bias and drift, and regulatory noncompliance.

Why is an AI governance framework important?

An AI governance framework is a key strategic resource for achieving compliance, trust, and operational efficiency when developing AI technologies. Past failures, from biased financial models to chatbots agreeing to sell new cars for $1, illustrate what can happen when governance is flimsy or overlooked. In fact, a 2025 MIT report found that about 95% of generative AI pilots fail to produce meaningful returns, in some cases because of a lack of oversight or poor alignment.

Governance frameworks are critical because they enable human oversight and control of AI systems, which can operate as “black boxes,” making it difficult to understand, explain, correct, and account for their decisions. AI influences a growing range of real-world outcomes, so understanding how it makes decisions is crucial for enabling responsible AI governance that aligns with organizational and regulatory standards. This operational visibility is the foundation of ethical AI: being able to show, not just say, that your AI behaves responsibly, so you can scale AI without unnecessary risk.

In addition to addressing long-term concerns, governance frameworks also help protect against the immediate risks associated with AI use. For example, they can mitigate biased outputs, data misuse, or privacy breaches as part of AI risk management. AI models can also drift, leading to changes over time in their output quality and reliability. Modern governance also extends into social responsibility, upholding ethical practices to protect users from harm and organizations from reputational damage.

An effective governance framework provides the structure and visibility needed to balance technological progress with safety and human dignity, at both the policy and technology levels. Beyond outlining an organization’s approach in theory, it embeds oversight and control mechanisms directly into enterprise workflows. This enables AI transparency, explainability, and accountability for all parties concerned, without stifling innovation or slowing development.

Advantages of AI governance frameworks

AI governance frameworks are a scalable way for the enterprise to safely operationalize AI and unlock its full value, as they establish clear principles for cross-functional teams to innovate and operate around.

Gartner's research on AI trust, risk, and security management (AI TRiSM) predicts that by 2026, enterprises that implement AI TRiSM controls will see significant improvements in their AI outcomes and a reduction in faulty data.

Other key business benefits include:

| Governance framework benefit | Operational outcome |
|---|---|
| Reduces immediate risks | Prevents bias, data misuse, and privacy violations through continuous monitoring |
| Ensures regulatory compliance | Aligns AI with global laws and regulations such as GDPR or the EU AI Act |
| Provides transparency | Makes AI decisions explainable and auditable across the lifecycle |
| Improves system reliability | Maintains consistent performance by detecting and diagnosing issues and retraining models when needed |
| Builds trust | Demonstrates responsible AI use to customers, regulators, and stakeholders |
| Promotes fairness and ethics | Ensures AI reflects organizational and societal values to mitigate bias and harm |
| Drives operational efficiency | Standardizes reviews, documentation, and versioning for faster AI deployment |
| Future-proofs AI strategy | Enables agility with evolving technologies by establishing a stable, secure foundation |

8 key principles of an AI governance framework in 2026

As governance frameworks have been developed by various entities and governments to help ensure the responsible use of AI technologies, a handful of themes have emerged. By operationalizing these principles, AI leaders can transform their theoretical policies into measurable AI oversight and control that upholds the organization's ethics, compliance, and reputation. These principles include:

1. Human oversight and accountability: Clearly define roles and responsibilities throughout the AI lifecycle to ensure meaningful human oversight so organizations remain aware of and accountable for AI-driven outcomes.

2. Transparency and explainability: Design AI systems so their decisions, logic, and data use can be understood, traced, and explained to users, auditors, and regulators when required.

3. Fairness: Implement safeguards to detect, prevent, and mitigate bias in AI systems to ensure fair and equitable treatment across all user groups.

4. Risk management: Proactively identify, assess, and address potential risks (e.g., bias, security vulnerabilities, safety failures) before they impact users or operations.

5. Data governance and security: Protect the quality, confidentiality, and integrity of data used to train and operate AI systems, while ensuring responsible handling of personal or sensitive user information.

6. Ethics and safety: Ensure AI systems are developed and deployed in accordance with ethical principles, respecting human rights, dignity, and societal well-being.

7. Regulatory and policy compliance: Align AI practices with applicable laws, industry standards, and regulatory frameworks to ensure lawful and responsible use.

8. Continuous monitoring and improvement: Establish mechanisms for ongoing AI evaluation, performance tracking, and iterative updates to ensure AI systems remain reliable, safe, accurate, and aligned with governance objectives over time.
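Principles like fairness and continuous monitoring become enforceable once they are expressed as checks. As a minimal sketch, the Python below computes one common bias metric, the demographic parity difference (the gap in positive-outcome rates between user groups). The metric choice, the 0.1 review threshold, and the sample data are illustrative assumptions, not requirements of any particular framework.

```python
from collections import defaultdict


def selection_rates(outcomes, groups):
    """Return the share of positive outcomes (1s) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(outcomes, groups):
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(outcomes, groups)
    return max(rates.values()) - min(rates.values())


# Illustrative review threshold; set this from your own fairness policy.
PARITY_THRESHOLD = 0.1

if __name__ == "__main__":
    approved = [1, 0, 1, 1, 0, 1, 0, 0]  # 1 = request approved by the model
    group = ["a", "a", "a", "a", "b", "b", "b", "b"]
    gap = demographic_parity_difference(approved, group)
    print(f"parity gap: {gap:.2f}")
    if gap > PARITY_THRESHOLD:
        print("flag model for fairness review")
```

With the sample data, group a is approved 75% of the time and group b 25%, so the gap is 0.50 and the model is flagged. Demographic parity is only one lens on fairness; which metric fits depends on the use case.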

How to choose an AI governance framework

Currently, there is no single, universal AI governance framework. Instead, enterprises are drawing from a range of established and evolving frameworks developed by governments, standards bodies, and industry groups—then adapting them to fit their specific needs.

Each framework emphasizes different priorities. For example, the NIST AI Risk Management Framework centers on managing risk to build trustworthy AI, including security and resilience, while the UNESCO Recommendation on the Ethics of AI is grounded in human rights and gives particular attention to areas such as environmental sustainability and gender equality.

This multi-framework environment underscores the need for formal AI governance that extends beyond ad hoc audits or reactive compliance. Effective policy must be paired with adaptable tools and infrastructure that integrate multiple standards, operationalize governance across the organization, and adapt as the regulatory landscape continues to evolve.

Top AI governance frameworks for 2026

Today’s regulatory landscape includes a mix of global, national, and industry-led AI governance frameworks. Each offers a distinct lens—risk management, ethics, transparency, or accountability—but all share the goal of enabling safe and trustworthy AI. Here’s a quick overview:

| Framework | Focus | Governance implications |
|---|---|---|
| EU AI Act | Legally binding, risk-based regulation that categorizes AI uses as unacceptable, high, limited, or minimal risk. | Requires documentation, transparency, and strict controls for high-risk systems. Noncompliance can incur major fines. |
| UK Pro-Innovation AI Framework | Non-statutory, principle-based guidance emphasizing fairness, transparency, accountability, safety, and contestability. | Offers flexible alignment without heavy compliance burdens; supports agile, context-driven governance. |
| U.S. Executive Orders on AI (EO 14110 & 14179) | Federal initiatives on AI safety, security, and U.S. leadership. EO 14110 (2023) directed safe, secure, and trustworthy AI; it was revoked in January 2025, and EO 14179 shifted the focus to removing barriers to U.S. AI leadership. | Guides public-sector oversight of AI in civil rights, national security, and public services; promotes innovation with accountability. |
| NIST AI Risk Management Framework (AI RMF) | Voluntary, risk-based framework structured around Govern, Map, Measure, and Manage. | Provides adaptable standards for trustworthy AI to support AI security and risk management best practices. |
| Blueprint for an AI Bill of Rights (U.S.) | Non-binding blueprint outlining fairness, privacy, and human oversight principles. | Establishes ethical guardrails that informed later U.S. regulation; promotes transparency and user protection. |
| U.S. State Regulations (e.g., Colorado AI Act) | Local laws enforcing accountability and transparency for high-risk AI. | Mandate bias audits, explainability, and impact assessments; drive enterprise focus on fair AI use. |
| OECD AI Principles | Non-binding international guidelines for human-centric, transparent, and accountable AI. | Promote global policy alignment and interoperability across jurisdictions. |
| UNESCO Recommendation on the Ethics of AI | Global ethical standard promoting inclusion, sustainability, and human rights. | Encourages socially responsible and sustainable AI development. |
| G7 Code of Conduct for Advanced AI (2023) | Voluntary standards for safe, responsible development of foundation and generative AI. | Supports cross-border cooperation and transparency in model development. |
| Singapore Generative AI Framework (2024) | Governance framework for private-sector use of generative AI. | Emphasizes risk-tiering, model audits, and cross-functional governance practices. |
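To turn a risk-based regulation like the EU AI Act into an intake check, teams often map each risk tier to a checklist of controls. The sketch below uses the four tiers named in the table; the control names in `REQUIRED_CONTROLS` are a simplified, hypothetical summary rather than the Act's legal text, and the tier itself is assumed to have been assigned by your legal and compliance review.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, simplified control checklist per tier (not the Act's legal text).
REQUIRED_CONTROLS = {
    RiskTier.UNACCEPTABLE: ["block deployment"],
    RiskTier.HIGH: [
        "technical documentation",
        "risk management process",
        "human oversight",
        "event logging",
        "transparency to users",
    ],
    RiskTier.LIMITED: ["disclose AI interaction to users"],
    RiskTier.MINIMAL: [],
}


def missing_controls(tier, implemented):
    """List required controls for a tier that are not yet implemented."""
    return [control for control in REQUIRED_CONTROLS[tier] if control not in implemented]


if __name__ == "__main__":
    # Assumed example: a customer-service chatbot triaged by legal review as limited risk.
    gaps = missing_controls(RiskTier.LIMITED, implemented={"event logging"})
    print(gaps)
```

Keeping the checklist as data rather than scattered `if` statements makes it easy for legal and compliance teams to review and update as guidance on each tier evolves.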

Who’s in charge of AI governance?

AI governance is a shared responsibility across the organization. Collaboration between technical teams, domain experts, legal, and leadership is essential to balance innovation with accountability. Each function plays a role in ensuring that AI systems are developed, deployed, and maintained responsibly, meeting both regulatory and operational requirements laid out in the framework.

Executive leadership sets the organizational culture and treats AI accountability as a strategic priority.

Legal and compliance teams interpret and enforce regulatory frameworks, ensuring alignment with data-protection and risk-management standards.

Audit and risk functions validate that models perform as intended without introducing bias or operational risk.

Finance leaders (CFOs) monitor cost–benefit trade-offs, investment efficiency, and exposure from AI deployments.

AI ethics councils or boards establish and oversee ethical guidelines, helping to anticipate and mitigate emerging risks.

Operational teams use AI monitoring tools to ensure responsible day-to-day AI use and adherence to governance policies across projects.

Pro tip: Look for AI tools that give each stakeholder an accessible view of AI accountability through shared, user-friendly dashboards with role-based access controls. This visibility supports the “three lines of defense” model, in which the teams that build and operate AI (first line), risk and compliance functions (second line), and internal audit (third line) each help keep governance accountable and effective under the oversight of executive leadership.
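Role-based access for a shared dashboard can be as simple as a deny-by-default mapping from roles to views. The sketch below assumes hypothetical role and view names (`ml_engineer`, `audit_log`, and so on); real platforms manage this through their own access-control settings, but the principle is the same: each stakeholder group sees the views its role requires and nothing more.

```python
# Hypothetical mapping of stakeholder roles to dashboard views.
ROLE_VIEWS = {
    "ml_engineer": {"model_metrics", "drift_alerts"},
    "risk_manager": {"model_metrics", "drift_alerts", "risk_register"},
    "internal_audit": {"model_metrics", "risk_register", "audit_log"},
    "executive": {"summary_scorecard", "risk_register"},
}


def can_view(role: str, view: str) -> bool:
    """Deny by default: unknown roles or unlisted views get no access."""
    return view in ROLE_VIEWS.get(role, set())


def visible_views(role: str) -> list[str]:
    """Views to render on this role's dashboard, in a stable order."""
    return sorted(ROLE_VIEWS.get(role, set()))


if __name__ == "__main__":
    for role in ROLE_VIEWS:
        print(role, visible_views(role))
```

Denying by default means a newly added role or view stays hidden until someone deliberately grants access, which keeps permission changes reviewable.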

How to enact an AI governance framework

The true value of AI governance frameworks lies in effective AI implementation. Success depends on collaboration across teams, from data science to legal to compliance. It also requires embedding oversight and accountability functions early in the AI lifecycle, not “bolting on” compliance post-deployment.

However, according to a 2024 Economist Impact survey of 1,100 technology leaders, 40% say their AI governance programs fall short of ensuring organizational safety and compliance. Part of the challenge is the absence of universal regulation, which leaves enterprises to define their own AI governance. Each organization has its own risk tolerance, infrastructure, and AI maturity level, so AI governance isn’t one-size-fits-all: organizations must create or adopt a framework that aligns AI development with their specific requirements. Complicating things further, existing frameworks such as the NIST AI RMF often lack clear implementation guidance for the principles they provide.

To help bridge this gap, the roadmap below outlines how to create and launch an AI governance program for your organization.

Building an AI governance process in 10 steps

1. Appoint an AI governance lead

The first step is to designate a single owner to coordinate cross-functional AI collaboration. In smaller organizations, this can be a shared role across compliance, engineering, or data teams. This lead will manage the roadmap, ensuring task accountability, overseeing deliverables, and tracking governance metrics to validate trust and performance.

2. Choose a reference governance framework

As a reference point, select an existing framework that’s most aligned with your industry, region, and operational model. For example, the AIGA (Artificial Intelligence Governance and Auditing) framework provides actionable guidance with EU AI Act compliance integrated where applicable, while the NIST AI RMF offers a flexible, risk-based structure adaptable across sectors.

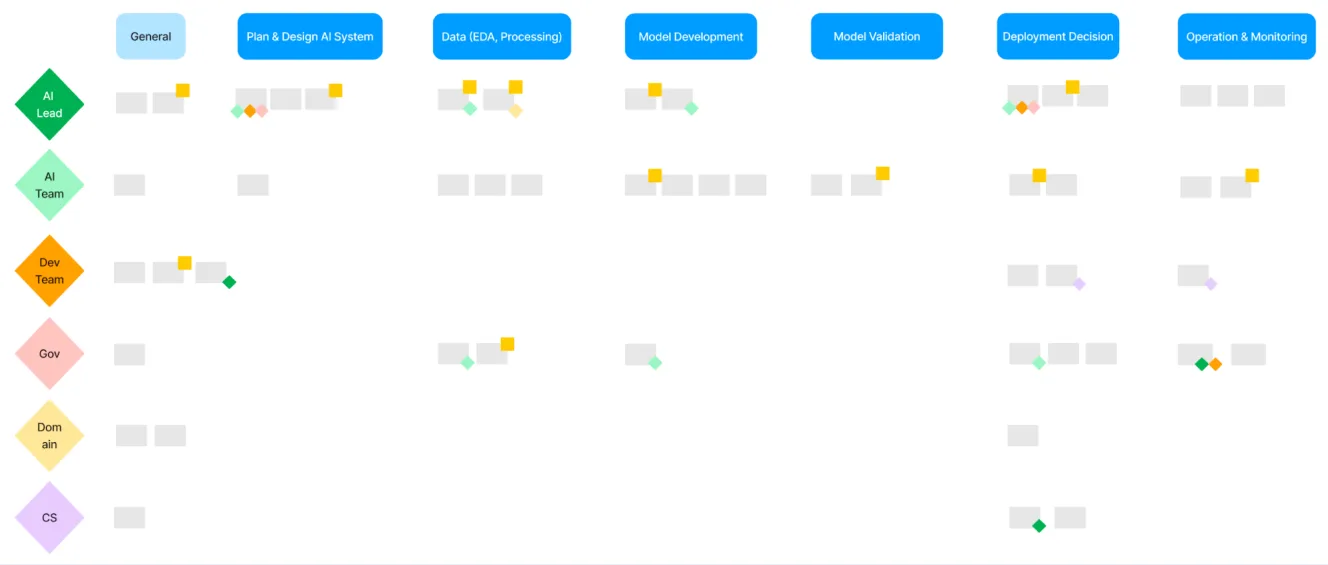

3. Map the AI lifecycle and stakeholders

Plot your AI lifecycle from design to retirement along a horizontal axis, then list all relevant stakeholders on the vertical axis. Creating this matrix helps to identify ownership, dependencies, and accountability for each governance task in a clear visual way.

4. Define and allocate tasks

Next, assign governance responsibilities across each lifecycle stage. For example:

Data governance → data owners and compliance leads

Bias monitoring → ML engineers

Audit readiness → risk and legal teams
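Steps 3 and 4 can be captured in a simple data structure. The Python sketch below stores (task, lifecycle stage) → owner assignments, renders them as a stakeholder-by-stage grid, and flags stages that have no accountable owner yet. The stage names between design and retirement, and all of the assignments, are illustrative assumptions.

```python
# Lifecycle stages on the horizontal axis (intermediate stages are assumptions).
STAGES = ["design", "development", "testing", "deployment", "monitoring", "retirement"]

# Hypothetical assignments: (governance task, lifecycle stage) -> accountable owner.
ASSIGNMENTS = {
    ("data governance", "design"): "data owner",
    ("data governance", "development"): "compliance lead",
    ("bias monitoring", "testing"): "ML engineer",
    ("bias monitoring", "monitoring"): "ML engineer",
    ("audit readiness", "deployment"): "risk & legal",
}


def build_matrix(assignments, stages):
    """Stakeholder rows x stage columns; each cell lists that owner's tasks."""
    grid = {}
    for (task, stage), owner in assignments.items():
        row = grid.setdefault(owner, {s: [] for s in stages})
        row[stage].append(task)
    return grid


def unowned_stages(assignments, stages):
    """Lifecycle stages where no governance task has an owner yet."""
    covered = {stage for (_task, stage) in assignments}
    return [s for s in stages if s not in covered]


if __name__ == "__main__":
    for owner, row in build_matrix(ASSIGNMENTS, STAGES).items():
        cells = [", ".join(row[s]) or "-" for s in STAGES]
        print(f"{owner:<16}| " + " | ".join(cells))
    print("stages without an owner:", unowned_stages(ASSIGNMENTS, STAGES))
```

Running it flags `retirement` as a stage with no owner, which is exactly the kind of gap the matrix is meant to surface before an audit does.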

5. Operationalize tools and documentation

Create the necessary documentation—such as project charters, risk registers, validation reports—and centralize them in a secure, accessible repository to support learning, collaboration, and innovation.
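A risk register is easier to keep current when every entry has a consistent shape. This sketch assumes a common 1-to-5 likelihood and impact scale with score = likelihood × impact; the scale, the rating cut-offs, and the example risks are hypothetical and should be replaced with your organization's own risk methodology.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    risk_id: str
    description: str
    owner: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int  # assumed scale: 1 (negligible) to 5 (severe)
    mitigation: str = ""

    def __post_init__(self):
        # Reject values outside the assumed 1-5 scale.
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

    @property
    def rating(self) -> str:
        # Hypothetical cut-offs; align these with your risk methodology.
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"


def top_risks(register, limit=3):
    """Highest-scoring risks first, for review agendas and escalation."""
    return sorted(register, key=lambda risk: risk.score, reverse=True)[:limit]


if __name__ == "__main__":
    register = [
        Risk("R1", "Agent states incorrect refund policy", "support ops", 4, 4, "ground answers in policy KB"),
        Risk("R2", "Personal data written to model logs", "data owner", 2, 5, "redact logs"),
        Risk("R3", "Input drift after product launch", "ML engineer", 3, 2, "monthly drift review"),
    ]
    for risk in top_risks(register, limit=2):
        print(risk.risk_id, risk.score, risk.rating)
```

Validating scores at creation time keeps the register comparable across teams, so sorting by score gives a meaningful agenda for the next review.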

Pro tip: Most frameworks do not prescribe specific toolsets. Pair your documentation with explainability tools (e.g., SHAP, LIME) and monitoring software integrated into your MLOps pipeline.

6. Automate oversight where possible

Implement systems for AI risk management that monitor your various AI technologies for performance, safety, and compliance in real time.

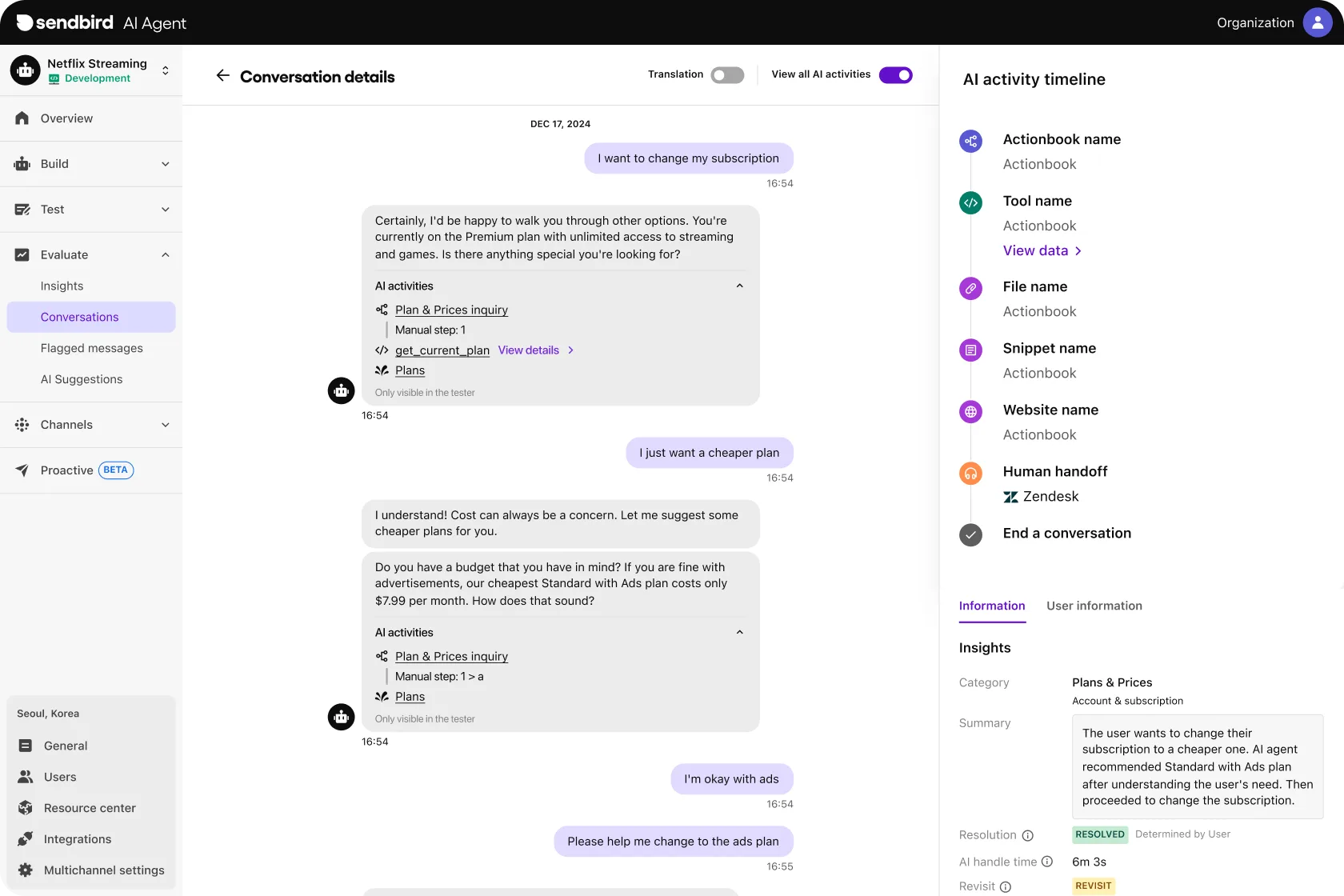

Sendbird’s AI agent builder, for instance, provides observability features for real-time AI agent monitoring with API support and webhooks, enabling instant detection of hallucinations and policy violations with human-in-the-loop oversight.
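Platform specifics aside, the core human-in-the-loop pattern is simple: check each agent response against policy before it is sent, and hold anything that fails for human review. The sketch below is a generic, rule-based illustration, not Sendbird's API; the rule names, regular expressions, and `ReviewQueue` are hypothetical. Pattern matching like this catches only explicit policy violations; detecting hallucinations generally requires additional checks, such as comparing answers against approved knowledge sources.

```python
import re
from dataclasses import dataclass, field

# Hypothetical policy rules: rule name -> pattern that signals a violation.
POLICY_RULES = {
    "unauthorized_discount": re.compile(r"\b(?:100|[5-9]\d)% off\b", re.IGNORECASE),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


@dataclass
class ReviewQueue:
    """Stand-in for wherever held responses wait for a human reviewer."""
    items: list = field(default_factory=list)

    def escalate(self, conversation_id: str, response: str, violations: list) -> None:
        self.items.append(
            {"conversation_id": conversation_id, "response": response, "violations": violations}
        )


def route_response(conversation_id: str, response: str, queue: ReviewQueue) -> str:
    """Send compliant responses; hold anything that matches a policy rule."""
    violations = [name for name, pattern in POLICY_RULES.items() if pattern.search(response)]
    if violations:
        queue.escalate(conversation_id, response, violations)
        return "held_for_human_review"
    return "sent"


if __name__ == "__main__":
    queue = ReviewQueue()
    print(route_response("c-1", "Your order ships tomorrow.", queue))
    print(route_response("c-2", "Great news: 90% off if you buy today!", queue))
    print(queue.items)
```

Holding a response rather than silently dropping it gives reviewers the full context and creates a record that can feed back into the policy rules.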

7. Schedule regular health check-ups

Establish a cadence for reviewing AI metrics such as model accuracy, fairness, and data governance. Continuous assessment helps ensure that AI systems remain compliant and aligned with organizational ethical standards as conditions evolve.
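One way to make health check-ups repeatable is to compute the same metrics on a schedule and compare them with agreed thresholds. This sketch combines an accuracy-regression check with the Population Stability Index (PSI), a widely used measure of drift between a baseline and a current binned distribution. The 0.05 accuracy-drop and 0.25 PSI thresholds are common rules of thumb used here as assumptions, not values from any framework.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between two histograms with the same bins."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both distributions must use the same bins")
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    value = 0.0
    for e, a in zip(expected_counts, actual_counts):
        # Floor each share at eps so empty bins don't cause log(0) or division by zero.
        e_share = max(e / expected_total, eps)
        a_share = max(a / actual_total, eps)
        value += (a_share - e_share) * math.log(a_share / e_share)
    return value


def health_check(accuracy, baseline_accuracy, drift_psi, max_accuracy_drop=0.05, max_psi=0.25):
    """Return a list of issues, or ["healthy"] if every check passes."""
    issues = []
    if baseline_accuracy - accuracy > max_accuracy_drop:
        issues.append("accuracy regression")
    if drift_psi > max_psi:
        issues.append("input drift")
    return issues or ["healthy"]


if __name__ == "__main__":
    baseline_bins = [25, 25, 25, 25]  # e.g., last quarter's request mix per category
    current_bins = [10, 10, 10, 70]  # this month's request mix
    drift = psi(baseline_bins, current_bins)
    print(round(drift, 3), health_check(0.91, 0.93, drift))
```

In the example, accuracy dips only slightly, but the request mix has shifted sharply, so the check reports input drift: a signal to investigate before accuracy visibly degrades.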

8. Centralize AI governance documentation

Store all governance materials—policies, checklists, and audit logs—in a centralized platform or knowledge base (e.g., internal wiki or secure cloud environment) to support traceability and access control.

9. Onboard and educate stakeholders

Ensure every team understands their unique governance responsibilities. Integrate these into day-to-day workflows through internal training and operational dashboards. Emphasize that clear communication and team-level ownership are vital to turning governance from a compliance checkbox to an innovation enabler.

10. Iterate, audit, and mature

Lastly, treat governance as a living process. Use insights from audits and AI performance reviews to continuously refine your governance practices, update documentation, and improve stakeholder collaboration.

Related resource: Consider an AI policy template from the Responsible AI Institute or other sources to help you get started.

Key AI governance capabilities for enterprises

Getting started on AI governance can feel overwhelming, given the range of existing and emerging frameworks. The following AI tool capabilities can help organizations to effectively implement their governance framework across existing workflows:

Visual dashboards: Aggregate system-health metrics and performance indicators for core AI models and technologies.

Health score metrics: Measure the reliability, fairness, and data integrity of AI models and technologies.

Custom AI metrics: Align governance monitoring with business-specific KPIs.

Audit trails: Maintain immutable, detailed records for compliance, error handling, and optimization.

Open source compatibility: Integrate with existing open source MLOps tools and ecosystems for added flexibility.

Seamless integration: Use AI integrations to embed governance workflows within existing enterprise systems and avoid silos.

Automated monitoring: Detect model bias, drift, or anomalies like hallucinations using continuous evaluation practices.

Performance alerts: Notify teams in real time across configured systems when AI deviates from expected parameters.
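Audit trails are often described as immutable; one lightweight way to make tampering detectable is to hash-chain entries so that each record commits to the one before it. The sketch below uses only Python's standard library (`hashlib`, `json`); the record fields are hypothetical. A hash chain detects edits but does not prevent them, and truncating the newest entries goes unnoticed unless the latest hash is also stored somewhere else, so write-once storage and access controls still matter.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64


def _entry_hash(record: dict, prev_hash: str) -> str:
    """SHA-256 over the canonical JSON of the record plus the previous hash."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log in which each entry commits to the one before it."""

    def __init__(self):
        self.entries = []

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        stored = dict(record)  # shallow copy so later caller changes don't alter the log
        entry_hash = _entry_hash(stored, prev_hash)
        self.entries.append({"record": stored, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; editing, reordering, or deleting an earlier entry breaks it."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev"] != prev_hash or _entry_hash(entry["record"], prev_hash) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append({"actor": "ml_engineer", "action": "deploy", "model_version": "v2"})
    log.append({"actor": "risk_manager", "action": "approve", "model_version": "v2"})
    print(log.verify())  # True
    log.entries[0]["record"]["model_version"] = "v3"  # simulate tampering
    print(log.verify())  # False
```

Serializing with `sort_keys=True` keeps the hash stable regardless of the order in which a record's fields were written.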

The future of responsible AI governance with Sendbird Trust OS

AI governance is a new pillar of enterprise operations, making these frameworks crucial to uniting AI policy, technology, and accountability in the real world. When it comes to scaling and managing AI safely, both leaders and teams benefit from AI tools that turn theoretical policy into enforceable technical controls.

Sendbird's own AI governance framework, Trust OS, does just this—helping organizations to build and scale enterprise-grade AI agents they can trust. Trust OS makes AI governance accessible, measurable, and scalable, helping stakeholders and operational teams chart a course to safe and effective adoption. It provides:

A unified governance layer with API and webhook support for real-time AI agent observability, control, and transparency.

A user-friendly dashboard complete with no-code agent management, knowledge management, and performance reporting.

14 built-in features for four key governance pillars: Observability, Control, Oversight, Scalability & Infrastructure. Includes audit logs, versioning, end-to-end testing, and more.

In short, Trust OS is how Sendbird’s AI agent platform makes it not just easy, but safe, to build, scale, and govern AI agents without sacrificing trust, safety, or compliance.

Contact sales or request a demo to learn more. Or, check out this video explaining Sendbird Trust OS.