Introducing AI agent activity trails for AI transparency

Why AI transparency matters for LLM agents

As AI agents take on more customer-facing tasks, one question is increasingly critical: How did the AI agent arrive at that response?

AI often operates like a “black box,” generating outputs without revealing its reasoning. As a result, product and support teams are left guessing about which knowledge sources, tools, or workflows shaped the agent’s actions.

This lack of visibility makes it difficult (or downright impossible) to test the AI agent, check its performance, troubleshoot issues, preserve user trust, and ensure regulatory compliance.

At Sendbird, we’re solving this challenge with a new feature: Activity trails.

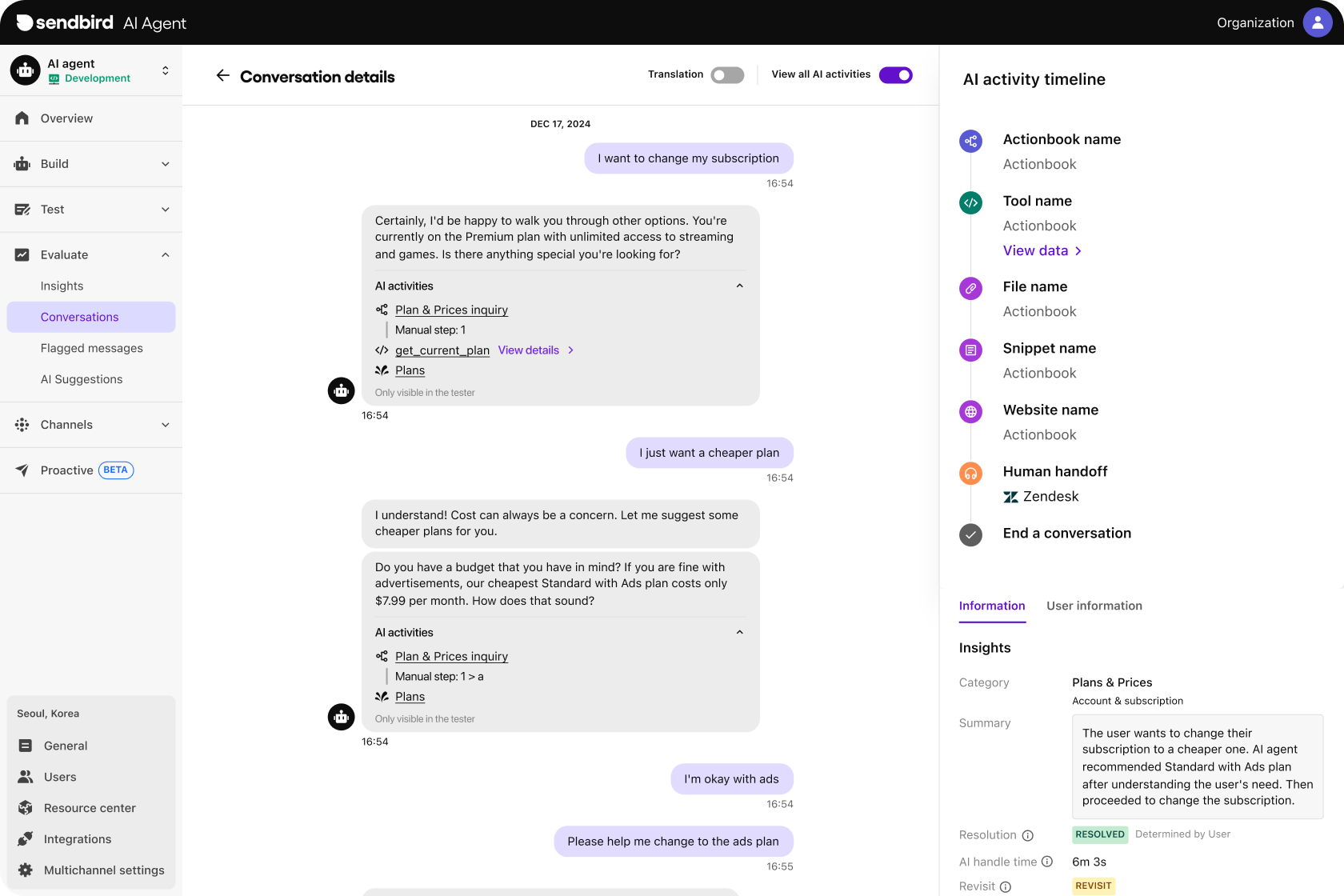

Activity trails make the inputs behind your AI concierge's behavior visible. Now you can trace your agent's decision process step by step, from its first welcome message to tool activations to human handoff.

With visibility into which inputs shaped each agent decision, teams can test, evaluate, and optimize agents to keep them reliable at scale, which builds trust and confidence in AI customer service.

What are Sendbird's AI agent activity trails?

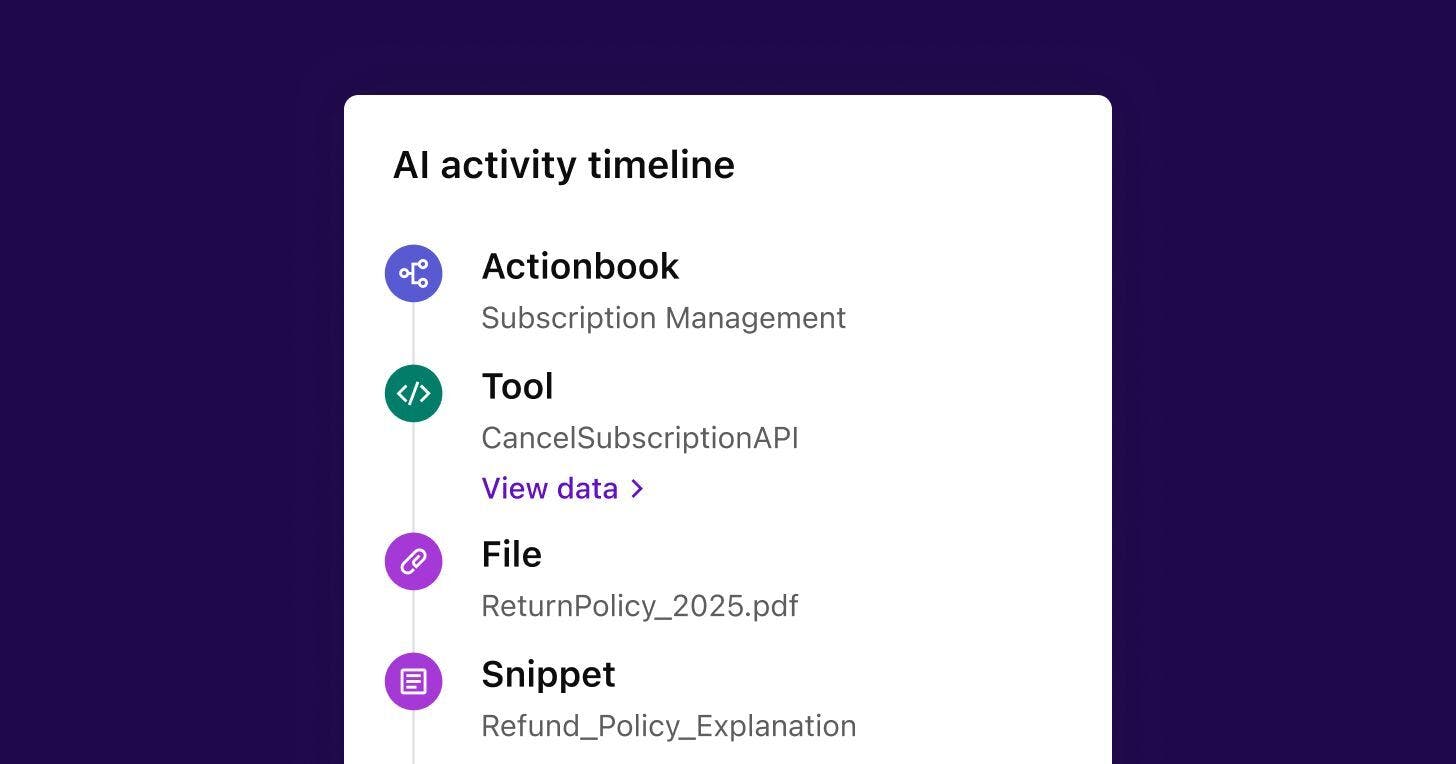

The Sendbird AI agent activity trails feature provides an auditable, step-by-step record of the data sources, tools, and other inputs that shape the responses generated by your AI agent.

For example, whether your agent pulled data from an integrated knowledge base like Confluence, Salesforce, or Sprinklr, used an AI agent actionbook, or responded based on location-specific user data, the activity trail shows you the exact input that influenced each specific output.

Activity trails account for the following data points:

| Data type | What it tells you |
| --- | --- |
| Default instructions | The goal, communication style, and guidelines set in the AI prompt |
| Knowledge base(s) consulted | Content sources consulted (e.g., Salesforce) |
| Actionbook(s) or tools used | Agentic AI workflows used in conversation, API requests/responses |
| User context and metadata | Locale, user ID, and any custom user attributes passed by the client |
| System messages | Welcome messages, feedback requests, CSAT triggers, invite human agent, etc. |

All AI agent activity data appears in a “timeline view” alongside each closed agent conversation within your AI agent dashboard. This gives non-technical teams instant visibility into the agent’s decision path, as well as any associated performance issues.

To be clear, activity trails don’t explain the underlying decision logic of the AI model (LLM) that powers the AI agent. Instead, they provide transparency into the inputs that influence a specific output from your AI concierge. This enables continuous human-in-the-loop (HITL) monitoring, AI agent testing, agent evaluation, and optimization without needing technical expertise.
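For teams that want to reason about this kind of record outside the dashboard, here is a minimal Python sketch of how a trail could be modeled as an ordered list of inputs. It is an illustration only, not Sendbird's data format or API: the names `InputKind`, `TrailEvent`, and `timeline`, and the sample events, are all assumptions made for this example.

```python
from dataclasses import dataclass
from enum import Enum


class InputKind(Enum):
    # Hypothetical labels mirroring the five data types listed above.
    DEFAULT_INSTRUCTIONS = "default_instructions"
    KNOWLEDGE_BASE = "knowledge_base"
    ACTIONBOOK_OR_TOOL = "actionbook_or_tool"
    USER_CONTEXT = "user_context"
    SYSTEM_MESSAGE = "system_message"


@dataclass(frozen=True)
class TrailEvent:
    # Hypothetical record: when the input was used, what kind it was,
    # and a short human-readable description.
    seconds_into_conversation: float
    kind: InputKind
    detail: str


def timeline(events):
    """Return one text line per event, ordered by time in the conversation."""
    ordered = sorted(events, key=lambda e: e.seconds_into_conversation)
    return [
        f"{e.seconds_into_conversation:6.1f}s  {e.kind.value}: {e.detail}"
        for e in ordered
    ]


# Made-up sample data, deliberately out of order.
events = [
    TrailEvent(4.2, InputKind.KNOWLEDGE_BASE, "Salesforce article consulted"),
    TrailEvent(0.0, InputKind.SYSTEM_MESSAGE, "Welcome message sent"),
    TrailEvent(9.8, InputKind.ACTIONBOOK_OR_TOOL, "Refund actionbook started"),
]

for line in timeline(events):
    print(line)
```

The point of the sketch is that each entry records an input, not the model's internal reasoning, which matches the scope described above.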

From AI agent observability to optimization

Activity trails show you exactly where the AI agent went off course, helping you to take precise action to prevent future issues, ensure compliance, and preserve user trust.

Using these targeted insights into AI agent behavior, you can:

Improve AI agent performance: Identify which instructions, actionbooks, or tools deliver better resolution outcomes as part of your AI agent evaluation process.

Validate accuracy: Trace every output back to the original data source or automated workflow that shaped it.

Build trust: Share the inputs behind each AI response with internal teams or compliance stakeholders to build their confidence.

Optimize faster: See if a hallucinated answer came from the wrong knowledge base, or if no source was used at all, to prevent future issues.

Additionally, to ensure safe, ethical outputs that minimize AI risks, every AI agent response is automatically flagged if it:

Contains a hallucination (inaccurate information)

Triggers an AI-powered safeguard (e.g., harmful content)

Violates your communication policies
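As a rough illustration of how a reviewer might act on these flags, the Python sketch below triages a flagged response and separates the two hallucination cases mentioned earlier (sources were consulted versus no source at all). Everything here is hypothetical: `Flag`, `AgentResponse`, and `triage` are names invented for this example and do not reflect Sendbird's implementation.

```python
from dataclasses import dataclass, field
from enum import Enum


class Flag(Enum):
    # Hypothetical labels for the three flag conditions listed above.
    HALLUCINATION = "hallucination"
    SAFEGUARD_TRIGGERED = "safeguard_triggered"
    POLICY_VIOLATION = "policy_violation"


@dataclass
class AgentResponse:
    # Hypothetical record of one agent reply and what the trail shows for it.
    text: str
    sources: list = field(default_factory=list)  # knowledge bases consulted
    flags: set = field(default_factory=set)      # Flag values raised


def triage(response):
    """Return a short note telling a reviewer where to look first."""
    if not response.flags:
        return "ok"
    if Flag.HALLUCINATION in response.flags:
        if not response.sources:
            return "hallucination: no source consulted"
        return "hallucination: check sources " + ", ".join(response.sources)
    return "review: " + ", ".join(sorted(f.value for f in response.flags))


# Made-up sample responses.
grounded = AgentResponse("Your order ships Friday.", sources=["Confluence"])
ungrounded = AgentResponse("Refunds take 90 days.", flags={Flag.HALLUCINATION})
off_policy = AgentResponse("Sure, whatever.", flags={Flag.POLICY_VIOLATION})

for r in (grounded, ungrounded, off_policy):
    print(triage(r))
```

Separating "no source consulted" from "check these sources" reflects the two fixes a team would make: adding missing knowledge versus correcting or removing a bad source.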

When trust matters, AI transparency matters

The success of AI for customer support hinges on trust among customers, stakeholders, and regulators. Trust comes from knowing how your AI works and having the control to make it safe, ethical, and reliable at scale.

This level of control leads to confidence in AI customer care—the confidence to launch without fear, scale without concern, and know your AI is working as intended—no black boxes, no surprises.

Ready to learn more? Activity trails are available on the Sendbird AI customer experience platform.

Contact sales or your CSM for details.