Why Model Context Protocol (MCP) is the new infrastructure for AI agents and the A2A economy

Everyone's talking about MCP (Model Context Protocol), from developer circles to social media to AI podcasts. But beyond the hype, most people still don’t understand what it is—or why it may quietly become the backbone of the emerging A2A (Agent-to-Agent) economy, where autonomous agents transact, negotiate, and operate across networks on behalf of humans and businesses.

Let’s get straight to the point.

What is Model Context Protocol (MCP)?

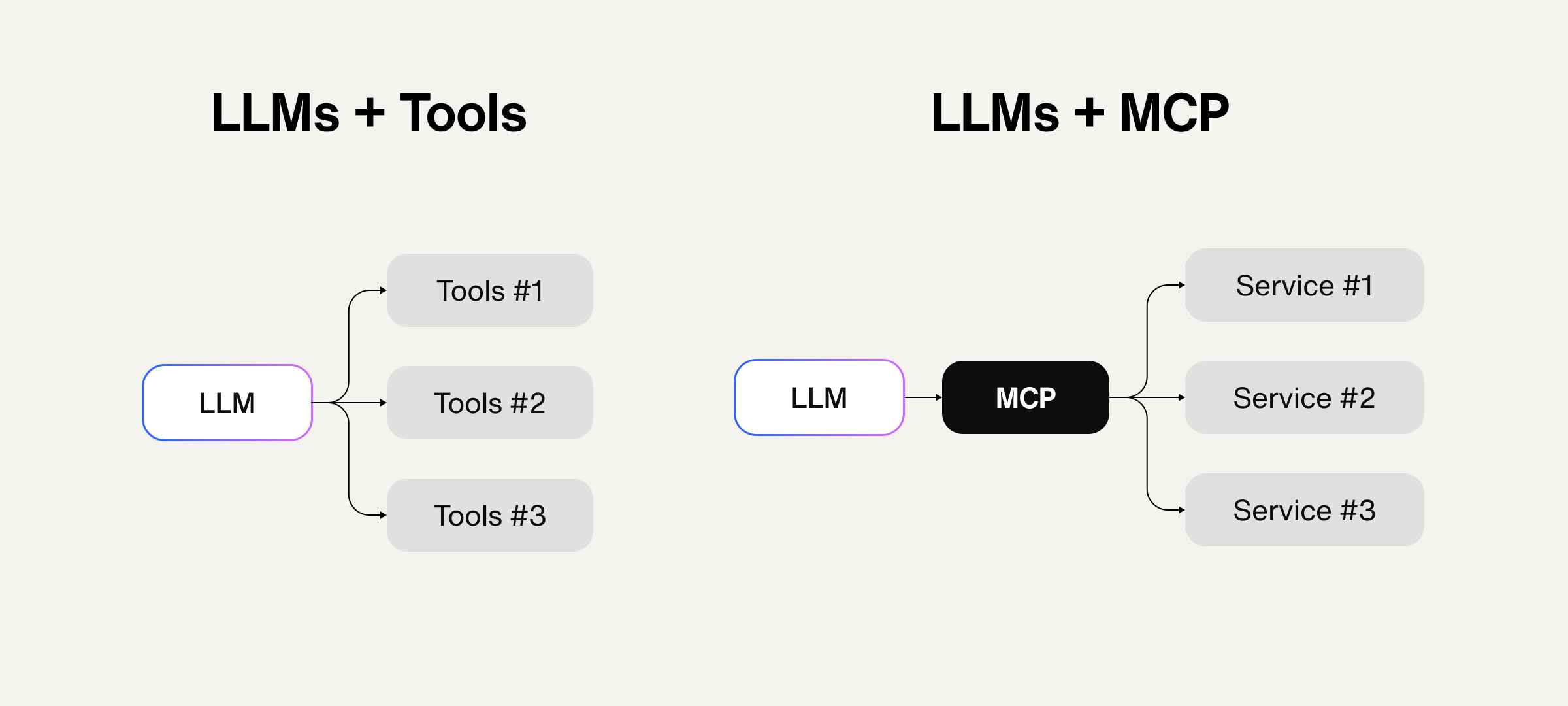

MCP (Model Context Protocol) is a proposed standard to solve the growing complexity and fragility of integrating tools with LLM-based agents.

In technical terms, it’s an open protocol that allows AI models to communicate with external data and tools by standardizing how applications provide context to large language models (LLMs).

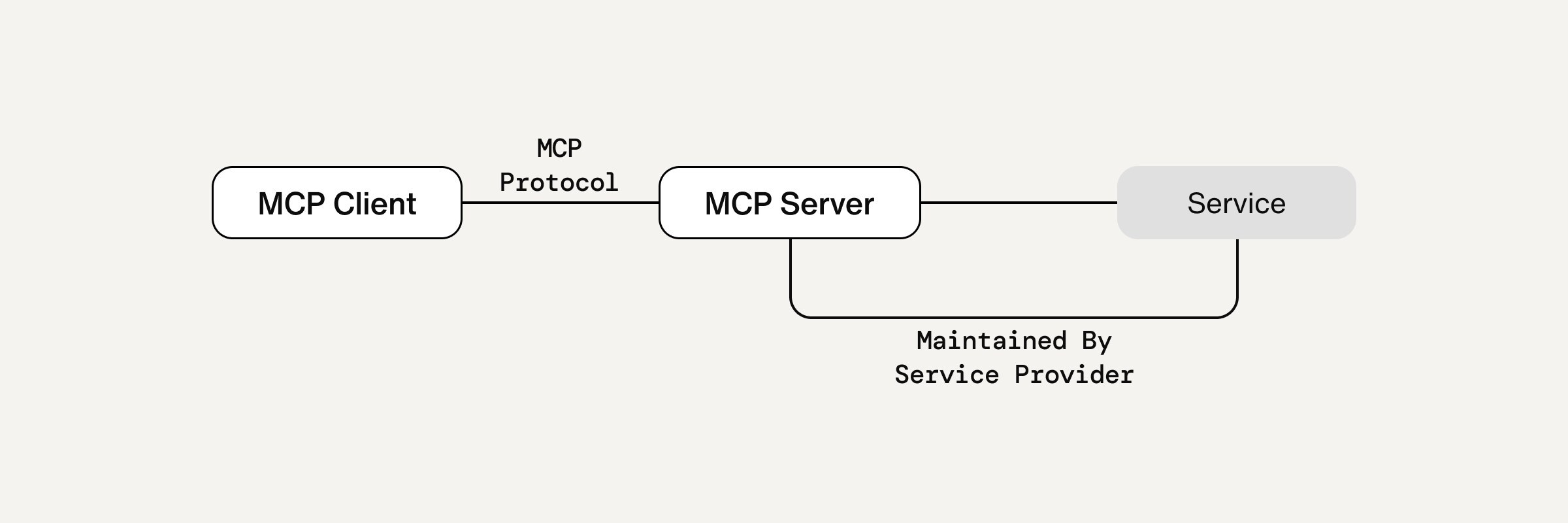

MCP defines a clean, consistent way for LLMs, and the AI agents built on them, to interact with external tools and services. Instead of developers writing custom logic for every new integration, tools expose themselves through an MCP server, which acts as a centralized gateway. Through this gateway, the LLM can discover a tool’s capabilities, get context, and trigger actions, all without bespoke integration code.
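To make the discover-then-trigger flow concrete, here is a minimal sketch of how a server might answer the two tool-related requests. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are the method names the protocol uses for discovery and invocation. Everything else here is an assumption for illustration: the `get_order_status` tool, the `TOOLS` registry, and the `handle_request` function are hypothetical, and the sketch leaves out the initialization handshake and the transport layer that a real server needs.

```python
import json

# Hypothetical tool registry (illustrative only): one tool with a JSON Schema
# describing its arguments and a handler that does the actual work.
TOOLS = {
    "get_order_status": {
        "description": "Look up the status of an order by ID.",
        "inputSchema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
        "handler": lambda args: f"Order {args['order_id']} has shipped.",
    }
}


def error(req_id, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle_request(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request the way a minimal MCP-style server might."""
    req = json.loads(raw)
    method, params = req["method"], req.get("params", {})

    if method == "tools/list":
        # Discovery: describe each tool without exposing its implementation.
        result = {"tools": [
            {"name": name,
             "description": tool["description"],
             "inputSchema": tool["inputSchema"]}
            for name, tool in TOOLS.items()
        ]}
    elif method == "tools/call":
        # Invocation: look the tool up by name and run it with the arguments.
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return error(req["id"], -32602, "Unknown tool")
        text = tool["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        return error(req["id"], -32601, "Method not found")

    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


# The agent side: first discover what is available, then call a tool by name.
listing = json.loads(handle_request(
    '{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}'))
call = json.loads(handle_request(json.dumps({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "get_order_status", "arguments": {"order_id": "A17"}},
})))
```

The point of the sketch is that the agent never needs to know how `get_order_status` is implemented. It reads the schema from the listing and sends arguments that match it.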

Think of MCP as a payment gateway in fintech. Just as a payment gateway connects a business to multiple payment processors—like Visa, Mastercard, or PayPal—through a unified interface, MCP connects AI agents to various tools and services through a standard protocol.


Why does Model Context Protocol (MCP) matter?

While LLMs are the foundation of current conversational AI applications, they’re only good at one thing: predicting text. They’re great at summarizing documents, writing stories, and answering questions. But on their own, they don’t actually do anything. LLMs don’t send emails, update databases, or interact with real systems because they aren’t inherently connected to any external tools, services, or workflows.

To make LLMs more useful, developers have started building AI agents—wrapping models with tools and APIs to trigger actions. Want an AI that can schedule meetings, pull in CRM data, or respond to Slack messages? That’s an AI concierge.

Here’s the problem: every tool you connect to your agent today is a one-off integration. Every service has its own API, its own data structure, and its own failure modes. You end up writing glue code—lots of it. And every time something changes, it breaks. This doesn’t scale. Not for startups. Not for enterprises.

As a result, connecting tools today is like trying to get systems to talk while they each speak a different language. Slack is in English, Twilio is in Spanish, and your database is in Japanese. You can brute-force your way through it, but it’s painful—and completely unsustainable at scale.


What does Model Context Protocol (MCP) enable?

MCP gives AI tools and agents a common interface layer. It brings structure, interoperability, predictability, and a path to real modularity in AI infrastructure. This is what allows ecosystems to form and makes agent infrastructure maintainable.

With MCP in place, we unlock a number of things:

Developers can build agents that work across services without rewriting integrations.

Enterprises can deploy agents that scale and interoperate without massive engineering overhead.

Toolmakers can expose capabilities once, in a standard format, for use by any AI agent platform.

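Imagine that every time you get an email, your agent logs it into a spreadsheet. It sounds trivial, but if your email provider changes its API or the spreadsheet integration fails, the whole workflow breaks. MCP contains this risk: the agent talks to a stable tool interface, so a change behind that interface is fixed once in the MCP server rather than in every agent. That keeps tools modular and the agents that use them resilient.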
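The email-to-spreadsheet example can be sketched in a few lines to show where the resilience comes from. This is a simplified illustration rather than real MCP client code: `ToolClient`, `log_email`, the `append_row` tool name, and both backends are hypothetical names, and tool calls are plain in-process function calls instead of protocol messages.

```python
from typing import Callable, Dict, List

ToolFn = Callable[[dict], dict]


class ToolClient:
    """Stand-in for an MCP client: the agent sees only tool names and arguments."""

    def __init__(self, tools: Dict[str, ToolFn]) -> None:
        self._tools = tools

    def call(self, name: str, arguments: dict) -> dict:
        if name not in self._tools:
            raise KeyError(f"tool not available: {name}")
        return self._tools[name](arguments)


def log_email(client: ToolClient, email: dict) -> dict:
    """Agent-side workflow. It never touches a vendor API directly."""
    return client.call("append_row", {"values": [email["from"], email["subject"]]})


def make_list_backend(rows: List[list]) -> ToolFn:
    """First (hypothetical) spreadsheet backend: stores each row as a list."""
    def append_row(args: dict) -> dict:
        rows.append(list(args["values"]))
        return {"row_count": len(rows)}
    return append_row


def make_dict_backend(rows: List[dict]) -> ToolFn:
    """Replacement backend with a different storage format, same tool contract."""
    def append_row(args: dict) -> dict:
        sender, subject = args["values"]
        rows.append({"sender": sender, "subject": subject})
        return {"row_count": len(rows)}
    return append_row


old_rows: List[list] = []
new_rows: List[dict] = []
email = {"from": "ana@example.com", "subject": "Invoice 42"}

# The same agent code runs against either backend; only the server side changed.
log_email(ToolClient({"append_row": make_list_backend(old_rows)}), email)
log_email(ToolClient({"append_row": make_dict_backend(new_rows)}), email)
```

Swapping the backend required no change to `log_email`. That is the modularity being described: the vendor-specific detail lives behind the tool interface, not in the agent.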

This is how we move toward the vision everyone wants: autonomous agents that can handle workflows, take initiative, and operate safely within constraints.


Model Context Protocol (MCP) as infrastructure for the A2A economy

The A2A economy—where autonomous AI agents represent people and organizations to interact and transact—requires a shared language for tools and agents. MCP provides just that.

In this future, your AI concierge doesn’t just reply to messages (Agent-to-Consumer). It pulls data from your CRM (Agent-to-Business), checks order statuses, negotiates delivery terms with a vendor’s agent (Agent-to-Agent), and settles invoices, all autonomously.

For that to work, agents must interoperate across tools, platforms, and organizations. That’s the infrastructure MCP enables.

Without a standard like MCP, every agent-to-agent interaction remains a custom job, prone to failure and fragile under change. With it, AI agents can form ecosystems: markets, value chains, and autonomous workflows.


Startup opportunities in the MCP ecosystem

Just as SMTP made room for SendGrid and HTTP for Cloudflare, MCP opens the door to a new layer of startups:

1. MCP app store: Imagine an interface for browsing and deploying ready-made MCP servers. Developers click to deploy, get a URL, and paste it into their agent.

2. Context orchestration engines: MCP standardizes interfaces but not memory or decision-making. Meta-layers that route context, manage prompts, or optimize tool sequencing are possible.

3. Tooling & dev infra: SDKs, CLIs, debuggers, tracing tools. Imagine a Postman or Vercel for MCP agents.

4. Observability platforms: Once agents go operational, teams need to monitor not just outputs but tool invocations. Where did the failure occur? What context was passed? Because MCP routes every tool call through one protocol, those calls can be captured and inspected in a consistent way.

5. Verticalized MCP libraries: Prebuilt MCP servers for finance, healthcare, logistics, legal, and ecommerce, built with domain-specific constraints in mind.
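As a rough illustration of the observability idea in item 4, the sketch below wraps a tool-calling function and records every invocation: which tool was called, with what arguments, how long it took, and whether it failed. All names here (`ToolTrace`, `TracingClient`, `fake_call_tool`) are hypothetical and not part of any MCP SDK. The sketch assumes only that tool calls pass through a single function that can be wrapped.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, List, Optional


@dataclass
class ToolTrace:
    """One recorded tool invocation."""
    tool: str
    arguments: dict
    ok: bool
    duration_ms: float
    error: Optional[str] = None


@dataclass
class TracingClient:
    """Wraps any call_tool(name, arguments) function and logs each invocation."""
    call_tool: Callable[[str, dict], Any]
    traces: List[ToolTrace] = field(default_factory=list)

    def call(self, tool: str, arguments: dict) -> Any:
        start = time.perf_counter()
        try:
            result = self.call_tool(tool, arguments)
        except Exception as exc:
            elapsed = (time.perf_counter() - start) * 1000
            # Record the failure with its context, then let the caller handle it.
            self.traces.append(ToolTrace(tool, arguments, False, elapsed, str(exc)))
            raise
        elapsed = (time.perf_counter() - start) * 1000
        self.traces.append(ToolTrace(tool, arguments, True, elapsed))
        return result


def fake_call_tool(tool: str, arguments: dict) -> dict:
    """Hypothetical backend: one tool works, the other simulates a vendor outage."""
    if tool == "send_sms":
        raise RuntimeError("vendor API timed out")
    return {"ok": True}


client = TracingClient(fake_call_tool)
client.call("append_row", {"values": ["ana@example.com", "Invoice 42"]})
try:
    client.call("send_sms", {"to": "+15550100", "body": "Your order shipped"})
except RuntimeError:
    pass  # The failure is already captured in client.traces.

failed = [t for t in client.traces if not t.ok]
```

After the run, `failed` holds the one failing call along with the arguments that were passed, which answers both questions from the list item: where the failure occurred and what context went with it.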


Open questions and early friction of MCP

Of course, as a new technology, MCP isn’t perfect. Setting up MCP today is still awkward. You’ll be copying files, wiring up local servers, and dealing with early-stage tooling. Not exactly plug-and-play.

While Anthropic is leading the charge, there’s no guarantee MCP will become the dominant standard. OpenAI, Meta, or Mistral could roll out their own. Standards are political, and it’s still early days.

But the underlying problem isn’t going away. We need a standardized way for LLMs to use tools. MCP might not be the final answer, but it’s the best version we’ve seen so far.

Strategic takeaway: MCP and the path to A2A

Right now, agentic AI development is in a fragile and fragmented phase. Even promising AI agent platforms like Manus still rely on glue code to make multi-tool agents function, which does not scale well.

MCP changes that. It introduces structure, predictability, and interoperability—everything real software systems need to scale. It offers a mental model for building AI agents that can reliably talk to external tools, scale across domains, and survive change.

But more than that, MCP could be laying the foundation for the agent-to-agent (A2A) economy, where the lines between B2B, B2C, and C2C start to blur as AI agents take over transactions, negotiations, and operations. In this world, your personal travel agent won’t browse websites. It will coordinate directly with other businesses’ AI agents to plan your entire trip, from flights and hotels to local tours and dining.

You don’t need to implement MCP tomorrow. But if you’re building agentic infrastructure—or betting on agents to transform your business—you need to understand it. Because when the standard hardens, it’ll be too late to catch up.

MCP isn’t just another dev tool. It’s infrastructure—for agents, ecosystems, and the future A2A economy.