Long polling: What it is and when to use it

Note: This article explains the concepts of long polling. It is not a guide to how Sendbird works. If you are looking for technical documentation, please see the docs.

What is long polling?

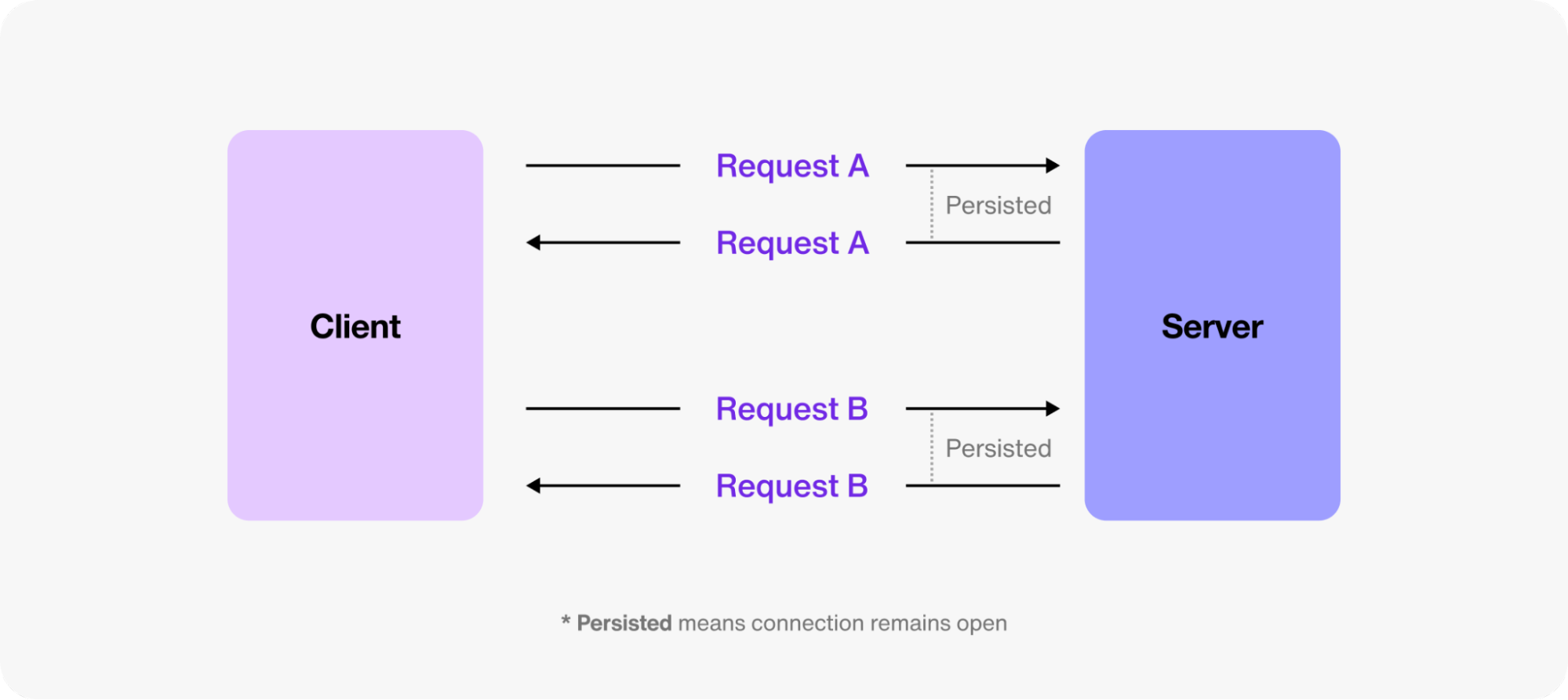

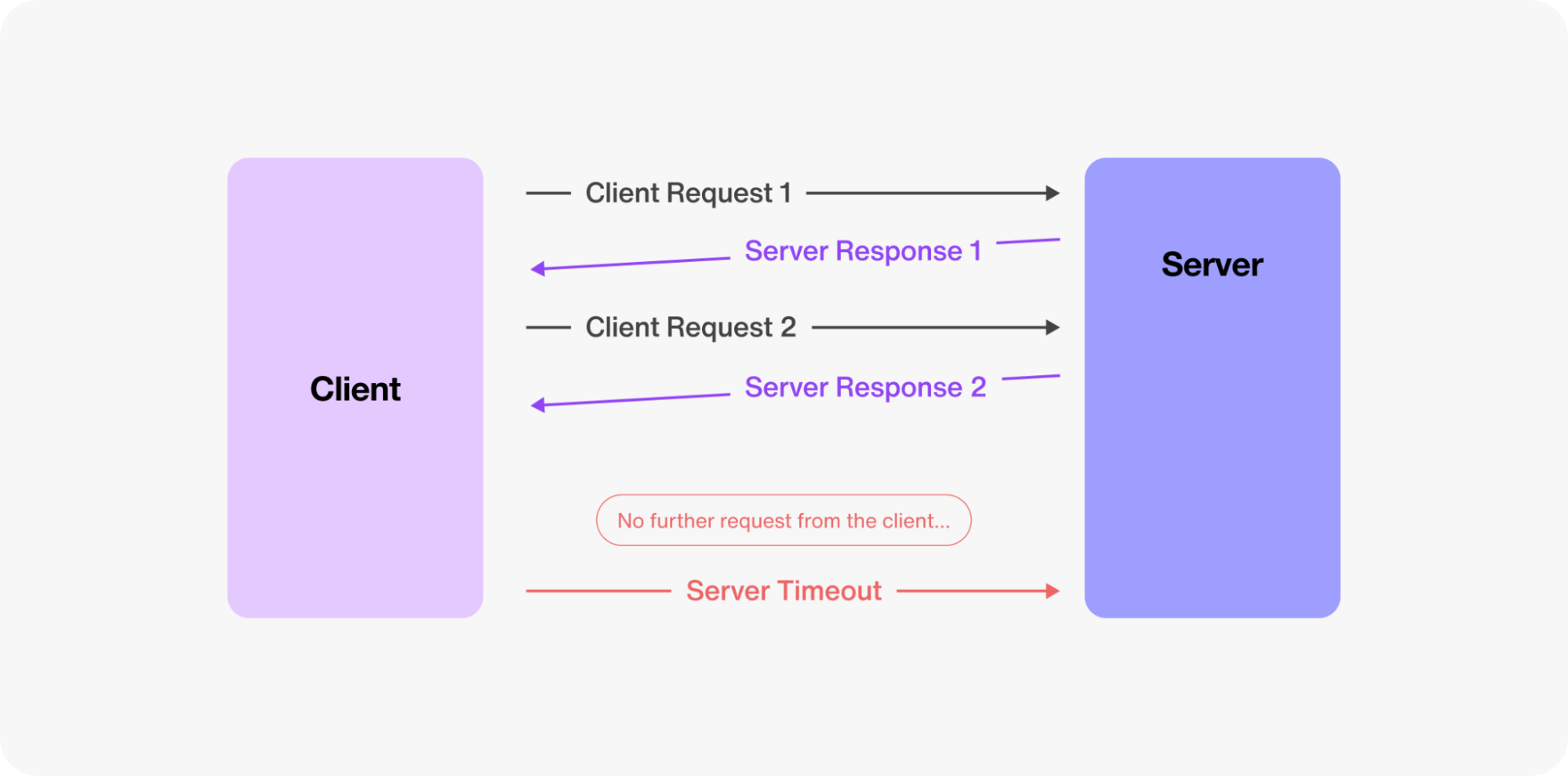

Long polling is a web communication technique in which a client (such as a web browser) requests information from a server, and the server holds the connection open until it has new data to send. Unlike regular polling, in which the client repeatedly sends requests at fixed intervals, long polling reduces the frequency of requests and server load by keeping the connection open longer. When the server has new information, it sends the data and closes the connection, prompting the client to immediately re-establish the connection and wait for more updates. This method effectively simulates real-time communication without the need for continuous request cycles.

Before WebSockets were standardized in 2011, long polling was a common workaround for real-time communication over HTTP. It was one of the few methods available for keeping clients updated without requiring users to manually refresh the page, and it did so without a dedicated, constantly open connection of the kind WebSockets provide. Today, WebSockets are well suited to real-time applications such as in-app chat, business messaging, and voice or video calls.

In this article, you’ll learn all about long polling, how it works, as well as the difference between long polling and short polling. You’ll also explore related technologies like server-sent events (SSE) and WebSockets in more detail. Let’s dive in!

How long polling works

Long polling begins with a client request: the client sends an HTTP request to the server asking for new data.

Once the server receives the request, it keeps the connection open until new data is available or a timeout occurs. Resources are allocated to maintain the connection, which can impact server performance if not managed properly. However, proper optimization techniques (such as connection pooling) help mitigate this issue.

When new data is available, the server responds to the client with the requested information and closes the connection. If no new data is available, the server sends an empty response upon timeout. The empty response signals the client to make a new GET request. The client then immediately sends a new request to the server, essentially repeating the cycle. This allows for near-real-time updates while still operating over conventional HTTP connections.

Step-by-step long polling process workflow

We have seen what long polling is and how it works. The cycle can be broken down into the following steps:

Client sends request: The client (browser or app) sends an HTTP request to the server asking for updates or new data.

Server checks for data: The server receives the request and checks if new data is available.

Server waits if no data: If no new data is available, the server holds the request open and waits for a defined period.

New data or timeout: If new data becomes available, the server immediately responds with the data. If no data arrives within the waiting period, the server sends an empty response to signal the timeout.

Client processes response: The client processes the server's response, updating the UI if new data is received or preparing for the next request if not.

Client sends another request: The client immediately sends a new request to the server, continuing the cycle for real-time updates.

This cycle repeats as long as updates are needed, providing near real-time communication without overwhelming the server with constant requests.
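The six steps above can be sketched with nothing but the Python standard library. This is a minimal illustration under stated assumptions, not a production design: the names `UPDATES`, `HOLD_SECONDS`, and the `/poll` path are hypothetical, the server runs locally on a free port, and each queued event is delivered to only one waiting client.

```python
import json
import queue
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical names for illustration only.
UPDATES = queue.Queue()   # events waiting to be delivered to a client
HOLD_SECONDS = 2.0        # how long the server holds a request open

# Bypass any system proxy, since this demo only talks to localhost.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class LongPollHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        try:
            # Steps 2-3: check for data, and hold the request if there is none.
            event = UPDATES.get(timeout=HOLD_SECONDS)
        except queue.Empty:
            # Step 4 (timeout): an empty response tells the client to ask again.
            self.send_response(204)
            self.end_headers()
            return
        # Step 4 (new data): respond immediately with the event.
        body = json.dumps(event).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def start_server():
    """Start the demo server on a free local port in a background thread."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), LongPollHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def poll_once(url):
    """Steps 1 and 5: send one request; return the event, or None on timeout."""
    # The client-side timeout must be longer than the server's hold time.
    with _OPENER.open(url, timeout=HOLD_SECONDS + 5) as response:
        if response.status == 204:
            return None
        return json.loads(response.read().decode("utf-8"))


def poll_until(url, wanted):
    """Step 6: re-request immediately until `wanted` events have arrived."""
    received = []
    while len(received) < wanted:
        event = poll_once(url)
        if event is not None:
            received.append(event)
    return received


if __name__ == "__main__":
    server = start_server()
    url = f"http://127.0.0.1:{server.server_address[1]}/poll"
    # Simulate a message arriving half a second after the client starts waiting.
    threading.Timer(0.5, UPDATES.put, args=[{"message": "hello"}]).start()
    print(poll_until(url, wanted=1))
    server.shutdown()
    server.server_close()
```

A threaded server is used here so that one held request does not block others; a real deployment would more likely use an asynchronous framework so that waiting clients do not each occupy a thread.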

Long polling example

In an in-app chat use case, long polling can be used to deliver new messages promptly without frequent requests to the server. When a user opens the chat, their app sends a long polling request to the server, essentially saying, "Tell me if there are any new messages." The server holds this request until a new message arrives. Once a message is available, the server sends it to the client, and the client immediately sends another request to wait for the next update. This process ensures that users receive new messages almost instantly while minimizing the number of requests sent to the server, providing a smooth and efficient chat experience.


In a nutshell, long polling reduces the number of requests needed to provide near-instant updates, lowering network overhead and minimizing latency when compared to short polling. Long polling provides the responsiveness needed for conversational applications like chat and collaboration tools.

Long polling vs. short polling

The distinction between long polling and short polling lies in where the waiting happens.

In short polling, the client sends requests at fixed intervals whether or not new data exists, which can lead to a high number of HTTP requests and increased server load. In long polling, the server holds each request open until it has new information (or a timeout occurs), then responds and closes the connection, and the client sends a new request. Long polling therefore reduces the number of requests and makes better use of server resources.
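To make the difference in request volume concrete, here is a rough back-of-the-envelope estimate. The function names and the numbers are assumptions chosen for illustration; the long polling figure is an upper bound that counts one request per update plus one per hold period that could expire with no data.

```python
import math


def short_poll_requests(duration_s, interval_s):
    """Requests sent when the client asks every `interval_s` seconds."""
    return math.ceil(duration_s / interval_s)


def long_poll_requests(duration_s, hold_timeout_s, updates):
    """Rough upper bound for long polling over the same window.

    Every update ends one held request, and every hold that expires
    without data ends one more.
    """
    return updates + math.ceil(duration_s / hold_timeout_s)


# One hour of activity, with 20 updates arriving during that hour.
print(short_poll_requests(3600, interval_s=2))                     # 1800
print(long_poll_requests(3600, hold_timeout_s=30, updates=20))     # 140
```

With these assumed numbers, short polling every two seconds sends 1800 requests in an hour, while long polling with a 30-second hold sends at most 140.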

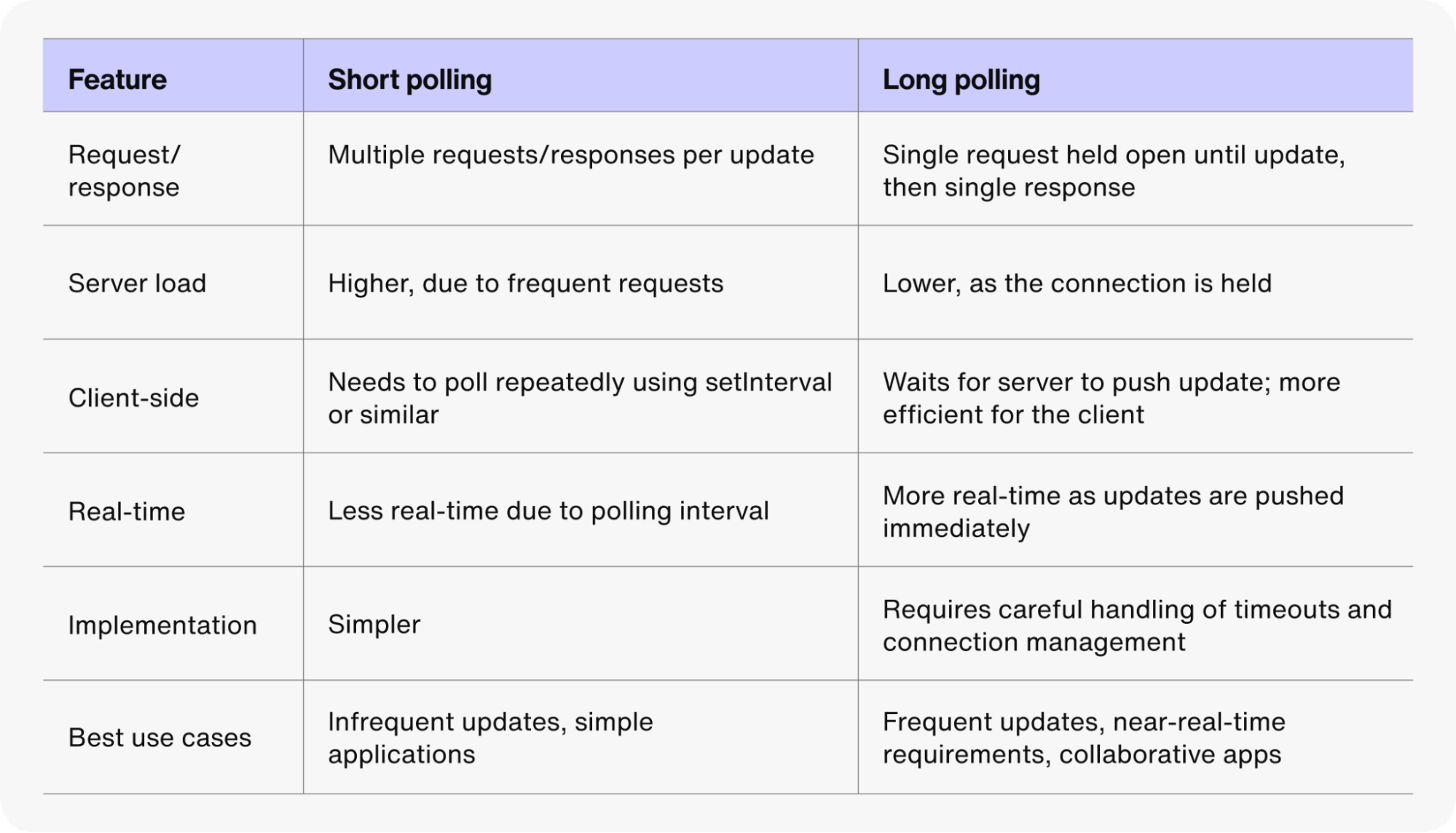

The table below summarizes the differences between HTTP long polling and short polling.

Remember that implementing long polling can be more complex than implementing short polling due to the persistent nature of the connection. Developers need to consider various factors, such as connection management, fallbacks for connection issues, and handling timeouts. Despite these complexities, the benefits of reduced latency and improved performance make it a worthy endeavor for many applications.

Moreover, since the server holds the connection open until it has new information, long polling tends to be more bandwidth-efficient than short polling, which involves frequent checks. However, optimization techniques like data compression are still important for keeping bandwidth usage low. If you allow too many concurrent connections, or connections with no timeout, long polling may not reduce bandwidth usage much compared with short polling. You can configure the server to detect unnecessary open connections, time out old connections, and throttle the number of connections to reach your preferred balance of responsiveness and bandwidth conservation.

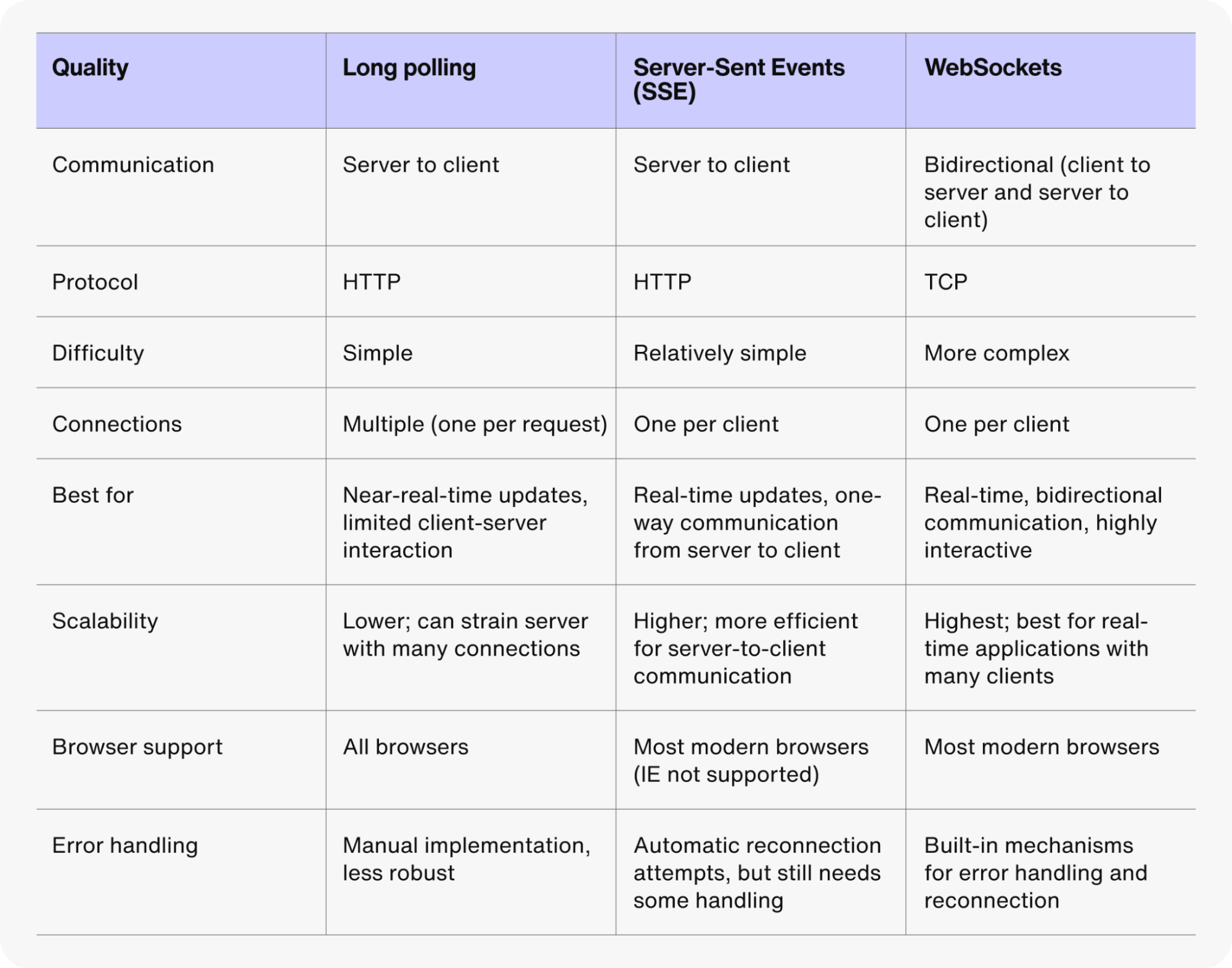

Understanding Server-Sent Events (SSE) and WebSockets

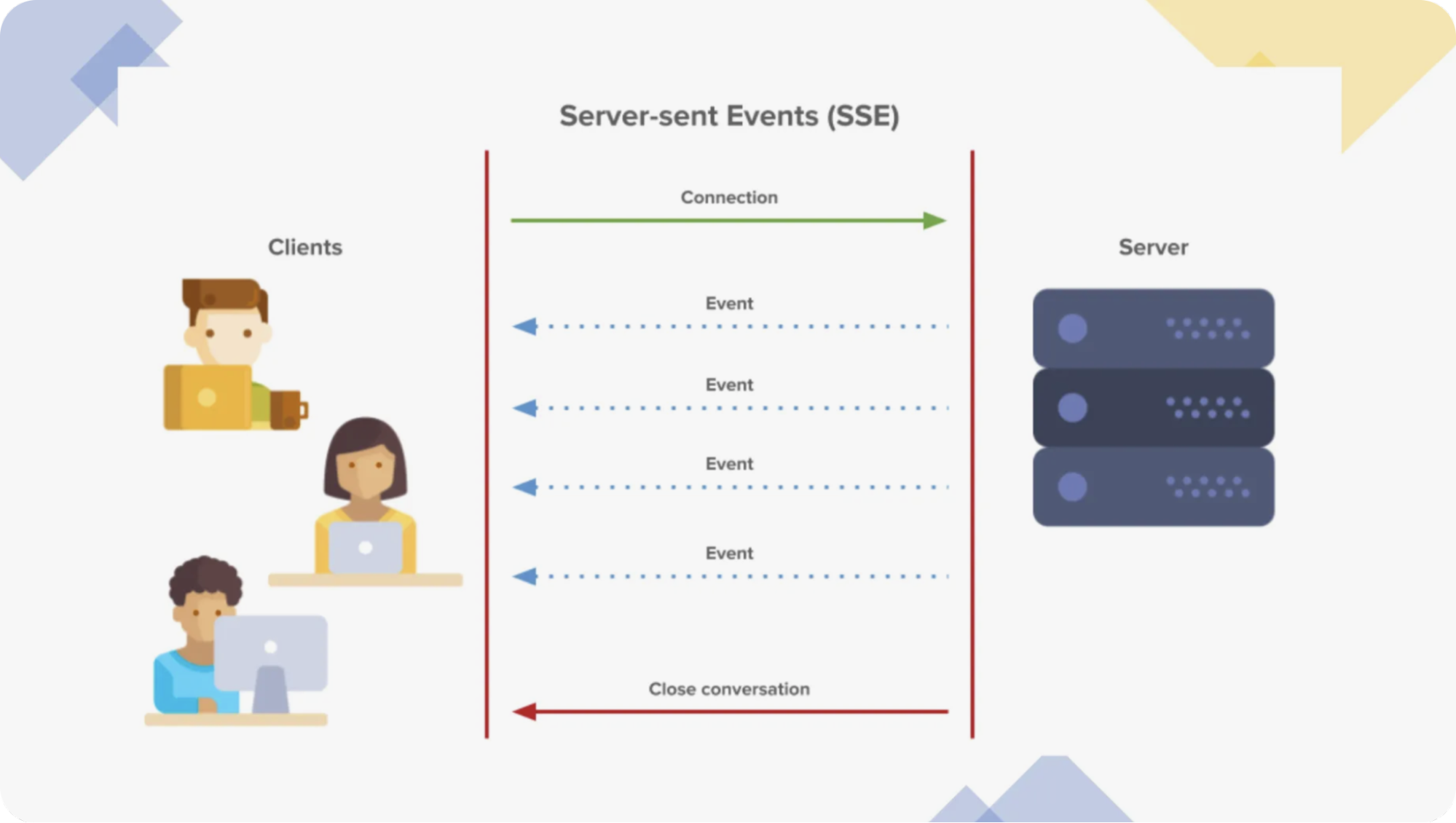

Server-sent events are a unidirectional communication model where updates come from the server to the client over a single, long-lived connection. Since the client can only receive updates and cannot send data back, SSE is unsuitable for applications that require client-side updates, such as chat or video games.

SSE doesn’t work well over HTTP/1.1, primarily because browsers limit the number of simultaneous connections per domain (typically six), and each SSE stream occupies one of them. Additionally, TCP head-of-line blocking means that if a packet is lost, the packets behind it must wait until the lost packet is retransmitted.
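For a sense of what travels over an SSE connection, the sketch below formats a single event in the text/event-stream wire format: optional `event:` and `id:` fields, one `data:` line per line of payload, and a blank line to end the event. The helper name `format_sse_event` is hypothetical; a server would write these strings to a response whose content type is text/event-stream.

```python
def format_sse_event(data, event=None, event_id=None):
    """Format one server-sent event as text/event-stream text."""
    lines = []
    if event is not None:
        lines.append(f"event: {event}")
    if event_id is not None:
        lines.append(f"id: {event_id}")
    # A multi-line payload is sent as several consecutive data: lines.
    for part in data.split("\n"):
        lines.append(f"data: {part}")
    # A blank line marks the end of the event.
    return "\n".join(lines) + "\n\n"


print(format_sse_event("price=101.5", event="tick", event_id="42"), end="")
```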

In contrast, WebSockets provide bidirectional, full-duplex communication over a single TCP connection with a long timeout. Unlike short or long polling, the client does not need to initiate communications and wait for a response. Full-duplex means that the client and server can send messages to each other at any time, making it useful for highly interactive applications like live chat or gaming.

However, WebSockets create persistent connections that place an additional load on servers. This is because the connection stays open, and the connection information is retained in memory as long as the connection is open. Proper scoping for your application infrastructure can mitigate these effects, but WebSocket connections are best reserved for cases in which they are truly needed.

Lastly, “Comet” is an umbrella label for techniques that allow a web server to send data to the client without an explicit request from the client for each update. The client opens the request, and the server may then continue to push updates to the client. It’s not a technology in itself, but a term for how the connection is meant to work. Long polling and streaming approaches such as SSE both fall under the Comet label. WebSockets do not fall under the Comet model.

Long polling vs. WebSockets: Similarities & differences

Let’s talk about the similarities and differences between long polling and WebSockets in more detail.

Similarities between long polling and WebSockets

Real-time communication: Both long polling and WebSockets enable real-time data exchange between the client and server, allowing applications to receive updates as soon as new data becomes available. They are commonly used for applications requiring timely data updates, such as chat apps, notifications, or live feeds.

Client-initiated requests: In both technologies, the client initiates the communication. With long polling, the client repeatedly sends HTTP requests, and in WebSockets, the client establishes a persistent connection.

Event-driven data delivery: Both methods support event-driven interactions. In long polling, the server sends data when it becomes available in response to a client's request. Similarly, WebSockets allow the server to push data to the client in real-time without the need for continuous polling.

Differences between long polling and WebSockets

Connection model

Long polling: Works by keeping the HTTP connection open until the server has data to send or the request times out, after which a new request is initiated. Each communication cycle starts with a new request.

WebSockets: Establishes a single, persistent connection between the client and server. Once the WebSocket connection is open, data can flow bidirectionally without reopening a new connection.

Bidirectional communication

Long polling: Primarily supports unidirectional communication, where the server sends updates in response to the client’s requests. While the client can send requests, it generally does not receive unsolicited data.

WebSockets: Provides full-duplex communication, meaning both the client and server can send messages to each other independently and continuously once the connection is established.

Resource efficiency

Long polling: Less efficient due to repeated request-response cycles, which create additional overhead by constantly reopening HTTP connections. This can increase server load and network traffic, especially in high-frequency use cases.

WebSockets: More efficient because the connection is kept open, reducing the overhead associated with HTTP handshakes and allowing continuous, low-latency communication with minimal resource usage.

Latency

Long polling: Typically experiences higher latency due to the need to reopen connections and wait for server responses, especially when there is no new data to send immediately.

WebSockets: Lower latency because the persistent connection allows immediate, real-time data transfer in both directions without the need to reestablish connections.

Use cases

Long polling: Best suited for applications that need real-time updates but where full-duplex communication isn't required, such as notification systems or live data feeds.

WebSockets: Ideal for interactive, bidirectional applications like chat apps, multiplayer games, or collaborative tools, where constant communication between client and server is crucial.

Long polling vs SSE (Server-Sent Events): Similarities & differences

Similarities between long polling and SSE

Real-time updates: Both long polling and SSE are used to deliver real-time updates from the server to the client. They enable applications to receive timely information as soon as it is available, which is essential for live data applications like chat or notifications.

Unidirectional communication: In both technologies, communication is unidirectional, meaning that data flows from the server to the client. The client initiates the communication, but once established, the server sends data when new information is available.

HTTP-based: Both long polling and SSE operate over standard HTTP protocols, making them compatible with most web infrastructure without requiring special configurations. This means that they can be implemented on existing web servers and are supported by firewalls and proxies.

Connection handling: Both long polling and SSE involve keeping the connection open for extended periods of time. In long polling, the connection stays open until the server has data to send or times out, while in SSE, the connection remains open continuously for data streaming.

Use in event-driven applications: Both technologies are suitable for event-driven applications where the server pushes updates as events occur, rather than the client polling for new data at regular intervals. This makes them efficient for applications that require asynchronous data delivery.

Differences between long polling and SSE

Connection model

Long polling: Operates by keeping an HTTP connection open until the server has new data to send or the request times out. Once the server responds, the connection closes, and the client initiates a new request.

SSE: Establishes a single, persistent HTTP connection that remains open for the server to continuously send updates to the client without needing to reopen the connection after each update.

Unidirectional communication

Long polling: Allows unidirectional communication, with the server responding to client requests. The client can initiate new requests, but communication is primarily server-to-client once a request is made.

SSE: Also unidirectional, but designed specifically for server-to-client communication. The server can send multiple updates continuously through the open connection, without needing the client to request each one.

Efficiency and overhead

Long polling: Less efficient because each update requires reopening an HTTP connection after the previous one is closed, which introduces additional overhead.

SSE: More efficient for server-to-client updates, as the connection is kept open, reducing the overhead of repeatedly establishing and tearing down connections.

Protocol support

Long polling: Supported by all browsers and does not require any specific server-side support beyond standard HTTP.

SSE: Built into modern browsers and natively supports real-time data streaming, but it is not supported by older browsers (e.g., Internet Explorer) and requires a server that supports the text/event-stream content type.

Use cases

Long polling: Commonly used in scenarios where updates are less frequent or where more control is needed over when to initiate communication, such as notification systems or applications that require some form of client-driven interaction.

SSE: Ideal for applications requiring continuous, real-time updates from the server, such as live feeds, stock tickers, or event-driven systems where data flows primarily from server to client without frequent client requests.

Now let’s talk about error handling in long polling.

Long polling error handling: Key concepts and strategies

In long polling, error handling ensures that communication between the client and server remains reliable even when issues arise. Errors can occur due to timeouts, network disruptions, or server-side failures. Handling these errors effectively is crucial for maintaining a stable and responsive application.

This is important because errors can interrupt the flow of data between the client and server, leading to poor user experience or application downtime. Proper error handling ensures that the system can recover gracefully and continue functioning, even in the face of connectivity issues or server failures.

Key strategies for long polling error handling

1. Connection timeout and response handling

Timeouts: Set a reasonable connection timeout on the server to prevent requests from hanging indefinitely. If no new data is available by the timeout, the server should return a status like 204 No Content or an appropriate message indicating no updates.

Empty responses: When no data is available, return an empty response or specific status codes, ensuring the client understands the situation and doesn’t misinterpret the response as a failure.

2. Automatic retry mechanism

Automatic retries: If a long polling request fails due to a network issue or server error, implement automatic retries on the client side. Clients should wait a short time before retrying the request to avoid flooding the server with back-to-back requests.

Exponential backoff: For repeated errors, use an exponential backoff strategy, where the retry interval increases progressively (e.g., 1 second, 2 seconds, 4 seconds). This helps prevent overwhelming the server while giving it time to recover.
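The retry and backoff ideas above might look like the following sketch. All names and default values here are assumptions for illustration. The cap and the random jitter are additions not described above; jitter is a common way to keep many clients from retrying at the same instant.

```python
import random
import time


def backoff_delays(base=1.0, factor=2.0, cap=30.0, attempts=5):
    """Delays of 1s, 2s, 4s, ... limited to `cap` seconds."""
    return [min(cap, base * factor ** n) for n in range(attempts)]


def with_jitter(delay, rng=random.random):
    """Scale a delay to between 50% and 100% of its value (optional addition)."""
    return delay * (0.5 + rng() / 2)


def call_with_retries(operation, delays, sleep=time.sleep):
    """Run `operation`, waiting out each delay after a network-level failure."""
    for delay in delays:
        try:
            return operation()
        except OSError:  # urllib.error.URLError and socket errors derive from OSError
            sleep(with_jitter(delay))
    return operation()  # final attempt; any error now propagates to the caller


print(backoff_delays(attempts=4))  # [1.0, 2.0, 4.0, 8.0]
```

Passing `sleep` as a parameter keeps the helper easy to test without real waiting.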

3. Handling HTTP status codes

Ensure the server returns proper HTTP status codes to help the client handle different error scenarios.

500 (Internal server error): Signals a server issue; the client should retry after a delay.

404 (Not found): Indicates that the requested resource is not available; the client may stop retrying.

408 (Request timeout): Indicates that the server did not receive a complete request within the time it was prepared to wait; the client can retry immediately on a new connection.
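On the client, the status codes above can be turned into a small decision function. This is a sketch only: the action names are hypothetical, and treating every other 4xx code as a reason to stop is an assumption rather than a rule.

```python
def next_action(status):
    """Map an HTTP status code to what the polling client does next."""
    if status in (200, 204):
        return "poll_now"            # data or an empty response: continue the cycle
    if status == 408:
        return "poll_now"            # request timed out: retry right away
    if 500 <= status < 600:
        return "retry_after_delay"   # server trouble: back off before retrying
    if status == 404:
        return "stop"                # resource is gone: retrying will not help
    return "stop"                    # assumption: other client errors are not retried


for code in (200, 204, 404, 408, 500):
    print(code, next_action(code))
```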

4. Connection health monitoring

Regularly check the status of the connection. If the client detects a loss of connection or repeated failures, it should attempt to reestablish communication by restarting the long polling cycle.

Monitoring ensures that temporary issues are resolved, and the client remains connected once the server is available again.

5. Fallback mechanisms

If long polling repeatedly fails (e.g., due to persistent server errors or network issues), implement fallback strategies like switching to traditional polling. This ensures the client continues to receive updates, even if it’s less efficient than long polling. Having alternative communication methods helps maintain service continuity.
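One possible shape for such a fallback is a small state tracker that switches to fixed-interval polling after several consecutive failures. The class name, threshold, and interval below are assumptions; this sketch also stays in fallback mode once it has switched, which a real client might want to revisit.

```python
class PollingStrategy:
    """Track consecutive failures and fall back from long to short polling."""

    def __init__(self, max_failures=3, short_poll_interval=5.0):
        self.max_failures = max_failures
        self.short_poll_interval = short_poll_interval
        self.failures = 0
        self.mode = "long"

    def record_success(self):
        self.failures = 0

    def record_failure(self):
        self.failures += 1
        if self.failures >= self.max_failures:
            self.mode = "short"  # give up on held requests, poll on a timer

    def delay_before_next_request(self):
        # Long polling re-requests immediately; short polling waits an interval.
        return 0.0 if self.mode == "long" else self.short_poll_interval


strategy = PollingStrategy()
for _ in range(3):
    strategy.record_failure()
print(strategy.mode, strategy.delay_before_next_request())  # short 5.0
```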

6. Error logging and monitoring

Both client and server should log errors and monitor long polling interactions. Tracking failed requests, timeouts, and server responses helps identify patterns and diagnose problems before they escalate.

Proactive monitoring and logging enable faster issue resolution and better long-term system stability.

By following these strategies, you can ensure reliable communication in your application, even when errors occur, improving both user experience and system resilience.

How to optimize long polling

If you include long polling in your client/server communication options, you can limit server-side resource consumption through configuration. The following options cut down on unnecessary connections and reduce the load of fetching and sending data:

Connection timeouts: Implement sensible connection timeouts to avoid resource wastage. This helps close idle connections promptly, ensuring that resources are available for active users and processes.

Throttling and rate limiting: Limit the number of requests and the amount of bandwidth that long polling clients can use, so that excessive requests do not overwhelm the server.

Connection pooling: Maintain a pool of reusable connections. This approach reduces the overhead associated with establishing new connections and ensures quicker response times.

Heartbeat messages: Send periodic “heartbeat” signals to confirm that the client is still connected and responsive.

Chunking data: Before transmission, break down large pieces of data into smaller, manageable chunks. When the server can send data in smaller increments, the client starts processing data without waiting for the entire payload.

Caching: Cache frequent requests and responses to reduce the load on your server and speed up client response times.

Scalability and load balancing: Distribute incoming requests across multiple servers to improve performance and ensure no single server becomes overwhelmed.

Async operations: By using background workers for slow tasks, you can keep the main thread free, ensuring that your application remains responsive even during heavy processing periods.

Batched responses: Wherever possible, collect multiple updates and send them at once. This reduces the frequency of requests and responses, thus enhancing performance and reducing server load.

Selective polling: Consider using selective polling to minimize unnecessary data transfer. Simply put, selective polling allows the server to respond only when specific conditions are met. This approach keeps the server from sending updates the client does not need, which can help reduce server load and bandwidth consumption and improve overall performance.

Retry mechanism: Develop a robust retry mechanism to handle failed requests and ensure continuous real-time updates despite network disruptions.

Compression: Compressing data before sending lowers latency and reduces the amount of bandwidth consumed.
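Two of the options above, batched responses and compression, combine naturally. The sketch below drains whatever updates are already waiting into one list and gzips the JSON body. The function names and the `max_items` limit are assumptions; a real server would also set a Content-Encoding: gzip header, and only when the client's Accept-Encoding header allows it.

```python
import gzip
import json
import queue


def drain_batch(updates, max_items=50):
    """Collect the updates that are already queued, without waiting for more."""
    batch = []
    while len(batch) < max_items:
        try:
            batch.append(updates.get_nowait())
        except queue.Empty:
            break
    return batch


def encode_batch(batch):
    """Serialize a batch as JSON and compress it with gzip."""
    return gzip.compress(json.dumps(batch).encode("utf-8"))


pending = queue.Queue()
for n in range(3):
    pending.put({"message": f"update {n}"})

body = encode_batch(drain_batch(pending))
print(json.loads(gzip.decompress(body)))
```

For very small payloads, gzip can make the body larger rather than smaller, so compression is usually worth applying only above some size threshold.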

7 programming languages compatible with long polling

JavaScript (Node.js): JavaScript, particularly when used with Node.js on the server side, is highly compatible with long polling due to its non-blocking, event-driven architecture. Node.js handles asynchronous requests efficiently, making it ideal for applications that require continuous communication like long polling. It also has strong support for HTTP and WebSockets, making it a versatile choice for real-time applications.

Python: Python, with frameworks like Flask or Django, is another strong option for long polling. Python’s simplicity and ease of use, combined with the ability to handle asynchronous tasks through libraries like asyncio and aiohttp, make it suitable for long polling. However, Python may not be as efficient as some other languages for handling a very high number of simultaneous connections without optimizations.

Ruby: Ruby, particularly when using the Rails framework with libraries like ActionController::Live or event-driven servers like Puma, can support long polling. While Ruby is known for its simplicity and developer productivity, it may require additional tools (e.g., Redis, Sidekiq) to manage high concurrency in long polling efficiently.

Java: Java is widely used in enterprise applications and has excellent support for long polling through servlet-based frameworks like Spring Boot or Java EE. Java’s multithreading capabilities and robust server-side frameworks make it well-suited for handling numerous client connections with long polling. It is known for scalability and stability in large-scale applications.

Go: Go is a highly efficient and performant language for network-based applications, including long polling. Its built-in support for concurrency through goroutines makes it particularly good at handling many simultaneous connections with minimal overhead. Go’s speed and efficiency make it an excellent choice for real-time applications.

PHP: PHP can be used for long polling, particularly in environments that leverage asynchronous libraries like ReactPHP. While PHP is traditionally blocking, using it with these libraries or external event-driven servers can allow PHP to handle long polling. However, it may not be as efficient for handling very high-concurrency scenarios compared to languages like Node.js or Go.

C#: C# with ASP.NET provides robust support for long polling via its asynchronous programming features, such as async/await. ASP.NET’s SignalR framework, while more commonly used for WebSockets, can also implement long polling when WebSockets are not available. It’s highly scalable and well-suited for enterprise-level applications.

When choosing a language, consider your resources, requirements, documentation, and need for support.

5 long polling frameworks and libraries

Here are five useful frameworks and libraries for implementing long polling:

Socket.io (JavaScript, Node.js): Socket.IO is widely used and automatically manages the transition between WebSockets and long polling. It’s highly versatile and has strong community support, making it ideal for real-time applications like chat, live updates, and notifications.

Flask-SocketIO (Python): Flask-SocketIO provides seamless integration with Flask and supports long polling as a fallback for WebSockets. It's an essential tool for Python developers building real-time apps that need reliable communication channels.

SignalR (C#, ASP.NET): SignalR is a robust framework for ASP.NET that handles real-time communication efficiently. Its automatic fallback to long polling ensures reliability, making it a go-to choice for enterprise-level applications.

CometD (Java): CometD offers a scalable and reliable solution for real-time messaging in Java, using the Bayeux protocol. It’s ideal for developers who need long polling or other Comet-style messaging in high-concurrency environments.

AIOHTTP (Python): AIOHTTP provides low-level, flexible control over HTTP communication with built-in support for asynchronous programming. It's particularly useful for Python applications that require fine-tuned long polling functionality.

These libraries are widely supported, efficient, and reliable for long polling and real-time communication needs.

How to implement long polling

To implement long polling, you can either write a script from scratch or implement it with libraries and frameworks in (for example) JavaScript or Java. The decision on how you implement it is ultimately based on your available tools and the environment in which you will host the long polling function.

The following examples are not exhaustive, but they are representative of popular options.

Long polling implementation with HTTP

Long polling can be implemented from scratch over plain HTTP. This example uses Python, which is available in many environments. The script provided here is a basic implementation without the optimization and security features listed in the article. Keep in mind that this is only an example meant to demonstrate the simplicity of long polling and should not be used in production.

A few notes before you review the code:

The demonstrated connection is to the mediastack news server, which provides new headlines every ten seconds. If you copy-paste and run the script, changing only the API key, you should receive a list of events.

This script does not work with online Python sandboxes, such as Online Python Compiler or Python Tutor, because they do not allow responses from the server.

Before running, make sure you sign up for a free API key from mediastack and insert it before the API call. There are several methods for doing so; an environment call is discussed around 2:14 in this video by NeuralNine.

Long polling implementation with libraries and frameworks

You can also implement HTTP long polling using premade libraries and frameworks. Client-side and server-side libraries are available.

When you choose to implement long polling with libraries and frameworks, consider options for timeout management, reconnection handling, and error handling. Also, take into consideration which browsers (if any) are most likely used by your target audience. Both of these considerations can help determine which library you choose to use.

JavaScript offers two useful client-side libraries: Pollymer and jQuery.

Pollymer is a general-purpose AJAX library with features designed for long polling applications. It offers important improvements, such as managing request retries and browser compatibility.

jQuery was not specifically created to improve long polling, but jQuery’s AJAX capabilities make long polling easier to implement.

Server-side libraries in Go and Java are also available to assist you in the implementation of long polling. Golang Longpoll is a Go library for creating HTTP long poll servers and clients. It has features like event buffers and automatic removal of expired events, which lower the burden of the open connection. The Spring Framework has libraries and tools for long polling within Java applications, such as DeferredResult.

Let’s now discuss one important consideration of polling: security.

Security considerations for long polling

As with almost any communication protocol, there are security implications you need to take into account. Thankfully, if you follow good security practices, many of these concerns are easy to overcome:

Encryption: Use HTTPS to encrypt data in transit between the client and the server. This prevents potential eavesdropping or man-in-the-middle attacks and protects data integrity and confidentiality.

Access management: If the data has confidentiality requirements or if you are concerned about distributed denial-of-service (DDoS) attacks, you should implement authentication and authorization mechanisms. This limits the initiation of long polling requests to verified users, and it validates requests on the server side.

Rate limiting: This helps you protect your server from DDoS attacks and prevents resource starvation by limiting the number of connections per source per chosen timeframe (e.g. five connections from the same source per second).
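The per-source limit described above (for example, five connections from the same source per second) can be sketched as a sliding-window counter. The class name and defaults are assumptions, the state lives in memory in a single process, and the `source` key is whatever identifies a caller in your system, such as an IP address or user ID.

```python
import time
from collections import defaultdict, deque


class ConnectionRateLimiter:
    """Allow at most `max_connections` per source within a sliding time window."""

    def __init__(self, max_connections=5, window_seconds=1.0, clock=time.monotonic):
        self.max_connections = max_connections
        self.window_seconds = window_seconds
        self.clock = clock
        self._hits = defaultdict(deque)  # source -> timestamps of recent connections

    def allow(self, source):
        now = self.clock()
        hits = self._hits[source]
        # Forget connections that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_connections:
            return False  # the server would typically answer 429 Too Many Requests
        hits.append(now)
        return True


limiter = ConnectionRateLimiter()
print([limiter.allow("203.0.113.7") for _ in range(6)])
```

Run back to back, the first five calls from the same source are allowed and the sixth is rejected until the window moves on.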

These considerations are not the only good security practices for long polling. Consider the same precautions you would use for any other HTTP-enabled services, such as content limitations and validation of the transmission sources. Sometimes, the APIs themselves will require these or other security features.

Long polling in real-time applications

Long polling remains a useful technique in the toolkit of real-time communication methods, especially when newer technologies like WebSockets or SSE might not be feasible.

Long polling is the simplest and most widely supported solution for important real-time communication and update operations like business messaging and chat programs. You can use long polling as the primary method of communication, or you could use it as a backup to WebSockets or SSE. Either implementation ensures you have chosen a protocol and functionality that almost every information system supports.

Long polling is particularly beneficial for projects at a smaller scale or during the prototyping phase when immediate optimization isn’t the priority. However, you should always implement proper security measures and optimize your infrastructure to make the most of this (or any) communication method. Once you are certain that long polling is the way forward for your application, spend time getting to know the additional ways to make the system more secure and more functional.

When your business decides that the time is right to build in-app communication such as chat, calls, or omnichannel business messaging, Sendbird is ready to provide customer communications solutions that you can build on, including a chat API, a business messaging solution, and a fully customizable AI chatbot.


Send your first message today by creating a Sendbird account to get access to valuable (free) resources with the Developer plan. Become a part of the Sendbird developer community to tap into more resources and learn from the expertise of others. You can also browse our demos to see Sendbird Chat in action. If you have any other questions, please contact us. Our experts are always happy to help!