How Sendbird approaches SDK testing for stability and performance


As a leader in the in-app chat and messaging API market, the quality of our code releases is always top of mind. Maintaining a secure and bug-free environment — across iOS, Android, and JavaScript SDKs — is paramount for our customers.

With SDKs, unfortunately, Sendbird has no control over the installation of the updated code. It is up to the app user to install the new version. This makes the quality of our SDK even more critical.

In response, Sendbird has created thorough and fast testing procedures that ensure the stability of our products. This blog post describes our testing approach and drills down into a few of the methods we use to test and improve the stability of our platform.

Overall approach

The primary purpose of testing is to support iterative development: tests make it easier to write new code and refactor existing code while ensuring that everything still works properly.

Using CircleCI and Fastlane, we test our SDK every time a commit is pushed to a branch that is associated with a pull request. This allows us to keep track of our commits and actively check to see if they are passing the test cases.

Every commit to the develop, main, and hotfix branches is also tested to ensure that our production code is safe for deployment.

Organizing multiple test schemes

With hundreds of test cases to cover, we separate them into three unique schemes:

- Unit test: Tests each component separately

- Scenario test: Tests the SDK as a whole

- Media test: Specifically tests the handling and integrity of media

We also perform regression tests, running existing tests with every change to the SDK. Test cases are rarely removed or modified, and we try to add more tests as we make changes to our SDK.

Considerations

When creating tests for our SDK, there are a few things to keep in mind:

- SDKs have very complex architectures.

- SDKs can be used in various versions and platforms.

- SDKs can be used under different use cases and scenarios that are not always ideal.

- SDKs have some dependency on hardware and UI-related APIs.

Testing an SDK means that we have to test the core functionality of the SDK, assessing each component of the SDK as well as the overall architecture and flow to ensure that it works under diverse scenarios.


Our approach

To ensure the stability of our code, we first make sure we have:

- Enough test cases to cover the large majority of our code

- A variety of tests that can evaluate all of our features

Writing detailed tests

Test all code paths

Achieving truly safe code means being confident in how it will behave under every possible circumstance. This includes writing test cases where the code is expected to fail, such as:

- Creating objects with invalid parameters

- Using invalid data

- Running a series of actions that are not acceptable under normal conditions

For example, to test a failed API call, you could mock your API client and specify a failing response like this:

```swift
// Configure the mock so that the call fails.
let apiClient = MockAPIClient()
apiClient.response = FailResponse()
apiClient.run()

// The client should surface the expected error.
XCTAssert(apiClient.error == FAILURE)
```

Although such scenarios are unlikely to occur in a production environment, this allows us to ensure that errors are handled correctly.

This also opens the door for flexible refactoring: with such a broad test suite, we can be confident that refactored code behaves the same way as the original.
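As a rough illustration of testing the "invalid parameters" path, the sketch below validates input in a throwing initializer and checks that each invalid case produces the expected error. `DialParams`, `DialError`, and `capturedError` are hypothetical names made up for this example and are not part of the Sendbird SDK; in an XCTest target, the same checks would typically be written with `XCTAssertThrowsError`.

```swift
// Hypothetical types for illustration only; not part of the Sendbird SDK.
enum DialError: Error, Equatable {
    case emptyCalleeId
    case calleeIsCaller
}

struct DialParams {
    let callerId: String
    let calleeId: String

    // Validates input up front so an invalid object can never be created.
    init(callerId: String, calleeId: String) throws {
        guard !calleeId.isEmpty else { throw DialError.emptyCalleeId }
        guard calleeId != callerId else { throw DialError.calleeIsCaller }
        self.callerId = callerId
        self.calleeId = calleeId
    }
}

// Runs `body` and returns the error it threw, or nil if it succeeded.
func capturedError(_ body: () throws -> Void) -> Error? {
    do {
        try body()
        return nil
    } catch {
        return error
    }
}

// Failure path: an empty callee ID must be rejected with a specific error.
let emptyCallee = capturedError { _ = try DialParams(callerId: "alice", calleeId: "") }
assert((emptyCallee as? DialError) == .emptyCalleeId)
```

Asserting on the specific error, rather than only on the fact that something failed, keeps the test meaningful if new failure modes are added later.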

Track the code coverage

Tracking code coverage is important because it shows how coverage changes as we merge new PRs, which encourages us to write new test cases as new features are added.

Many test automation tools, such as Fastlane, come with pre-built features for this. Using Fastlane’s xcov and Xcode’s built-in code coverage feature, we calculate code coverage on every CI check and post the results to Slack. This helps us keep track of coverage and reminds us to add test cases as we develop new features.

Writing broad tests

Mocking

Mocking is important in unit testing because not every component can be exercised directly in a test, so we need to mock some of the dependencies that a module relies on.

Mocking replaces and simulates the behavior of original modules, enabling us to control the responses and behaviors of the modules as we test our SDK. Here’s a snippet of a module that mocks the API client:

```swift
class MockAPIClient: SendBirdCalls.APIClient {
    var response: Response?
    var error: SBCError?

    override func send<R: ResultableRequest>(request: R, completionHandler: R.CommandHandler?) {
        // Some other business logic, skips actual network request
        completionHandler?(response as? R.ResultType, error)
    }
}
```

Instead of actually communicating with the server, we can do the following:

```swift
let apiClient = MockAPIClient()
apiClient.response = SuccessResponse()
let someOtherModule = MockOther(apiClient: apiClient)
```

With this approach, we can manipulate the behavior of `someOtherModule` and remove the need to communicate with the server.
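Because the snippets above depend on internal SDK types, here is a self-contained sketch of the same idea that can be run on its own. It assumes the dependency is expressed as a protocol and passed in through the initializer; `APIClient`, `APIError`, `MockAPIClient`, and `ProfileLoader` are hypothetical names for this example and do not reflect the actual SendBirdCalls API.

```swift
// Hypothetical names throughout; this is not the SendBirdCalls API.
enum APIError: Error, Equatable {
    case unauthorized
}

// The dependency is expressed as a protocol so tests can substitute it.
protocol APIClient {
    func fetchUserName(id: String, completion: (Result<String, APIError>) -> Void)
}

// Test double: returns a canned result and records what was requested.
final class MockAPIClient: APIClient {
    var result: Result<String, APIError> = .success("")
    private(set) var requestedIds: [String] = []

    func fetchUserName(id: String, completion: (Result<String, APIError>) -> Void) {
        requestedIds.append(id)
        completion(result) // No network request is made.
    }
}

// Module under test: receives its client through the initializer.
final class ProfileLoader {
    private let apiClient: APIClient

    init(apiClient: APIClient) {
        self.apiClient = apiClient
    }

    func greeting(for id: String) -> String {
        var text = ""
        apiClient.fetchUserName(id: id) { result in
            switch result {
            case .success(let name): text = "Hello, \(name)"
            case .failure: text = "Could not load user"
            }
        }
        return text
    }
}

// The test controls the response, so both branches are easy to reach.
let mockClient = MockAPIClient()
mockClient.result = .success("Alice")
let loader = ProfileLoader(apiClient: mockClient)
assert(loader.greeting(for: "user-1") == "Hello, Alice")
```

Recording the requested IDs in the mock also lets a test verify that the module called its dependency with the expected arguments.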

Testing the SDK as a whole

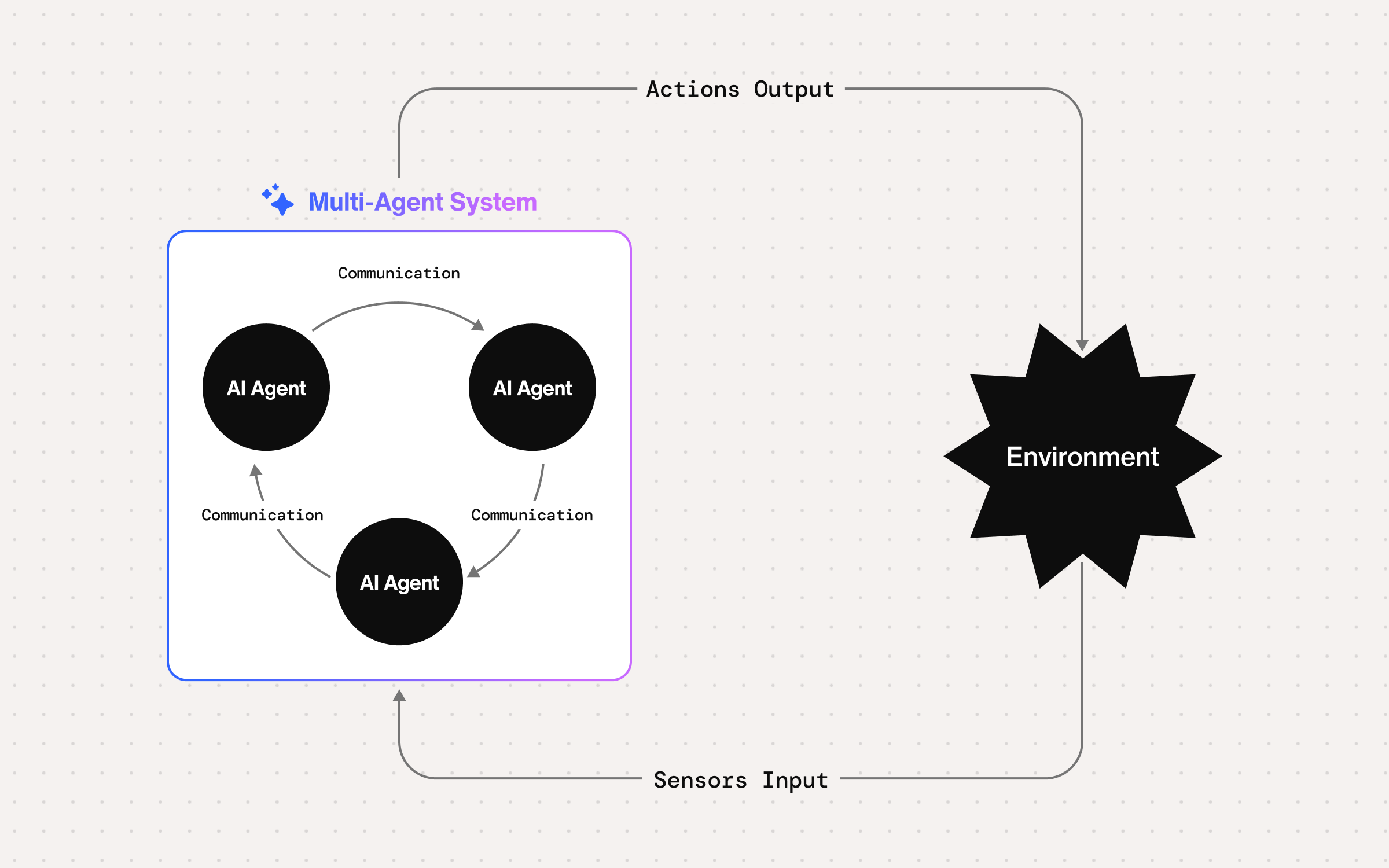

In addition to unit tests, we have scenario tests that evaluate the overall flow and usage of the SDK.

While the Calls SDK is accessible via a singleton pattern through the public interface, we decided to create a separate interface internally to make our code independently testable.

Testing a singleton instance is extremely difficult because multiple test cases may share a single resource, creating side effects that may cause the tests to fail.

Therefore, we chose to expose our public interfaces and manage internal resources using an injection pattern, as shown below:

```swift
public class SendBirdCall {
    private static var main = SendBirdCallMain()

    public static func authenticate() {
        main.authenticate()
    }
}

private class SendBirdCallMain {
    ...
}
```

As a result, we can create multiple instances of SendBirdCallMain and, effectively, multiple instances of SDKs. With two objects acting as a caller and a callee (adding more users as needed), we are able to simulate and test the overall flow of the SDK.
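A minimal sketch of such a caller and callee scenario might look like the following. It assumes that each instance receives its signaling channel through the initializer and that the server can be replaced by an in-memory stand-in; `CallMain`, `InMemorySignaling`, and the state names are hypothetical and only illustrate the idea, not the SDK's real internals.

```swift
// Hypothetical sketch; these types are illustrative, not SDK internals.

// Stands in for the signaling server and routes dial events in memory.
final class InMemorySignaling {
    private var dialHandlers: [String: (String) -> Void] = [:]

    func register(userId: String, onDial: @escaping (String) -> Void) {
        dialHandlers[userId] = onDial
    }

    // Returns false when the callee is not registered.
    func deliverDial(from callerId: String, to calleeId: String) -> Bool {
        guard let handler = dialHandlers[calleeId] else { return false }
        handler(callerId)
        return true
    }
}

// Instance-based core: each object owns its own state, so test cases
// do not share resources the way they would with a singleton.
final class CallMain {
    enum State: Equatable {
        case idle
        case dialing(String)
        case ringing(String)
    }

    let userId: String
    private(set) var state: State = .idle
    private let signaling: InMemorySignaling

    init(userId: String, signaling: InMemorySignaling) {
        self.userId = userId
        self.signaling = signaling
        // Weak capture avoids a retain cycle between instance and signaling.
        signaling.register(userId: userId) { [weak self] callerId in
            self?.state = .ringing(callerId)
        }
    }

    func dial(_ calleeId: String) {
        if signaling.deliverDial(from: userId, to: calleeId) {
            state = .dialing(calleeId)
        }
    }
}

// Two independent "SDK instances" in one process act as caller and callee.
let signaling = InMemorySignaling()
let caller = CallMain(userId: "caller", signaling: signaling)
let callee = CallMain(userId: "callee", signaling: signaling)
caller.dial("callee")
assert(caller.state == .dialing("callee"))
assert(callee.state == .ringing("caller"))
```

Each test can create its own signaling object and instances, so state from one scenario cannot leak into the next.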

Planning your test design strategy before building your architecture is essential in order to avoid costly and time-consuming changes after the fact.

Testing media

Sendbird Calls is a product that enables users to make video and audio calls with other users around the world.

Because our SDK involves logic that handles both audio and video media, it is important to test such capabilities as well. However, without a physical device and a physical observer, it is difficult to test the integrity of the audio and video.

To solve this, we created a separate media test suite that tests the media handling of our SDK.

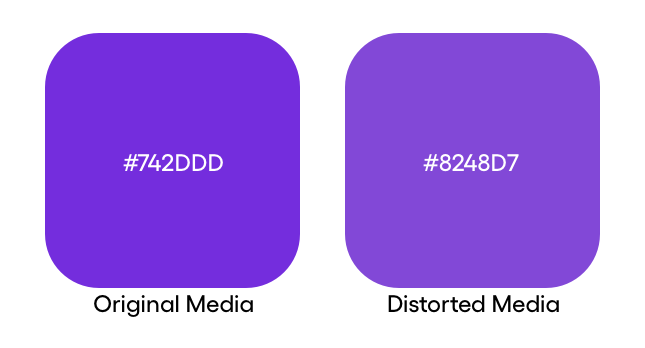

For example, to test the integrity of video quality, we inject a color-changing video into the WebRTC stream of a user and compare its colors with those in the recipient’s video. To compare pixels correctly despite the color degradation caused by codecs and compression, we extract colors from each frame and convert them to the CIELAB color space, which lets us compare colors based on how the human eye perceives them. For reference, see this relevant Stack Overflow thread.
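The color comparison can be sketched as follows. This is only an illustration under stated assumptions: it uses the standard sRGB to CIELAB conversion with a D65 white point and the simple CIE76 distance (Euclidean distance in Lab space), which is one common choice; the post does not say which difference formula or threshold the actual test suite uses. The type names, function names, and the tolerance of 10 are all made up for this example.

```swift
import Foundation

// sRGB color with components in 0...1, and its CIELAB counterpart.
struct RGB { var r, g, b: Double }
struct Lab { var l, a, b: Double }

// Undo the sRGB gamma curve to get linear light.
func srgbToLinear(_ c: Double) -> Double {
    return c <= 0.04045 ? c / 12.92 : pow((c + 0.055) / 1.055, 2.4)
}

// Nonlinear function used by the XYZ to Lab conversion.
func labComponent(_ t: Double) -> Double {
    let delta = 6.0 / 29.0
    return t > delta * delta * delta ? cbrt(t) : t / (3 * delta * delta) + 4.0 / 29.0
}

func toLab(_ c: RGB) -> Lab {
    let r = srgbToLinear(c.r), g = srgbToLinear(c.g), b = srgbToLinear(c.b)
    // Linear sRGB to CIE XYZ, normalized by the D65 white point.
    let x = (0.4124564 * r + 0.3575761 * g + 0.1804375 * b) / 0.95047
    let y = (0.2126729 * r + 0.7151522 * g + 0.0721750 * b) / 1.0
    let z = (0.0193339 * r + 0.1191920 * g + 0.9503041 * b) / 1.08883
    let fx = labComponent(x), fy = labComponent(y), fz = labComponent(z)
    return Lab(l: 116 * fy - 16, a: 500 * (fx - fy), b: 200 * (fy - fz))
}

// CIE76 color difference: Euclidean distance in Lab space.
func deltaE(_ lhs: Lab, _ rhs: Lab) -> Double {
    let dl = lhs.l - rhs.l, da = lhs.a - rhs.a, db = lhs.b - rhs.b
    return (dl * dl + da * da + db * db).squareRoot()
}

// A sent frame color and a slightly degraded received color should match
// within an assumed tolerance.
let sent = toLab(RGB(r: 1.0, g: 0.0, b: 0.0))
let received = toLab(RGB(r: 0.98, g: 0.02, b: 0.01))
let tolerance = 10.0 // Assumed threshold, chosen only for this example.
assert(deltaE(sent, received) < tolerance)
```

Comparing in Lab space rather than raw RGB means that small codec-induced shifts produce small distances, while a genuinely wrong color produces a large one.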

Using more sophisticated methods like this to test media integrity ensures that the quality of our service does not degrade as we continuously modify our SDK.

Remaining tasks

Given the breadth of our platform, there are a few more areas we are planning to address in the near future.

Unavailable test interfaces

Because we run tests in a CI environment, we cannot test features like push notifications. To cover them, we would have to test our SDK on a physical device, or use a service that hosts physical devices for us, such as Firebase Test Lab.

We are also looking for ways to easily check if our customers have correctly integrated our SDK into their apps. With a few test scenarios that can be easily adapted into our clients’ test code, we should be able to diagnose the integration of our SDK.

Unreliable UI testing

In addition to the unit tests and scenario tests, we must also make sure that our SDK works well inside an app, which is something that can’t really be done automatically.

To address this, prior to deployment, we load our SDK into our own Quickstart Application and test our features.

Our SDK is then tested using a checklist that looks like this:

```
### Audio Call

- **Caller** can `dial` a call & `hear` **callee**
- **Callee** can `receive` a call & `hear` **caller**

…

### Push Notification

- Push is received when the app is **terminated**
- Push is received when the app is in the **background**
```

Quality assurance testing

With an SDK that provides multimedia communication support for our customers, we are constantly working to improve the quality of the calls that connect our end users.

Due to the complex nature of the visual and auditory systems of humans, it is extremely difficult to objectively measure the quality of video and audio calls. Nevertheless, we are currently researching and developing various methodologies that accurately evaluate the perceived quality of our video and audio services. By establishing such quality assurance measures, we will be able to continually improve our services.

Conclusion

This blog post described a few methods that the Sendbird Calls iOS SDK team uses to improve the stability of the SDK.

In short, our testing methodology includes:

- Planning for testability during architecture design

- Mocking many modules to control the flow of the program

- Writing broad tests that evaluate end-to-end user flow

- Testing all code paths

- Automating testing

As a result, the Sendbird Calls iOS SDK currently maintains a code coverage of around 90%. While not perfect, this allows us to develop our SDK rapidly and to catch and prevent errors in the future. Further, having a good number of test cases allows us to avoid testing many features on real devices and to automate many of the tests instead.

While it may be difficult to write tests at first, writing good test code allows you to deliver better and safer products.