How to master prompt engineering, function calling, and the use of GPT

Generative AI, particularly in the form of chat services like ChatGPT, is rapidly gaining popularity. If you've ever wondered how these services answer questions and what principles they operate on, the concept of a 'prompt' is central to understanding them.

What is prompt engineering?

Prompt engineering is the practice of designing and optimizing inputs (prompts) to elicit the most effective or desired outputs from artificial intelligence models, particularly those based on large language models (LLMs) like GPT (Generative Pre-trained Transformer). Prompt engineering for AI chatbots is a multidisciplinary field that incorporates knowledge and methods from three main areas: linguistics, psychology, and computer science.

Prompt engineering merges linguistics to craft clear and meaningful prompts, psychology to align AI responses with human expectations, and computer science to understand and guide the AI's processing capabilities. This multidisciplinary approach ensures that AI systems can accurately interpret and respond to human language and intentions, facilitating more effective and intuitive human-AI interactions.

Why prompt engineering matters

By meticulously crafting prompts, you can guide AI models to produce responses that are not only relevant and coherent but also creative and contextually appropriate. The discipline of prompt engineering is crucial in leveraging the full capabilities of LLM chatbots, making it possible to tailor AI responses for a wide range of applications, including education, customer support, marketing, and sales, and for tasks ranging from conversation to complex problem-solving and decision support. As AI systems become increasingly integrated into daily life and business operations, prompt engineering emerges as a critical skill in the AI toolkit, enabling more nuanced and compelling human-AI interactions.

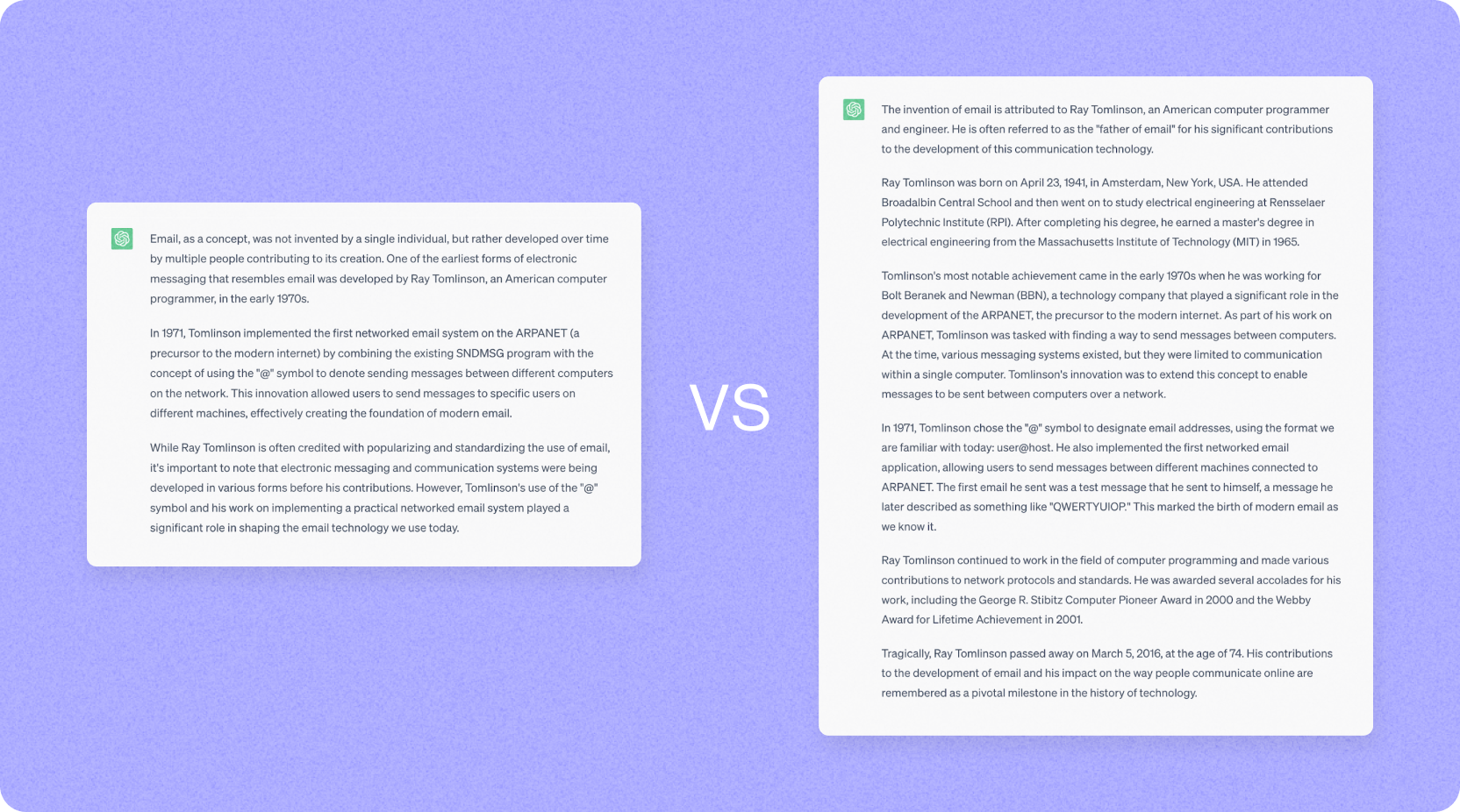

Just as search engine results vary with the choice of keywords, the quality and relevance of AI responses depend heavily on how questions are framed.

For example, “Who invented the email?” and “Tell me more about who invented the email?” are close in meaning, but the latter will generally produce a more complete answer. The AI's ability to discern and respond appropriately hinges on the prompt's specificity.

How to prompt GPT efficiently

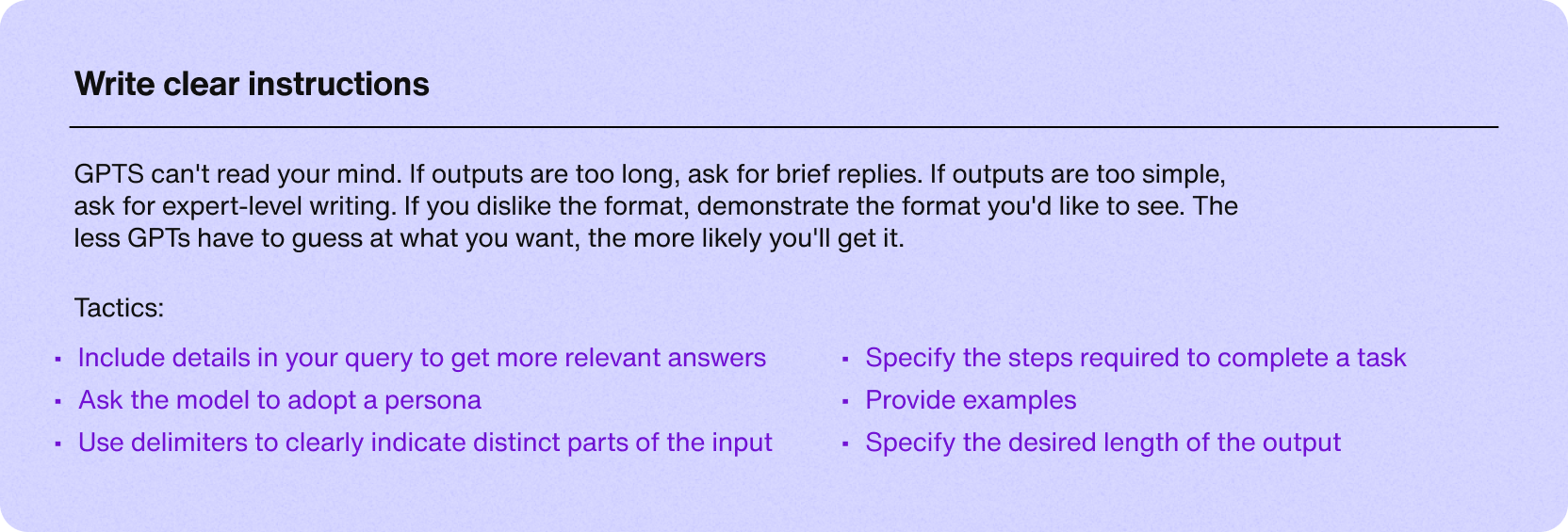

OpenAI publishes guidelines for getting better results from GPT. Key strategies include:

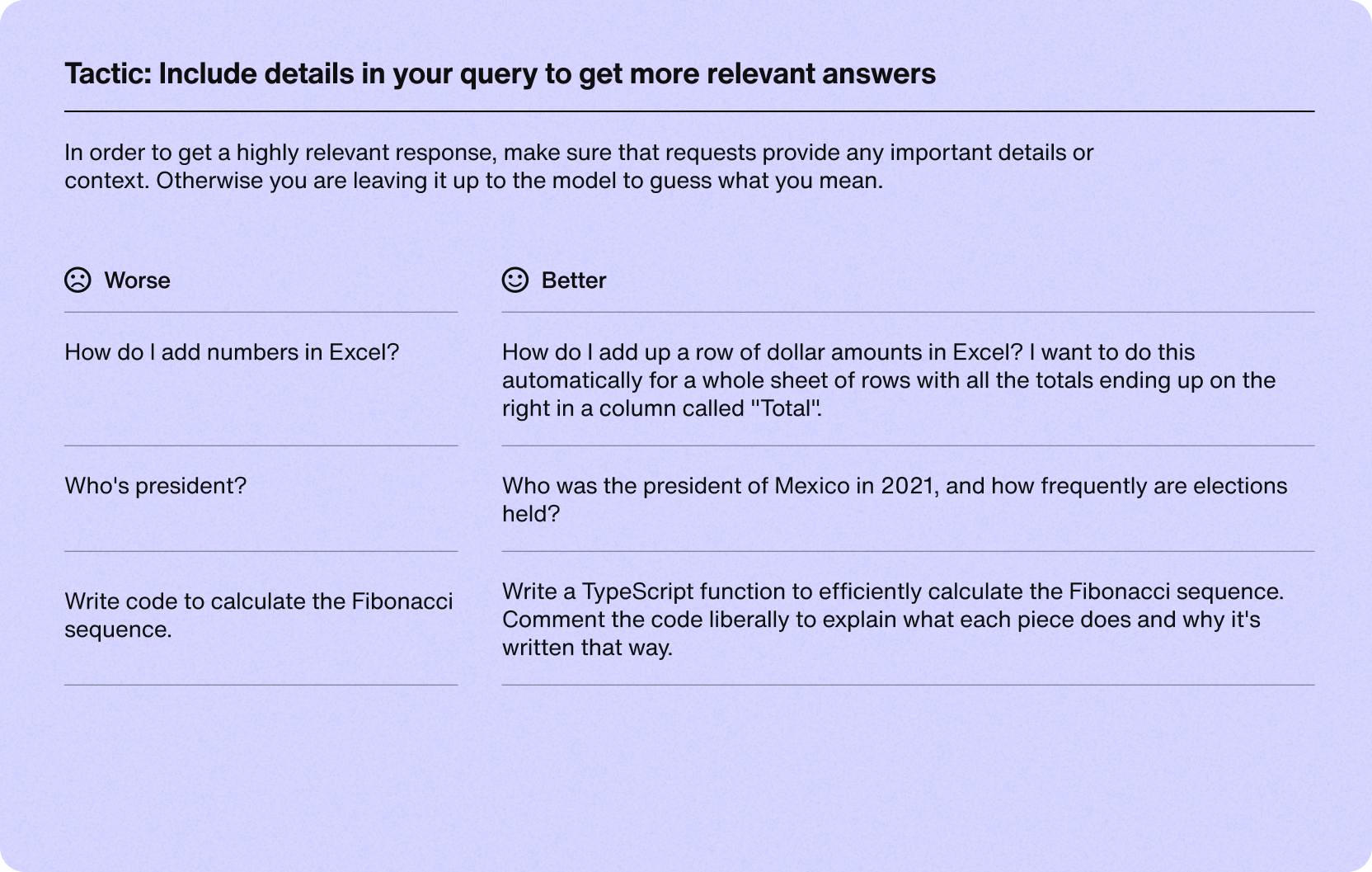

Specify detailed information to get the answer you want

GPT cannot read our minds, so it's important to state exactly what information you want.
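
As a minimal sketch of this idea, the helper below appends explicit constraints (audience, format, length) to a question so the model does not have to guess them. The function name `build_prompt` and its parameters are hypothetical and chosen only for illustration; they are not part of any OpenAI or Sendbird API.

```python
def build_prompt(question, audience=None, output_format=None, max_words=None):
    """Append explicit constraints to a question so the model need not guess them."""
    parts = [question.strip()]
    if audience:
        parts.append(f"Audience: {audience}.")
    if output_format:
        parts.append(f"Format: {output_format}.")
    if max_words:
        parts.append(f"Length: at most {max_words} words.")
    return "\n".join(parts)


# A vague prompt leaves audience, format, and length up to the model.
vague = build_prompt("Who invented email?")

# A specific prompt states each of those expectations explicitly.
specific = build_prompt(
    "Who invented email? Include the year and the key contribution.",
    audience="readers new to internet history",
    output_format="three bullet points",
    max_words=80,
)

print(specific)
```

The same question can then be reused with different constraints without rewriting the prompt by hand.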

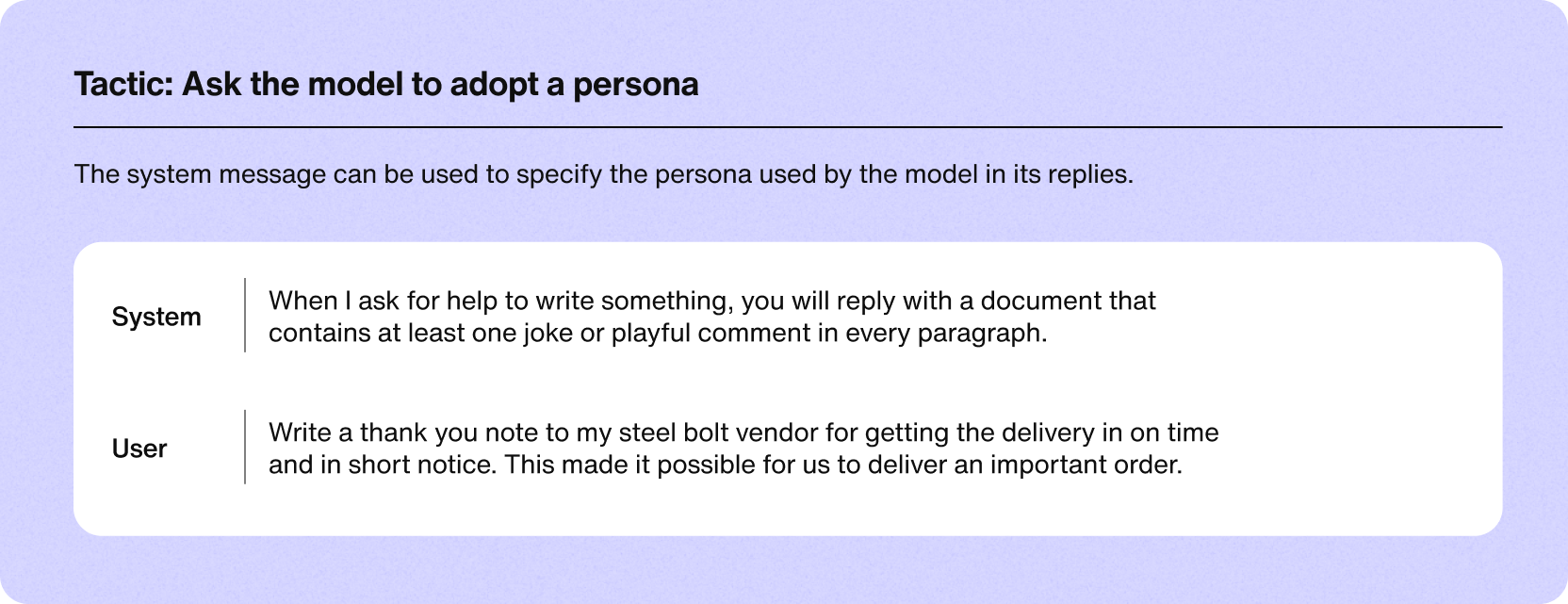

Ask the model to assume a specific role

Because GPT cannot guess the user's intent, it is essential to define the chatbot persona clearly. With Sendbird’s AI chatbot, you can specify the bot's personality or persona with the “System” input. The persona and role significantly influence the nature of the bot's responses.
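
To illustrate, here is a minimal sketch of how a persona is typically expressed in the role-based message format used by OpenAI's Chat Completions API, where a "system" message sets the role and a "user" message carries the question. The helper `build_messages` and the shoe-store persona are hypothetical, and how Sendbird's “System” input maps onto this format internally is an assumption. Sending the messages to a model is omitted.

```python
def build_messages(persona, user_question):
    """Pair a persona (system message) with the user's question."""
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": user_question},
    ]


messages = build_messages(
    "You are a friendly support agent for an online shoe store. "
    "Answer briefly and politely.",
    "Can I return sneakers after 30 days?",
)

for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Changing only the persona string changes the tone and scope of the answers while the user's question stays the same.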

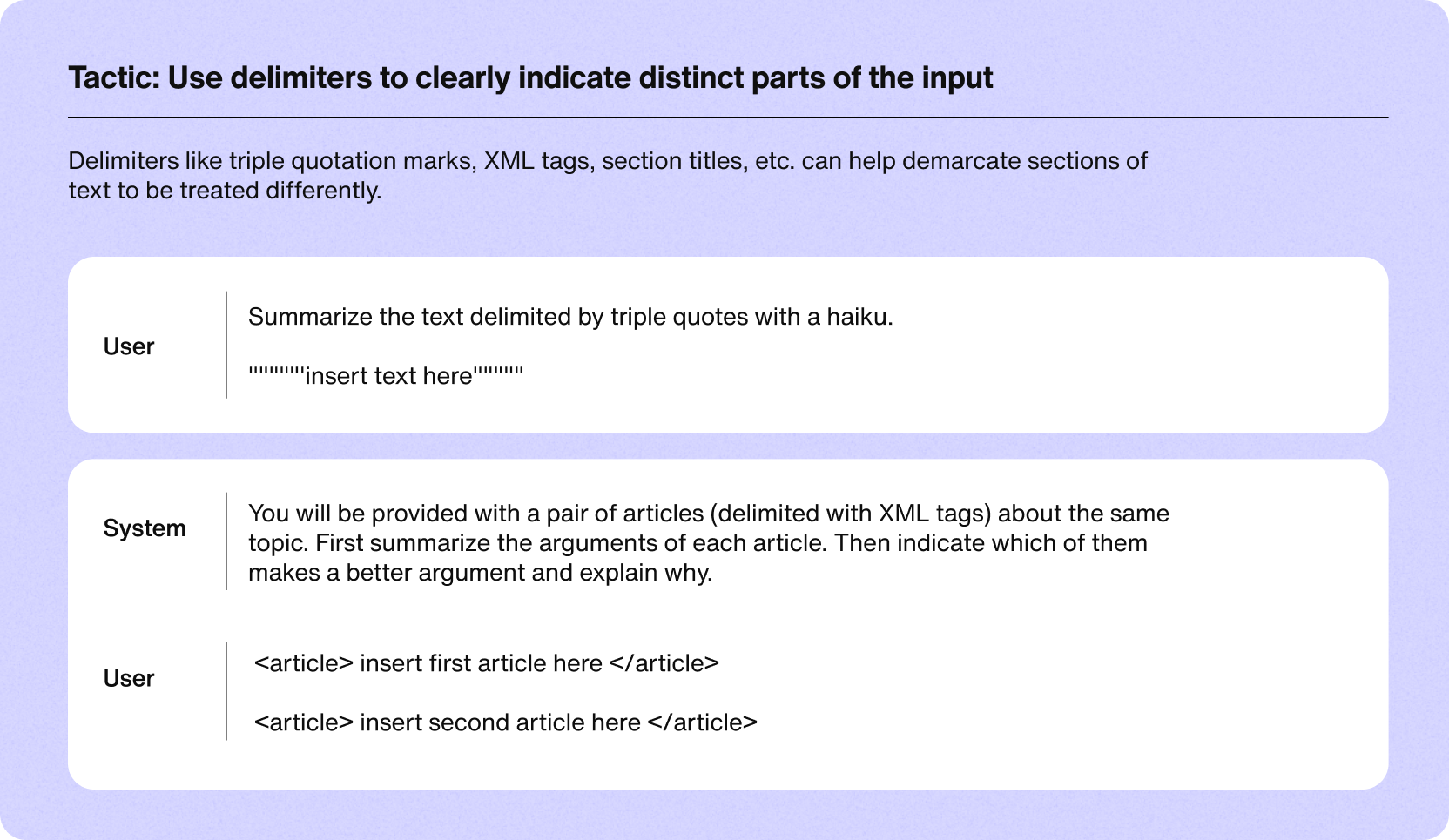

Use delimiters to highlight specific parts of input

GPT tends to handle input better when delimiters clearly mark its distinct parts, such as the instructions and the text to work on. Our AI chatbot uses separate “system” and “user” prompts to answer users' questions more accurately.
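
A minimal sketch of the delimiter technique, assuming XML-style tags as the delimiter (triple quotes or other markers would work as well). The function `wrap_with_delimiters` and the sample review text are hypothetical.

```python
def wrap_with_delimiters(instruction, text, tag="review"):
    """Separate the instruction from the text it applies to with explicit tags."""
    return f"{instruction}\n\n<{tag}>\n{text.strip()}\n</{tag}>"


prompt = wrap_with_delimiters(
    "Summarize the customer review inside the <review> tags in one sentence.",
    "The shoes arrived two days late, but the fit is perfect and they look great.",
)

print(prompt)
```

Because the instruction and the review are visibly separated, it is clear which part is the task and which part is the material to process.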

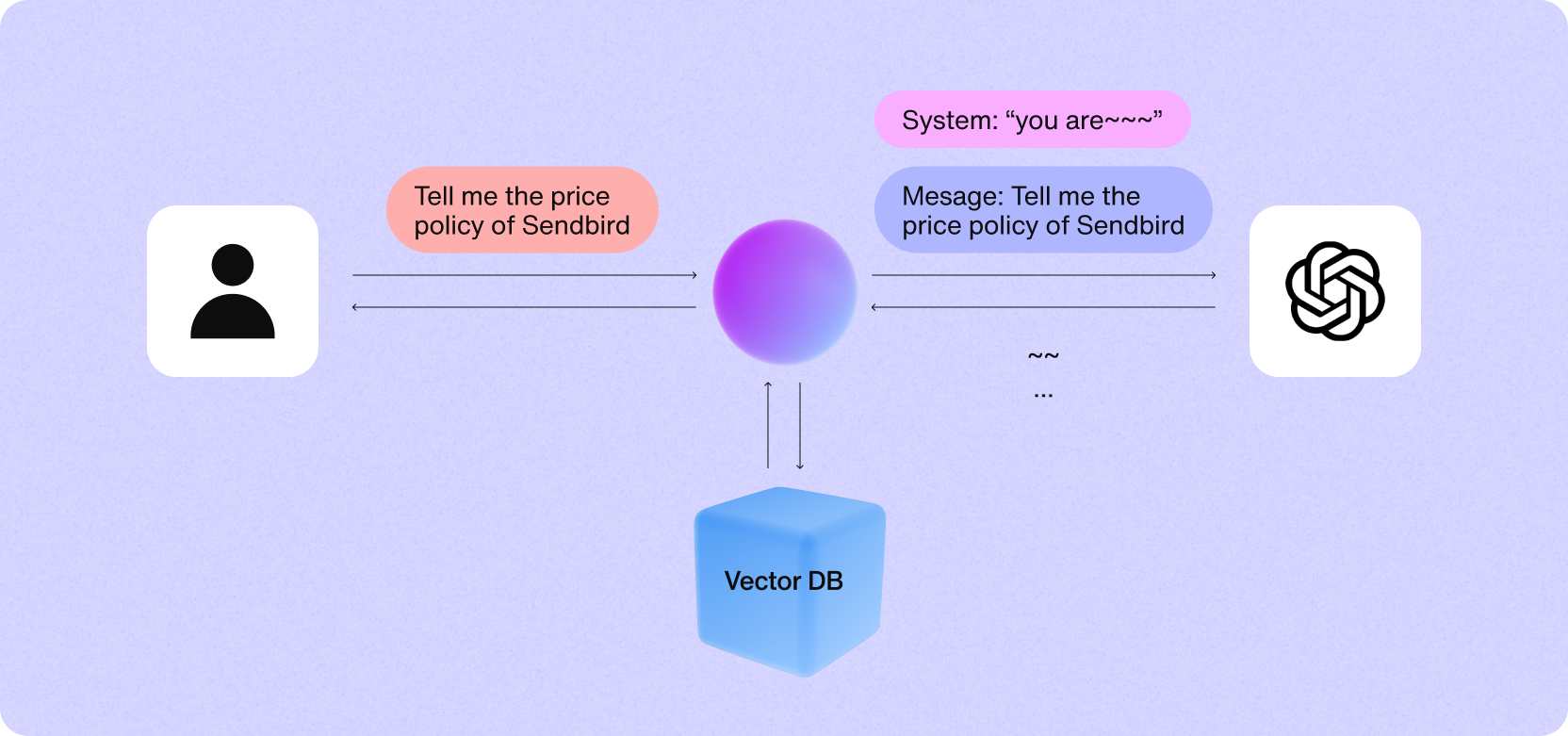

How to circumvent GPT’s “system message” limitations and enhance your AI chatbot with the RAG principle

We just learned how to define the “system_message” with OpenAI’s GPT API to customize our AI chatbot. There is one problem, however: the number of tokens available for the “system_message” is limited. This limits how much instruction we can provide and, thus, how customized our AI chatbot can be. To work around this, Sendbird uses vector database technology to dynamically change the “system_message” so that it includes the most appropriate knowledge base content available. This technique, retrieval-augmented generation (RAG), allows for much better answers and a more sophisticated AI chatbot.
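
The retrieval step can be sketched as follows. This is a toy illustration, not Sendbird's implementation: word counts stand in for the embeddings that an embedding model and a vector database would provide, and the knowledge base entries, function names, and prompt wording are all hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; a real one would live in a vector database.
KNOWLEDGE_BASE = [
    "Returns: items can be returned within 30 days with a receipt.",
    "Shipping: standard delivery takes 3 to 5 business days.",
    "Payment: we accept credit cards and bank transfers.",
]


def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:top_k]


def build_system_message(base_instructions, question, documents):
    """Insert only the most relevant content into the system message."""
    context = "\n".join(retrieve(question, documents))
    return f"{base_instructions}\n\nUse only this context to answer:\n{context}"


system_message = build_system_message(
    "You are a support agent for an online store.",
    "How many days does shipping take?",
    KNOWLEDGE_BASE,
)
print(system_message)
```

Only the best-matching entry is placed in the system message, so the token budget is spent on content relevant to the current question rather than on the whole knowledge base.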

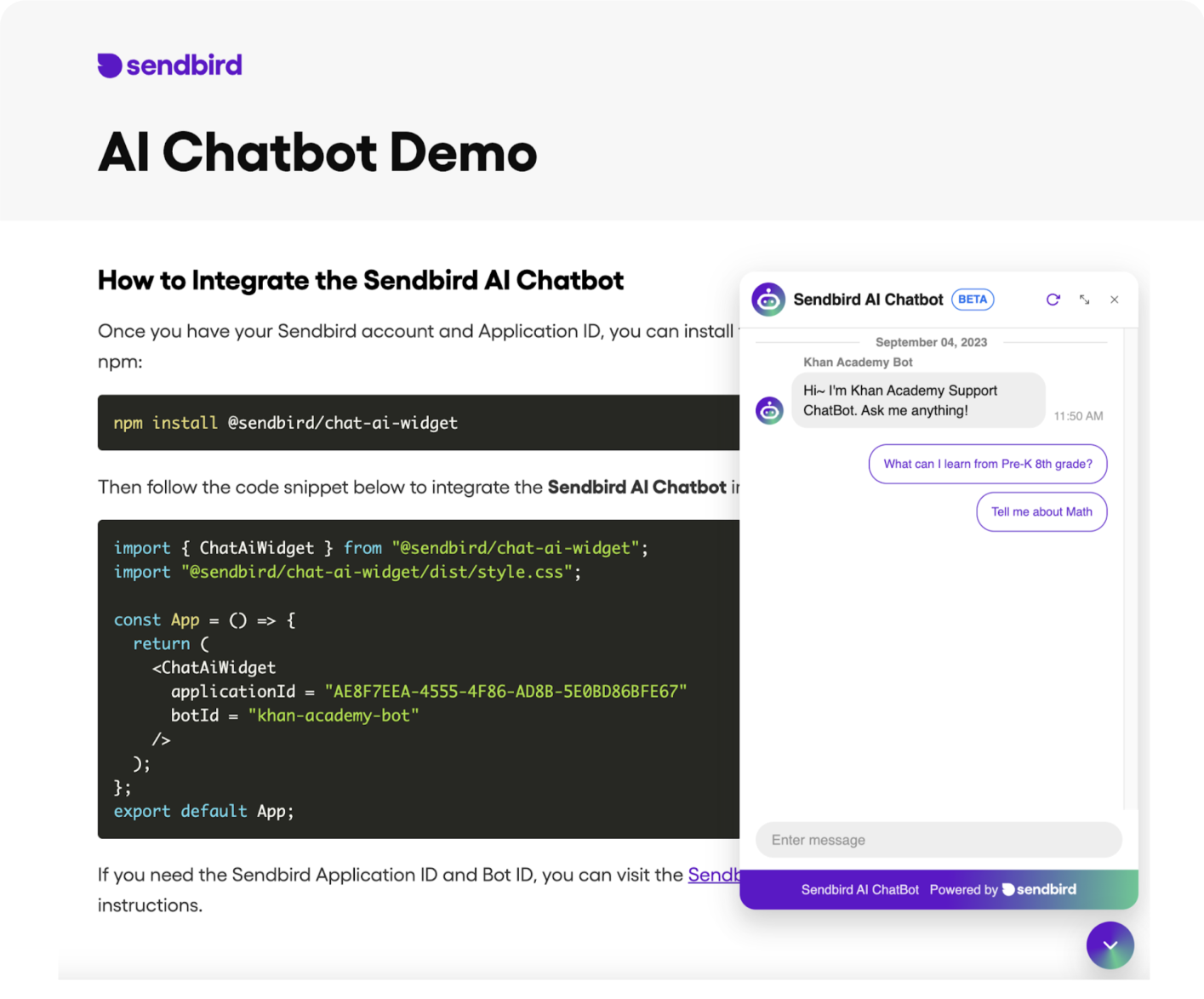

How to deploy an AI chatbot to your website in a few clicks

Once you have built an advanced AI chatbot, you need to deploy it on your website. If you are a developer, you can check our AI chatbot widget tutorial and download the widget code on GitHub. There is also an even simpler way: our free AI chatbot trial. The onboarding process will guide you through 5 simple steps, including building an AI widget with no code via our AI chatbot builder. The chatbot builder lets you create an iframe snippet that you can copy and paste directly into your website.

Now that we have covered prompt engineering and shown you a simple way to deploy your chatbot on your website, let's discuss Function Calls, an API-driven method for your AI chatbot to retrieve structured data from third-party systems such as a database.

What is Function Calling?

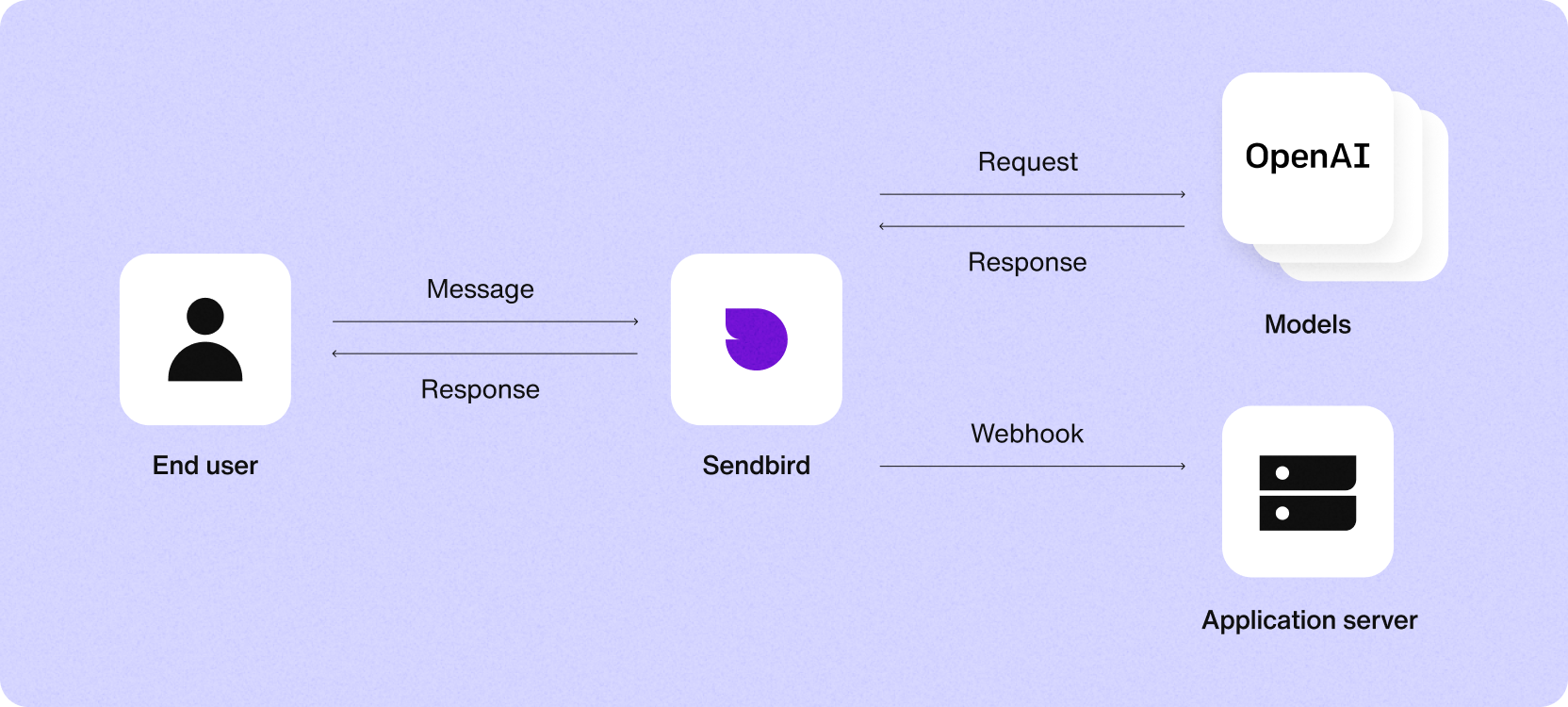

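Function calling with platforms like OpenAI's GPT refers to the capability of the AI to request the execution of external functions or access to external data sources in real time during a conversation. The model does not run the function itself: it returns the name of a function along with JSON arguments, the application executes the call, and the result is passed back to the model so it can compose its answer. This feature allows the AI to go beyond its pre-existing knowledge base and interact with external APIs or databases to fetch or process information dynamically based on user requests. For example, if a user requests real-time weather information, GPT can respond with weather data that the application fetches from a weather service's API.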
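
The flow can be sketched without any network calls. The tool description below follows the JSON-schema style of the `tools` parameter in OpenAI's Chat Completions API; everything else is an assumption for illustration: `get_weather` returns hard-coded data instead of calling a real weather service, `handle_tool_call` is a hypothetical helper, and the model's output is simulated rather than fetched from the API.

```python
import json

# Tool description the application would send to the model alongside the messages.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def get_weather(city):
    """Hypothetical stand-in for a real weather API call (hard-coded data)."""
    fake_data = {"Seoul": {"temp_c": 21, "condition": "clear"}}
    return fake_data.get(city, {"error": f"no data for {city}"})


# Functions the application is willing to run on the model's behalf.
AVAILABLE_FUNCTIONS = {"get_weather": get_weather}


def handle_tool_call(name, arguments_json):
    """Run the function the model asked for and return the result as JSON."""
    func = AVAILABLE_FUNCTIONS.get(name)
    if func is None:
        return json.dumps({"error": f"unknown function {name}"})
    args = json.loads(arguments_json)
    return json.dumps(func(**args))


# Simulated model output: a function name plus JSON-encoded arguments.
simulated_call = {"name": "get_weather", "arguments": '{"city": "Seoul"}'}
result = handle_tool_call(simulated_call["name"], simulated_call["arguments"])
print(result)
```

In a real integration, `result` would be sent back to the model as a tool message so that it can phrase the final answer for the user. Looking functions up in an explicit registry keeps the application in control of which functions can be executed.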

GPT Function Calls use cases

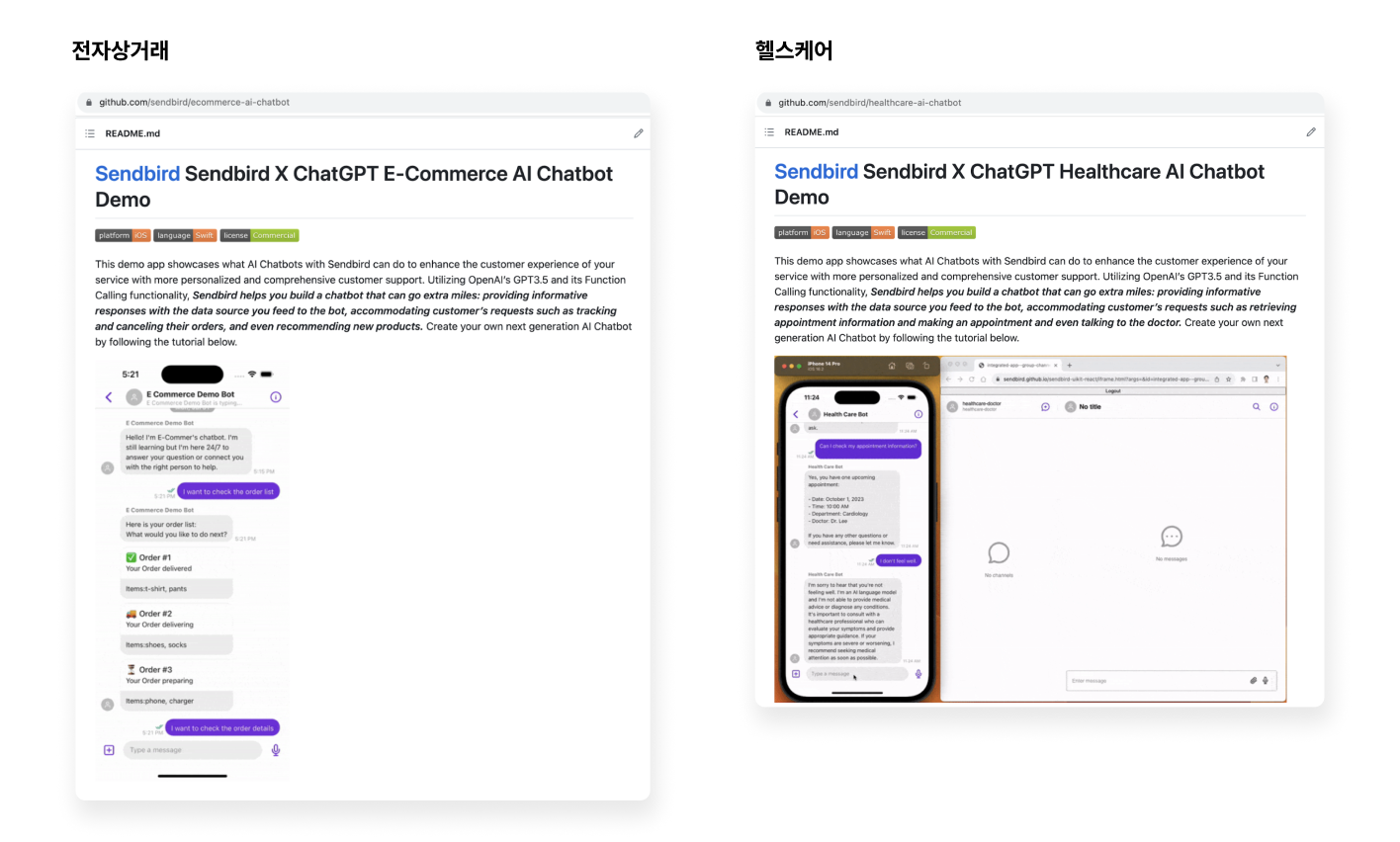

The GPT Function Calls feature enables AI chatbots in fields such as ecommerce and healthcare to access and provide current information interactively.

For ecommerce, a chatbot can instantly check and share product availability, update pricing, and offer shipping details during a conversation with a customer. It can also suggest products based on the customer's preferences and purchase history, making shopping more convenient and personalized.

In healthcare, chatbots can use Function Calls to provide patients with immediate information on doctor availability, schedule appointments, and offer guidance on health queries. They can also remind patients about medication schedules or upcoming health check-ups, ensuring critical information is communicated promptly and efficiently.

Visit our GitHub repos for ecommerce and healthcare AI chatbot examples:

- ecommerce: https://github.com/sendbird/ecommerce-ai-chatbot

- healthcare: https://github.com/sendbird/healthcare-ai-chatbot

How to build custom GPTs for your websites with Sendbird

In this article, we illustrated how Sendbird’s AI chatbot can use OpenAI’s technology as a foundation and improve it to achieve a more sophisticated AI chatbot. We also introduced OpenAI’s function calling feature and provided sample code for the healthcare and ecommerce use cases. Finally, we provided you with code and a no-code alternative to create your own AI chatbot widget for your website. Today, you can build an AI chatbot for free and experiment for 30 days before choosing one of our many AI chatbot plans.

Sendbird gives you a unique option to deploy on web and mobile apps with our flexible chat SDKs and easy-to-use chat UI Kits. The Sendbird AI chatbot offers a rich feature set, including content ingestion, workflows, custom responses, Function Calls, and more, to improve your AI chatbot responses. Also, our AI chatbot integrates seamlessly with our customer service software solutions, Sendbird Desk and Sendbird's Salesforce Connector. Don’t wait any longer to boost your customer service experience with AI chatbots across support, marketing, and sales. It only takes minutes.