6 essential community guidelines for mobile content moderation

Mobile communities foster connection, creativity, and collaboration with unparalleled accessibility. But as with any thriving ecosystem, keeping things in balance takes a bit of effort.

Building a thriving, branded, in-app mobile community takes content moderators, possibly a community manager, and an efficient content moderation system.

Read on for the six essential community guidelines to moderate content in your app.

Why do messaging apps need content moderation?

Content moderation makes messaging apps a go-to spot for users and advertisers. It's all about keeping things respectful and on-topic so everyone feels safe and has a good time communicating with each other.

By handling sketchy content, like hate speech or bullying, moderation ensures that users can hang out and share information without worrying about getting tangled up in negativity, and that potential advertisers don't see the space as volatile.

But it's not just about protecting conversations. Moderation also helps messaging apps comply with the laws and regulations of each region they operate in. This way, the platform protects its reputation and stays out of legal hot water.

Messaging apps that succeed at moderation are like magnets, pulling in more users and keeping them hooked.

Understanding the importance of user safety in-app

Happy users feel safe, valued, and engaged with your product. They know that when they send a message through your app, they can share and connect without worrying about trouble.

Many threats need to be addressed before you can run a vibrant and inclusive community space within your mobile app. Examples include:

Cyberbullying and harassment: Offensive language, threats, or targeted attacks against individuals can lead to a hostile environment, causing emotional distress and harm.

Hate speech and discrimination: Content that promotes or incites hatred, violence, or discrimination based on race, ethnicity, religion, gender, sexual orientation, or other protected attributes can be harmful and divisive.

Graphic violence and gore: Graphic images or videos depicting physical harm, accidents, or injuries can disturb users and trigger emotional distress.

Adult content and nudity: Explicit or sexual content can be inappropriate for certain users, particularly minors, and may breach community guidelines or local laws.

Misinformation and fake news: False or misleading information can have significant negative consequences, particularly in public health, politics, or finance.

Spam and phishing: Unsolicited messages, scams, or attempts to trick users into revealing sensitive information can compromise user security and degrade the overall app experience.

Promotion of illegal activities: Content promoting illegal activities, such as drug use, human trafficking, or extremist propaganda, poses a significant risk to user safety and may violate laws.

Privacy violations: Personal information, such as addresses, phone numbers, or financial details, should not be shared without consent to protect user privacy and safety.

Intellectual property infringement: Sharing copyrighted material, trademarks, or trade secrets without permission can lead to legal issues and harm original creators.

6 community guidelines for mobile content moderation

So, what does it take to build a safe and inclusive online community where everyone feels welcome? Here are six community guidelines that will help you build the foundations of a safer mobile community space:

1. Define and share community guidelines

Clearly define acceptable behavior and content within your app and communicate these guidelines to your users. Establish rules regarding offensive language, harassment, hate speech, and other inappropriate content.

Users should know the consequences of breaching moderation guidelines, such as content removal, temporary suspensions, or permanent bans. Consistent enforcement of these rules is also essential for maintaining a healthy community.
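One way to keep enforcement consistent is to encode the escalation path so every moderator applies the same consequence for the same history. The sketch below is only an illustration: the thresholds and the `next_action` helper are hypothetical, and the three actions simply mirror the consequences listed above.

```python
from enum import Enum


class Action(Enum):
    REMOVE_CONTENT = "remove_content"
    TEMPORARY_SUSPENSION = "temporary_suspension"
    PERMANENT_BAN = "permanent_ban"


# Hypothetical thresholds (total violations, including the new one).
# Tune these to match your own published guidelines.
SUSPENSION_AFTER = 2
BAN_AFTER = 4


def next_action(prior_violations: int) -> Action:
    """Return the consequence for a new violation, given the user's prior count."""
    total = prior_violations + 1
    if total >= BAN_AFTER:
        return Action.PERMANENT_BAN
    if total >= SUSPENSION_AFTER:
        return Action.TEMPORARY_SUSPENSION
    return Action.REMOVE_CONTENT
```

With these example thresholds, a first violation only removes the content, a second or third leads to a temporary suspension, and a fourth leads to a permanent ban.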

2. Put user safety above all else

Ensure user safety is the top priority when moderating content. This includes protecting users from cyberbullying, harassment, and exposure to harmful content.

Develop strategies for addressing user-generated content that poses a risk to individual users or the community. Implement privacy measures to safeguard personal information and educate users on best practices for maintaining their security.

3. Apply content filtering

Employ content filtering technology to identify and remove inappropriate content automatically. This can include text, images, and videos that violate your community guidelines.

Content filtering can be achieved using AI-powered algorithms, keyword blacklists, or a combination of both. Regularly update your filtering tools to improve their accuracy and effectiveness in identifying harmful content.
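As a rough illustration of the keyword-list approach, here is a minimal sketch of a text filter. The terms in `BLOCKED_TERMS` and the helper names are placeholders invented for this example; a production list would be far longer, regularly updated, and usually paired with an AI-based classifier.

```python
import re
from typing import Iterable, List

# Placeholder terms for illustration only.
BLOCKED_TERMS = {"spamword", "badword"}


def build_pattern(terms: Iterable[str]) -> "re.Pattern[str]":
    """Compile one case-insensitive pattern that matches any term as a whole word."""
    escaped = sorted((re.escape(t) for t in terms), key=len, reverse=True)
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


PATTERN = build_pattern(BLOCKED_TERMS)


def find_blocked_terms(message: str) -> List[str]:
    """Return the blocked terms found in the message, lowercased."""
    return [m.group(0).lower() for m in PATTERN.finditer(message)]


def mask_message(message: str) -> str:
    """Replace each blocked term with asterisks of the same length."""
    return PATTERN.sub(lambda m: "*" * len(m.group(0)), message)
```

Whole-word matching avoids flagging harmless words that merely contain a blocked term, but it also misses deliberate misspellings, which is one reason keyword lists are often combined with other techniques.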

4. Use a moderation API

Implement a moderation API to automate and streamline the moderation process. A moderation API can help you detect and remove harmful content, identify spam and phishing attempts, and flag content for human review.

Use a third-party service or develop your own API, and integrate it with your app's backend for a seamless moderation experience.
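To make the backend integration more concrete, the sketch below routes each message to one of three outcomes based on scores from a moderation service. Everything here is an assumption for illustration: `classify` stands in for whichever API you call, the score format (category name mapped to a value between 0 and 1) and both thresholds are hypothetical, and `fake_classifier` is a stub rather than a real service.

```python
from typing import Callable, Dict

Scores = Dict[str, float]  # assumed format: category -> score in [0, 1]

# Hypothetical thresholds; calibrate against your own data.
REVIEW_THRESHOLD = 0.5
BLOCK_THRESHOLD = 0.9


def decide(scores: Scores) -> str:
    """Map category scores to 'allow', 'review', or 'block' using the highest score."""
    worst = max(scores.values(), default=0.0)
    if worst >= BLOCK_THRESHOLD:
        return "block"
    if worst >= REVIEW_THRESHOLD:
        return "review"
    return "allow"


def moderate(message: str, classify: Callable[[str], Scores]) -> str:
    """Score a message with the given classifier and return a decision."""
    try:
        scores = classify(message)
    except Exception:
        # If the moderation service fails, send the message to human review
        # instead of silently allowing it.
        return "review"
    return decide(scores)


def fake_classifier(message: str) -> Scores:
    """Stub standing in for a real moderation API call."""
    return {"spam": 0.95 if "free money" in message.lower() else 0.1}
```

Passing the classifier in as a function keeps the decision logic independent of any one vendor, so a third-party service can later be swapped for an in-house one without touching the thresholds.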

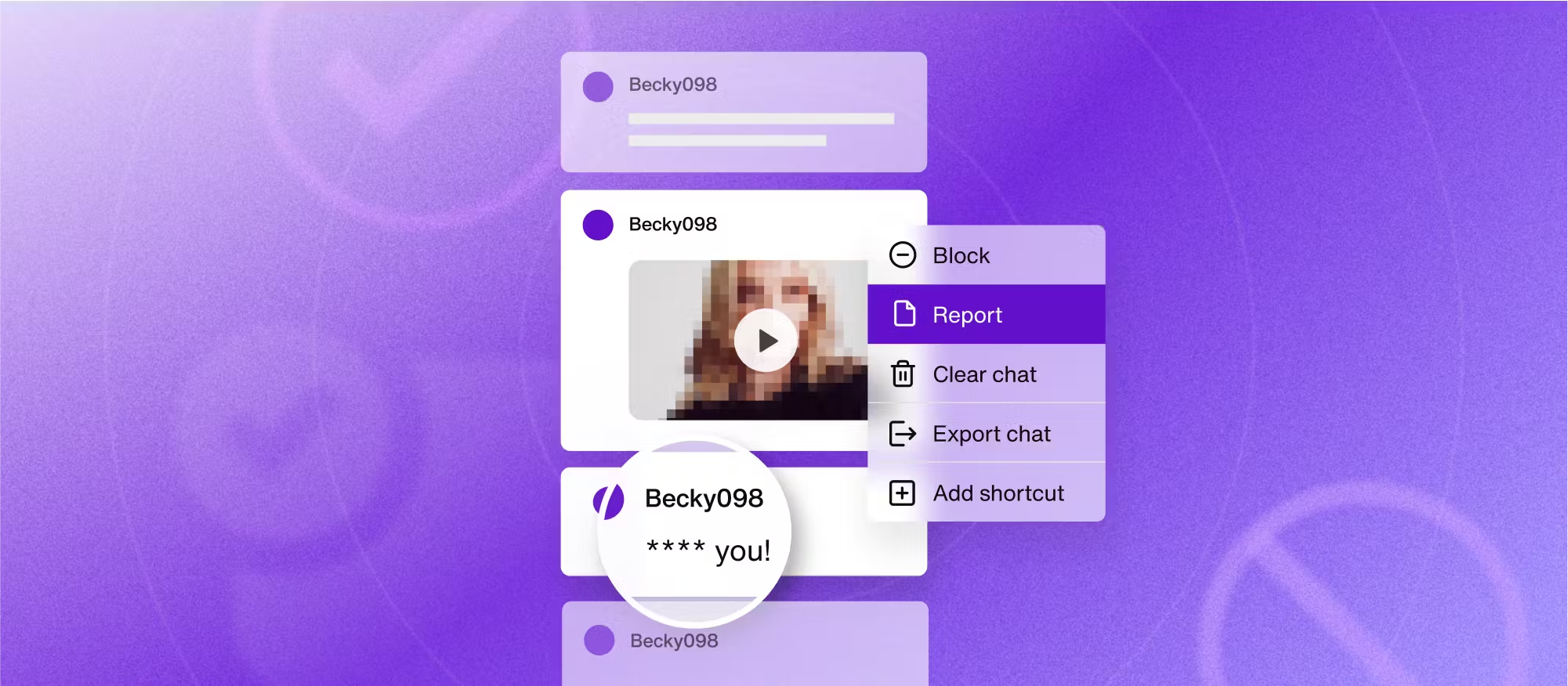

5. Enlist your community for chat moderation

Encourage your user community to actively participate in chat moderation by reporting violations of the community guidelines.

Implement clear abuse-reporting mechanisms that allow users to flag inappropriate content or behavior. This crowd-sourced approach can help your moderation team identify and address issues more quickly and efficiently.
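A reporting mechanism can be as simple as counting distinct reporters per message and escalating to the moderation team once a threshold is reached. The following is a minimal in-memory sketch; the `ReportTracker` class and its default threshold are hypothetical, and a real app would persist reports in a database.

```python
from collections import defaultdict
from typing import Dict, Set

REVIEW_AFTER_REPORTS = 3  # hypothetical default; adjust to your community size


class ReportTracker:
    """Tracks which users reported which messages (in memory, for illustration)."""

    def __init__(self, threshold: int = REVIEW_AFTER_REPORTS) -> None:
        self.threshold = threshold
        self._reporters: Dict[str, Set[str]] = defaultdict(set)

    def report(self, message_id: str, reporter_id: str) -> bool:
        """Record a report and return True if the message should go to human review."""
        # A set ignores repeat reports from the same user.
        self._reporters[message_id].add(reporter_id)
        return len(self._reporters[message_id]) >= self.threshold
```

Counting distinct reporters rather than raw reports makes it harder for a single user to push a message into review by reporting it repeatedly.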

6. Support your moderation team

Provide your moderation team with the necessary training, resources, and tools to enforce community guidelines effectively.

Establish transparent processes for handling different types of content and user behavior. Encourage open communication among team members and offer support for dealing with content moderation challenges, such as exposure to harmful content or conflicts within the community.

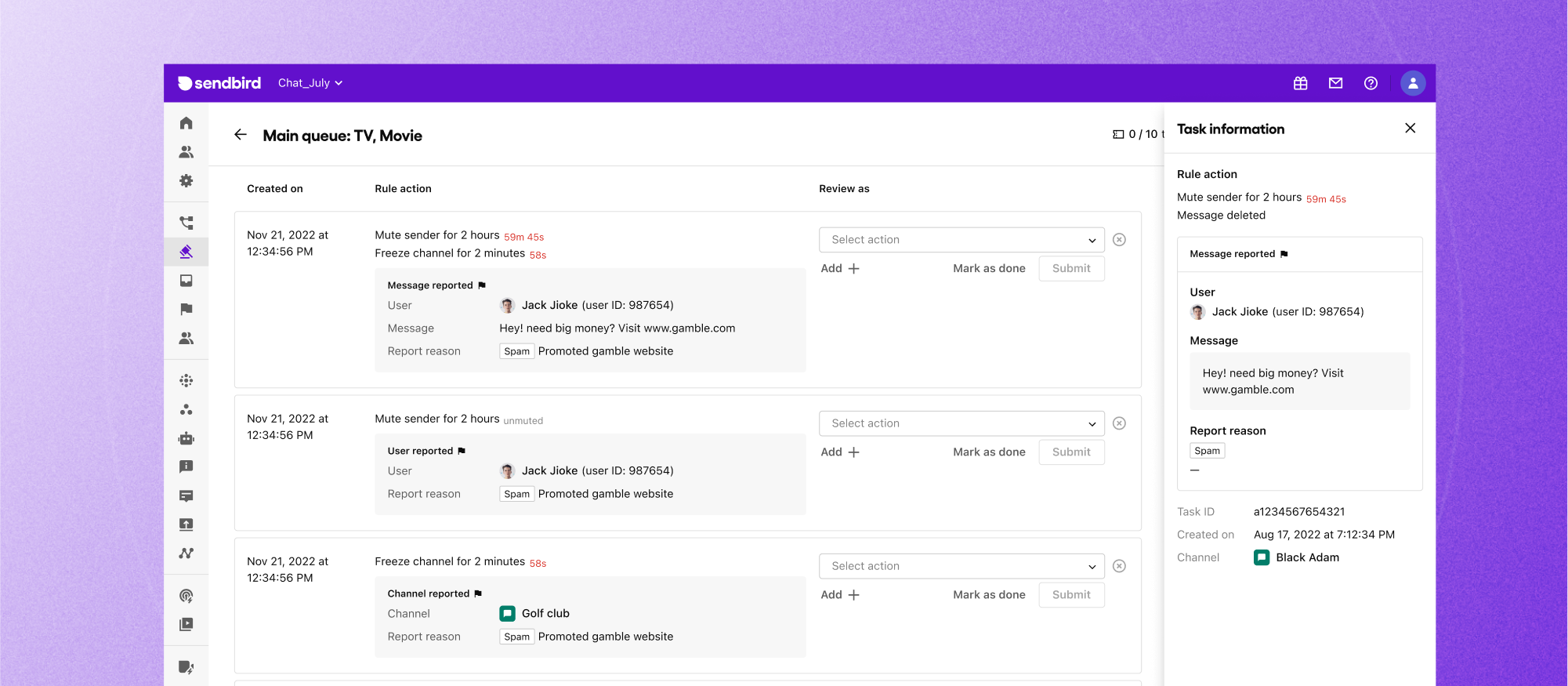

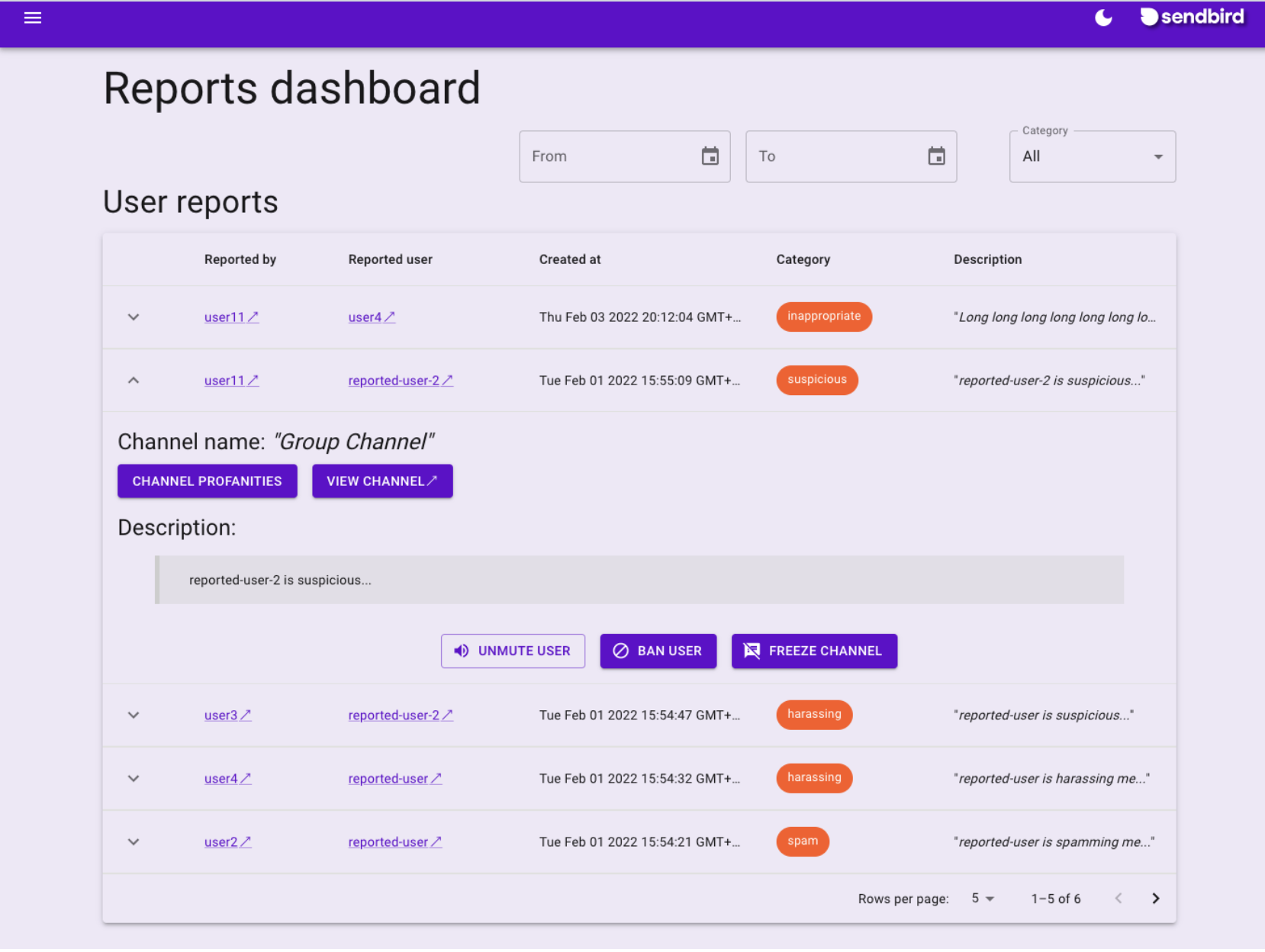

How to use the Sendbird moderation dashboard

Sendbird’s moderation dashboard aims to give businesses more control over their chat applications by providing the tools necessary to maintain a safe and respectful environment for all users. It’s offered as a part of our chat platform to help manage user-generated content.

Once you’ve created a new application and integrated it with the Sendbird SDK, log in to the Sendbird Dashboard, then navigate to the moderation section.

Within the moderation dashboard, you can configure various features such as the profanity filter, spam prevention, and automated moderation. Follow the instructions in the dashboard to customize these settings according to your requirements.

You can also use the dashboard to monitor chat activity in real time, including messages, users, and other information about the chat environment. From there, you can delete messages, mute or ban users, and report users who violate your community guidelines.

Want to learn more about how Sendbird’s moderation dashboard can help you create a safer mobile community? Talk to sales today.