What is a content moderator? Requirements, skills, benefits, & tools

Centuries ago, a king might employ a food taster to protect him. It was a terrible job that involved tasting the king’s food for poison before he ate it. Today, the content moderator plays a similar role in protecting our online communities.

A content moderator screens the content that users create on an online community or platform, known as user-generated content (UGC), and removes anything inappropriate or toxic before it can harm your users or your brand.

The content moderator is on the front lines. They enforce your community guidelines and handle violations so you can focus on growing your online community instead of defending it.

In this blog post, we’ll discuss the types of content moderation, the key skills of a content moderator, and strategies and tools to help you succeed at digital content moderation. We’ll also explain how AI content moderation works.

Let’s dive in!

What is content moderation?

Content moderation is the process of monitoring, reviewing, and screening user-generated content (UGC) on a digital platform, website, or online community to ensure a safe and enjoyable experience for all users.

This involves filtering out and removing, ideally in real time, any content that violates your community guidelines or policies, including hate speech, harassment, sexually explicit material, and more.

Content moderation has two important goals: First, to cultivate a positive, respectful online atmosphere that keeps users coming back. Second, to protect your business from reputational damage and ensure compliance with all legal requirements.

Content moderation can be performed by a human content moderator, by automated moderation tools, or by a combination of both.

The most common types of content moderation are:

Social media moderation: Prevents the spread of harmful, false, or inappropriate content in social communities.

Image moderation: Finds and removes UGC containing inappropriate or harmful images.

Video moderation: Screens user-generated video for explicit, violent, or illegal material.

Audio moderation: Reviews user-generated audio for harmful or inappropriate material.

Text moderation: Screens online written content, including in-app chat, for harmful speech that violates community guidelines.

AI moderation: Uses artificial intelligence (AI) to automatically monitor and delete any content that violates community guidelines or policies, freeing up human content moderators for more nuanced tasks.

What is a content moderator?

A content moderator is a person who monitors and reviews user-generated content (UGC) and removes, in real time, any content that violates the standards and guidelines of an online platform, community, or website.

This person identifies and deletes harmful, illegal, spammy, or inappropriate content before it can negatively impact your users or damage your brand reputation.

Somewhere between a first responder and a trash collector, a content moderator maintains a clean, safe online space that upholds your brand’s ethical standards, meets all legal requirements, and is free of trolls, scammers, spammers, hate speech, and toxic content.

What does a content moderator do?

The mission of a content moderator is to foster a positive, welcoming space that promotes meaningful interactions between online community members.

A content moderator has significant influence on online community trust and safety, plays a central role in online community management, and wears several different hats on the job.

Here are the 8 most important responsibilities of a content moderator.

1. Review user-generated content (UGC)

What is UGC? User-generated content (UGC) refers to any form of content—such as videos, discussion forum posts, digital images, audio files, and other forms of media—that is created by users of an online system or service.

A content moderator screens all content published by users on your platform, including text chats, images, videos, and comments. A content moderator is trained to quickly evaluate whether content violates community guidelines, make a sound judgment call, and delete what doesn’t belong.

2. Enforce community guidelines

What are community guidelines? Community guidelines are a company policy that outlines what type of content or conduct is prohibited on your platform. Your content moderator has a deep understanding of these policies, and works to quickly spot and scrub out inappropriate content before it can do damage.

3. Handle reports of inappropriate content

If a user raises a complaint about content on your platform, the content moderator jumps in to resolve the issue. After enforcing your company policy, the content moderator informs the complainant of the outcome to alleviate any lasting concerns and acknowledge their help in maintaining community trust and safety.

4. Maintain trust and safety

What is trust and safety? Trust and safety refers to a set of business practices that reduce the risk that users of an online platform will be exposed to harmful or inappropriate content. The content moderator removes offensive content and bans bad actors so community members can engage without fear of abuse or harassment.

5. Protect your brand reputation

A content moderator helps to preserve the good associations users have with your business and minimize the bad. They play a vital role in the community, demonstrating your commitment to user wellbeing, applying policies even-handedly, and building trust with community members.

6. Implement updates to content moderation policies

As legal requirements change, so will your content moderation policies. The content moderator implements these updates in the user-facing documentation and upholds the changes during interactions with and between users.

7. Monitor user behavior and trends

Because your content moderator is on the front lines, they are often the first to notice shifts in user behavior, such as new slang or scam tactics not yet addressed by your guidelines. They can flag these trends so your policies stay current, and they know firsthand when automated moderation tools are needed to stay accurate and efficient as content volume and variety grow.

8. Ensure compliance with legal requirements

Content moderation is an evolving process. Content moderators should stay up to date with changes to laws and regulations around data security, user privacy, and compliance, such as GDPR or HIPAA. The content moderator collaborates with legal departments to produce and enforce clear, legally sound community guidelines.

Which types of content does a content moderator review?

Online user-generated content is posted in the form of text, images, audio, and video. Combinations of these formats, such as Instagram stories or LinkedIn posts with images and links, are popular and often require more extensive review, because each element must be checked both on its own and in context with the others.

In a nutshell, a content moderator reviews all of these formats, applying the social media, image, video, audio, text, and AI moderation approaches described earlier.

A content moderator may also review:

- Comments on blog posts

- Social media comments

- External links for spam

- Product or service reviews

The 7 key skills of a content moderator

A content moderator must maintain a safe and positive community space, despite attempts by bad actors to introduce negative elements, from hate speech and bullying to inappropriate content, scams, and more.

An effective content moderator needs the following key skills for success on the job:

Analytical thinking: A content moderator must spot trends and patterns while evaluating content in context across a variety of channels. This requires analysis, as it’s not always obvious which content violates your guidelines. New scams and slang often appear harmless at first glance, but a good moderator has a keen sense for what’s out of place.

Attention to detail: A content moderator must review large volumes of content at a quick pace without sacrificing accuracy. It helps to have a sharp eye for nuance and intent, as communication styles can vary between users. It’s also important to respect cultural sensitivities.

Curiosity: Moderators encounter new memes and expressions regularly, especially from users of different backgrounds. Rather than jump to conclusions or let something slip by unseen, a good content moderator researches what they don’t know to apply a fair, informed standard to everyone.

Sound judgment: A content moderator manages the experience and perception of your online community. They must quickly gather the relevant information, assess the poster’s intent and the content’s impact, and reach a timely decision that aligns with your online community guidelines.

Linguistic fluency: A multilingual community needs a multilingual moderator. Otherwise, members could post harmful content unchecked. Alternatively, automated content moderation tools can be tuned to multiple languages, allowing monolingual moderators to screen content in other languages.

Efficiency: This is especially important for a content moderator who screens content manually, without automated tools. Such a moderator may review hundreds of items each day and must be able to detect and remove, in real time, any UGC that could harm users or your brand.

Resilience: A content moderator’s job involves regular exposure to disturbing and potentially harmful content. Resilience helps them handle the psychological stress of this work while sustaining focus and making the impartial decisions that trust and safety depend on.

The best content moderators are analytical, detail-oriented, and possess sound judgment.

In a constantly changing online environment, how can a content moderator stay current on new content types and technology? Later in this post, we’ll look at how automated content moderation can help.

A note on supporting your content moderator

A content moderator can expect to be routinely exposed to toxic content, making their job not only psychologically daunting but potentially damaging as well. Despite playing a vital role in safeguarding others, moderation is often an afterthought in online community management strategies. As a result, churn can be high among content moderators.

You can support your content moderators with professional counseling services. Access to care helps mitigate the psychological toll of the job and shows your appreciation for their work. Recognition and access to quality care go a long way in helping moderators get through difficult days and stay long-term.

Training can help to buffer the mental burdens of disturbing content while also improving on-the-job accuracy and efficiency. Invest in training that explains your content guidelines, sets clear objectives, and braces moderators for what’s expected. This way, content moderators can be efficient, effective, and also limit their exposure to harmful material.

Top 3 benefits of a content moderator for your business

A content moderator is the guardian of trust and safety for your business, helping foster respectful and safe dialogue within an online community.

Let’s take a closer look at the benefits of employing a content moderator.

Enhanced user experience and brand protection

By removing offensive or irrelevant content and bad actors, a content moderator fosters a safe and welcoming environment for everyone. This makes users feel respected, which encourages a more engaged and positive community. That, in turn, can increase user retention and participation, laying a stronger foundation for business growth.

Because a content moderator knows the company’s policies and brand guidelines, they can help protect the brand’s image by preventing its association with inappropriate or harmful content.

Moreover, in the era of fake news and misinformation, a content moderator is vital for verifying content accuracy and preventing the spread of false information. This is essential for maintaining the brand image, customer trust, and loyalty.

Crucial feedback loop for product improvements

A content moderator often identifies trends in user behavior and content that can inform new product and feature development. This feedback can be crucial for adapting and evolving the platform to better meet user needs, and it is an important part of the engine that drives business growth.

Legal compliance and crisis management

A content moderator plays a crucial role in ensuring that content in the online community does not violate legal statutes, including copyright laws, hate speech regulations, and privacy laws. This reduces the risk of legal repercussions for the platform while maintaining a positive environment that supports organic growth.

Finally, in situations in which content might spark a backlash in the online community, a content moderator can act quickly to contain and manage potentially damaging situations. This mitigates negative impacts on the brand and the online community.

Alternatives to a content moderator: The top 4 content moderation tools

With trust and safety top of mind, brands are increasingly reaching for content moderation tools to streamline their manual content moderation efforts. Here are the top content moderation tools you should consider using.

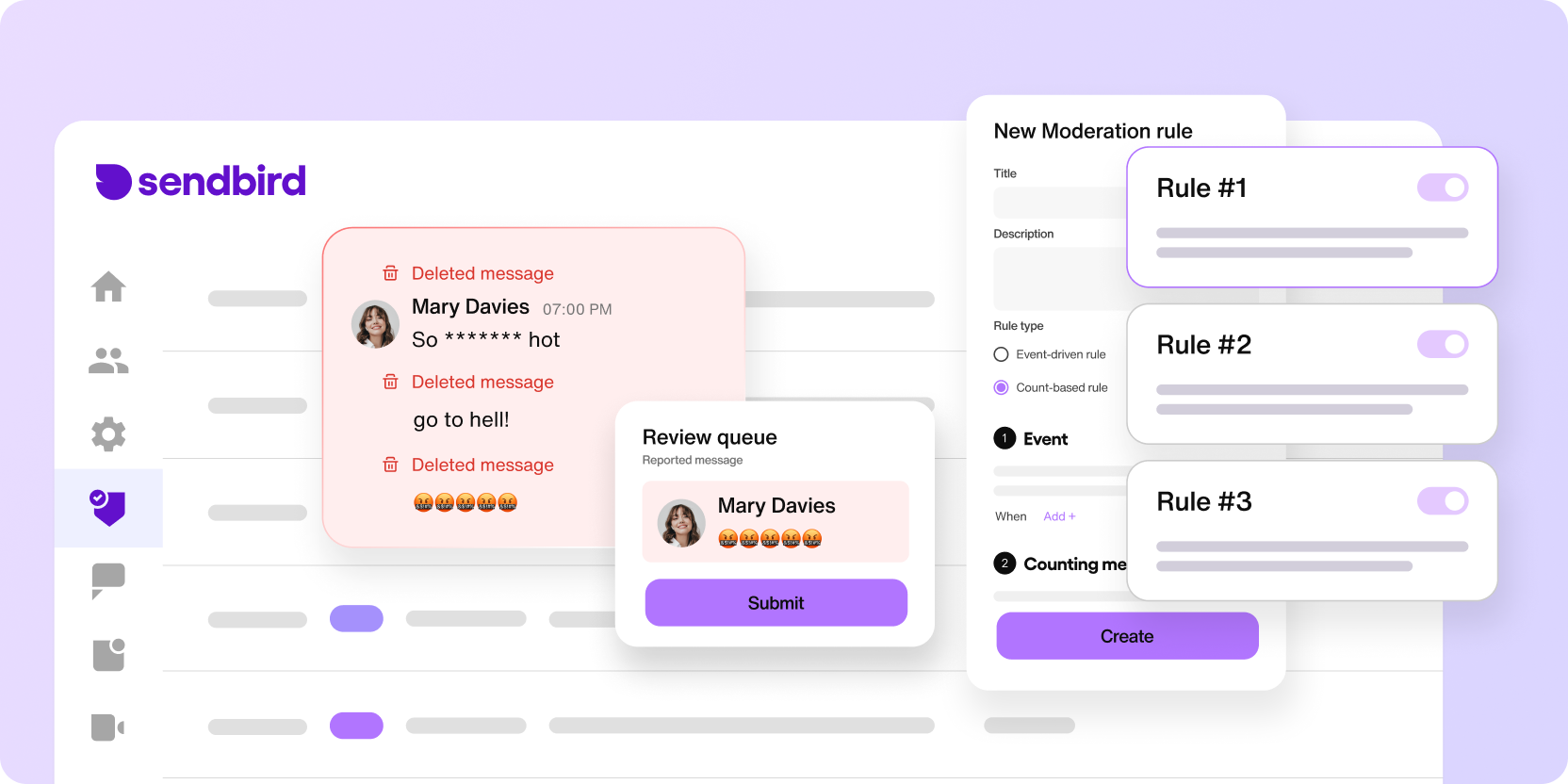

1. Sendbird’s Advanced Moderation

You can fully automate and customize your management of UGC with Sendbird’s Advanced Chat Moderation rule engine.

You get everything you need to create a comprehensive rule-based moderation system, complete with advanced chat moderation features and a suite of proven online safety tools.

Designed for peak efficiency and consistency, this automated tool enables a single moderator to detect, evaluate, log, and act on inappropriate content in real time across all your community spaces, from one centralized dashboard.

Features like rule-based auto-detection, review queues, and moderation records allow for unmatched speed, scale, and precision.

Ultimately, Advanced Chat Moderation from Sendbird is a high-impact, low-effort way to ensure effective real-time moderation at any scale.

"Sendbird’s moderation rule engine has been a game-changer for us, providing the flexibility and efficiency to tailor moderation rules to our unique needs."

- Pina, Product manager, Kakao Entertainment

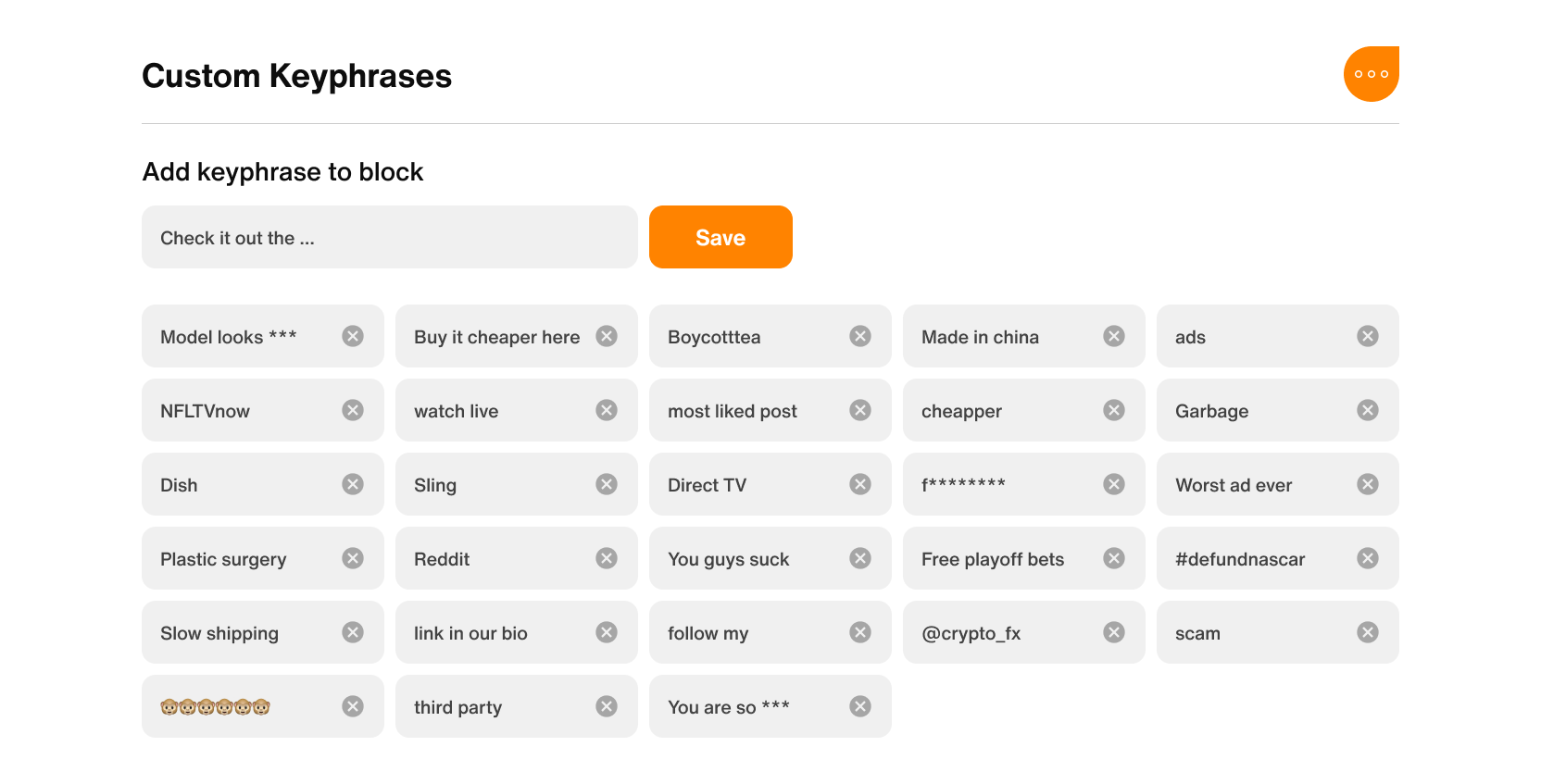

2. Respondology

Respondology is a social media comment moderation tool that helps you preemptively remove spammy, hateful, and damaging comments from your social accounts. It uses a combination of artificial intelligence (AI) filtering technology, custom filters, and human moderators to monitor your social accounts on your behalf. It can moderate social media content across 22 languages.

Features like keyword and emoji filtering help to catch unwanted or harmful comments before they appear while leaving the poster unaware their content has been removed from public view. If you’re looking for a responsive, accurate partner in social media content moderation, Respondology is worth a look.
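To make the idea of hiding a comment from public view concrete, here is a minimal sketch of generic keyword and emoji filtering in Python. It is not Respondology’s implementation; the blocklists and the names `Comment`, `matches_filters`, `moderate`, and `visible_comments` are hypothetical. The key design choice is that a matching comment is hidden rather than deleted, so its author still sees it and is not tipped off.

```python
import re
from dataclasses import dataclass

# Hypothetical blocklists for illustration only; a real deployment would load
# these from configuration and maintain them per community and per language.
BLOCKED_KEYWORDS = {"scam", "free crypto"}
BLOCKED_EMOJI = {"\U0001F595"}  # reversed hand with middle finger extended


@dataclass
class Comment:
    author: str
    text: str
    hidden: bool = False  # hidden from the public, still visible to its author


def matches_filters(text: str) -> bool:
    """True if text contains a blocked keyword (whole word, case-insensitive) or emoji."""
    lowered = text.lower()
    for phrase in BLOCKED_KEYWORDS:
        # \b word boundaries keep "scam" from matching inside "scampi".
        if re.search(r"\b" + re.escape(phrase) + r"\b", lowered):
            return True
    return any(emoji in text for emoji in BLOCKED_EMOJI)


def moderate(comment: Comment) -> Comment:
    """Hide matching comments instead of deleting them, so the poster is not alerted."""
    comment.hidden = matches_filters(comment.text)
    return comment


def visible_comments(comments: list[Comment], viewer: str) -> list[Comment]:
    """Everyone sees unhidden comments; authors also see their own hidden ones."""
    return [c for c in comments if not c.hidden or c.author == viewer]


if __name__ == "__main__":
    thread = [moderate(Comment("alice", "Great post!")),
              moderate(Comment("bob", "Get FREE CRYPTO here"))]
    print([c.author for c in visible_comments(thread, "carol")])  # ['alice']
    print([c.author for c in visible_comments(thread, "bob")])    # ['alice', 'bob']
```

Whole-word matching reduces false positives such as "scam" inside "scampi," but simple filters like this still miss misspellings and context, which is one reason such tools pair filters with AI and human review.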

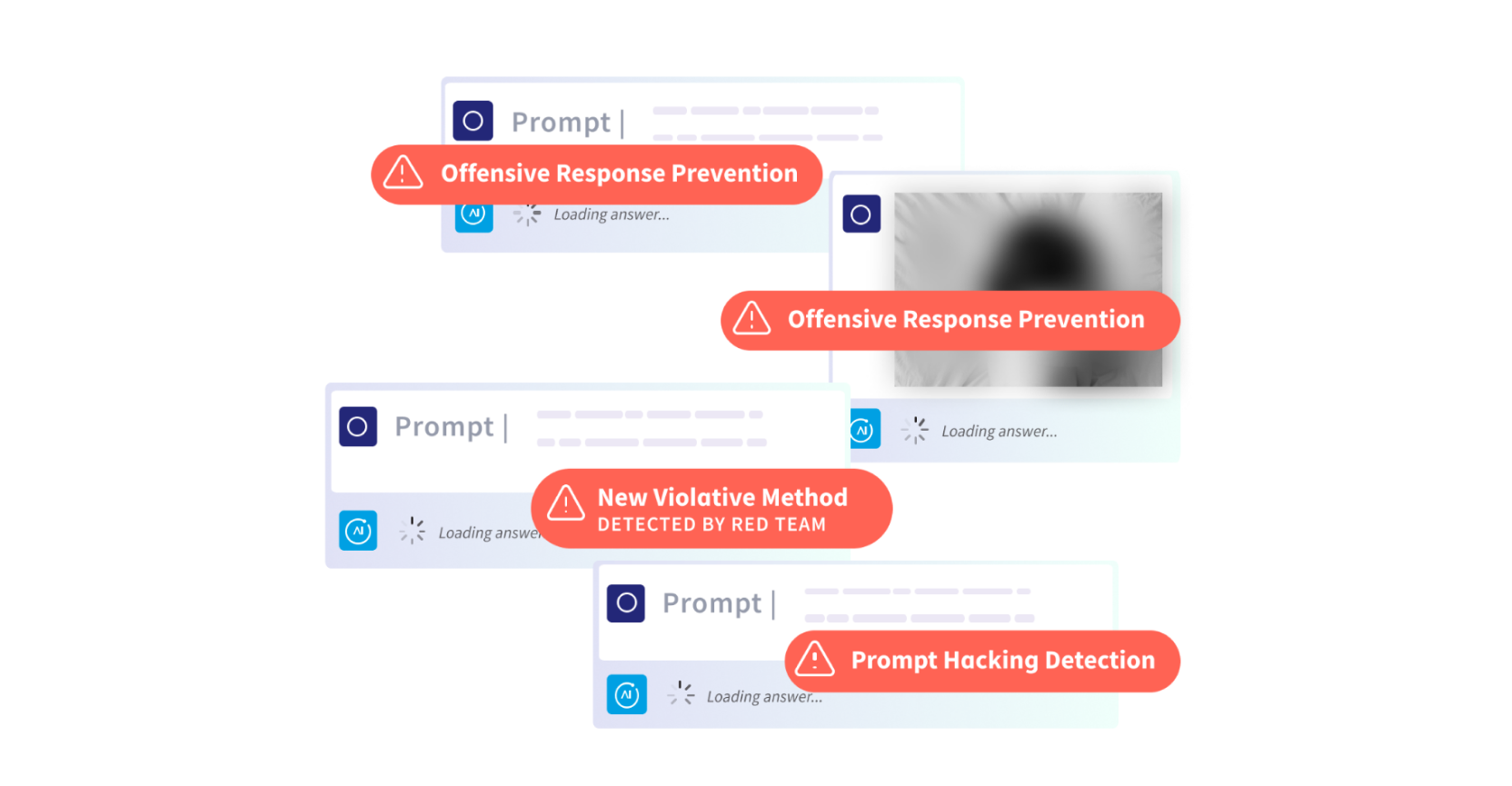

3. ActiveFence

ActiveFence is a content moderation platform offering trust and safety solutions for user and AI-generated content. It uses AI to pre-moderate content, auto-detect threats in 14 categories, and provide real-time threat assessments so you can get a sense of what’s happening across your online community.

Codeless workflows and analytics make ActiveFence easier to use, while features like ComplianceHub help preserve legal standing and anticipate future compliance issues. What makes ActiveFence unique is that it uses AI to detect not only harmful content but also hackers, in order to preserve online community safety.

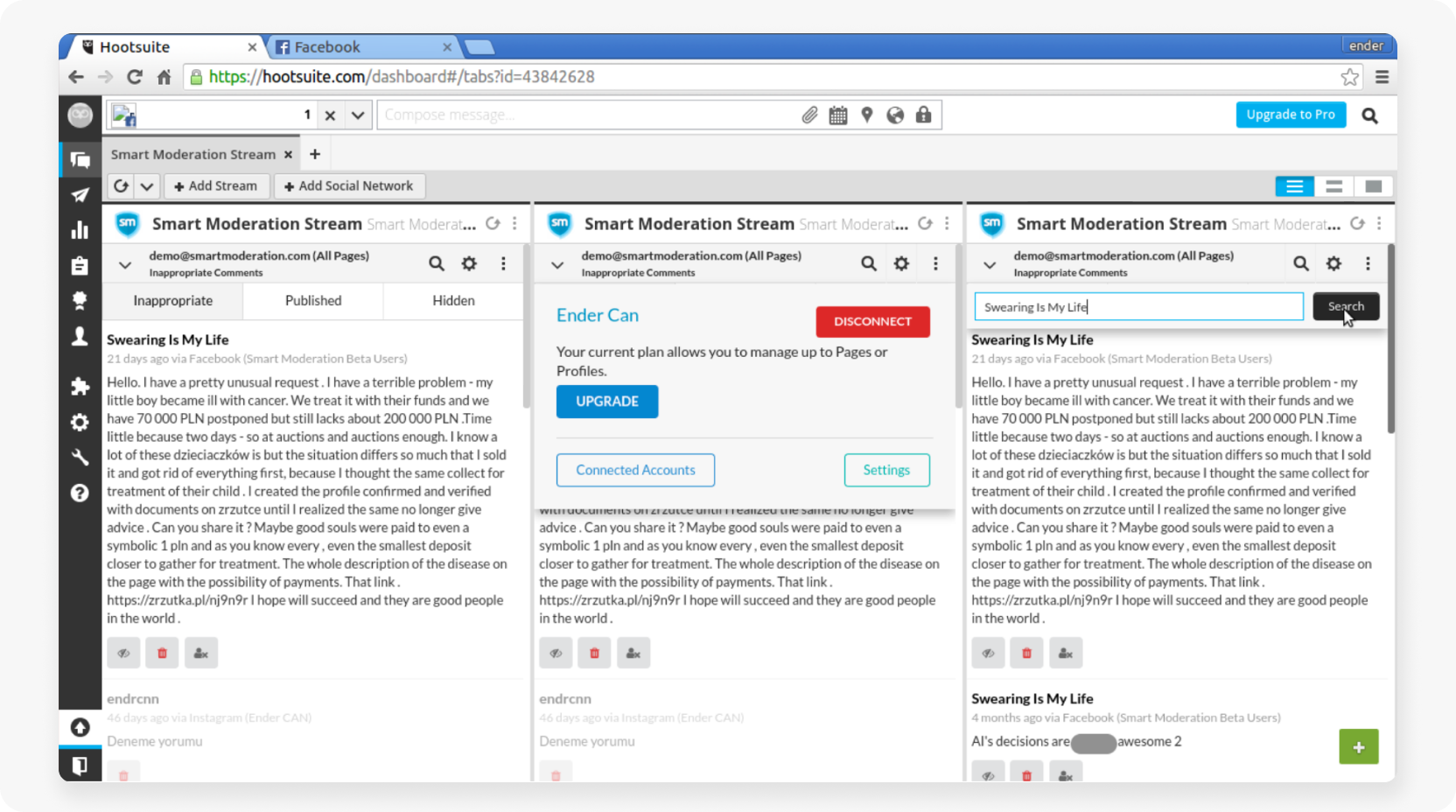

4. Smart Moderation

Smart Moderation is an AI content moderation tool that offers real-time monitoring and automatic moderation for social media. The platform auto-removes ads, spam, scams, and bad actors using AI filtering and monitoring.

You can moderate all social accounts from one integrated dashboard and set custom filters to screen out inappropriate keywords and user behaviors. The AI models learn how to screen based on your filters and auto-hide offending content. Smart Moderation is a low-effort way to auto moderate your social accounts around the clock.

Looking ahead: What will content moderation be like in the future?

Going forward, generative AI promises greater accuracy and automation in content moderation. AI-based content moderation tools will improve with training, allowing them to resolve cases that were formerly left to human moderators. Auto moderation for images, video, and audio will also improve as computer vision, speech recognition, and natural language processing (NLP) systems become more sophisticated.

However, it’s unclear to what extent AI moderation systems will be able to understand user intent and context, so human content moderators will remain vital to ensuring community trust and safety!

So what will content moderation be like with AI?

The AI content moderation process

The online space is always evolving, and a content moderator should be ready to adapt accordingly. This involves staying current with the latest trends, new channels, content types, as well as new tech.

For a human moderator with limited bandwidth, though, keeping up with rapid change can be a challenge. Faced with proliferating data loads, channels, content formats, audiences, and communication styles, your content moderator may miss things.

To remain effective, a community moderator may reach for automated content moderation tools.

What is automated content moderation? It uses software, often powered by AI, to screen content against your guidelines automatically. These platforms allow you to pre-moderate and remove inappropriate content in real time across all channels using one centralized dashboard.

With automated content moderation software to complement manual moderation efforts, one person or a small team can ensure community trust and safety without sacrificing accuracy or efficacy.

These AI systems pre-moderate content, leaving only ambiguous or complex content to be reviewed by the content moderator. AI tools can also moderate user behavior. This can include limiting the number of posts a user can make in a given time period, or freezing or banning an account after repeat offenses.
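The behavior controls described above, limiting posts per time period and freezing or banning accounts after repeat offenses, can be sketched in Python as a sliding-window rate limiter with strike-based escalation. The class name `BehaviorModerator` and all limits and thresholds are hypothetical values chosen for illustration, not taken from any particular tool.

```python
import time
from collections import defaultdict, deque
from typing import Optional

# Hypothetical policy values for illustration; tune them to your community.
FREEZE_AFTER = 3  # confirmed violations before an account is frozen
BAN_AFTER = 5     # confirmed violations before an account is banned


class BehaviorModerator:
    """Sliding-window post limit plus escalating sanctions for repeat offenses."""

    def __init__(self, max_posts: int = 5, window_seconds: float = 60.0):
        self.max_posts = max_posts
        self.window = window_seconds
        self.post_times = defaultdict(deque)  # user -> timestamps of recent posts
        self.strikes = defaultdict(int)       # user -> confirmed violations

    def status(self, user: str) -> str:
        strikes = self.strikes[user]
        if strikes >= BAN_AFTER:
            return "banned"
        if strikes >= FREEZE_AFTER:
            return "frozen"
        return "active"

    def allow_post(self, user: str, now: Optional[float] = None) -> bool:
        """Return True and record the post if the user may post right now."""
        if self.status(user) != "active":
            return False
        now = time.monotonic() if now is None else now
        times = self.post_times[user]
        # Drop timestamps that have slid out of the window.
        while times and now - times[0] >= self.window:
            times.popleft()
        if len(times) >= self.max_posts:
            return False  # over the limit: reject without recording
        times.append(now)
        return True

    def record_violation(self, user: str) -> str:
        """Add a strike (e.g. after a moderator confirms a violation); return the new status."""
        self.strikes[user] += 1
        return self.status(user)


if __name__ == "__main__":
    mod = BehaviorModerator(max_posts=2, window_seconds=10.0)
    print([mod.allow_post("u", now=t) for t in (0.0, 1.0, 2.0, 10.5)])  # [True, True, False, True]
```

A sliding window counts each user’s actual recent posts, so a burst cannot slip through at the boundary of a fixed time window; the trade-off is storing up to `max_posts` timestamps per user.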

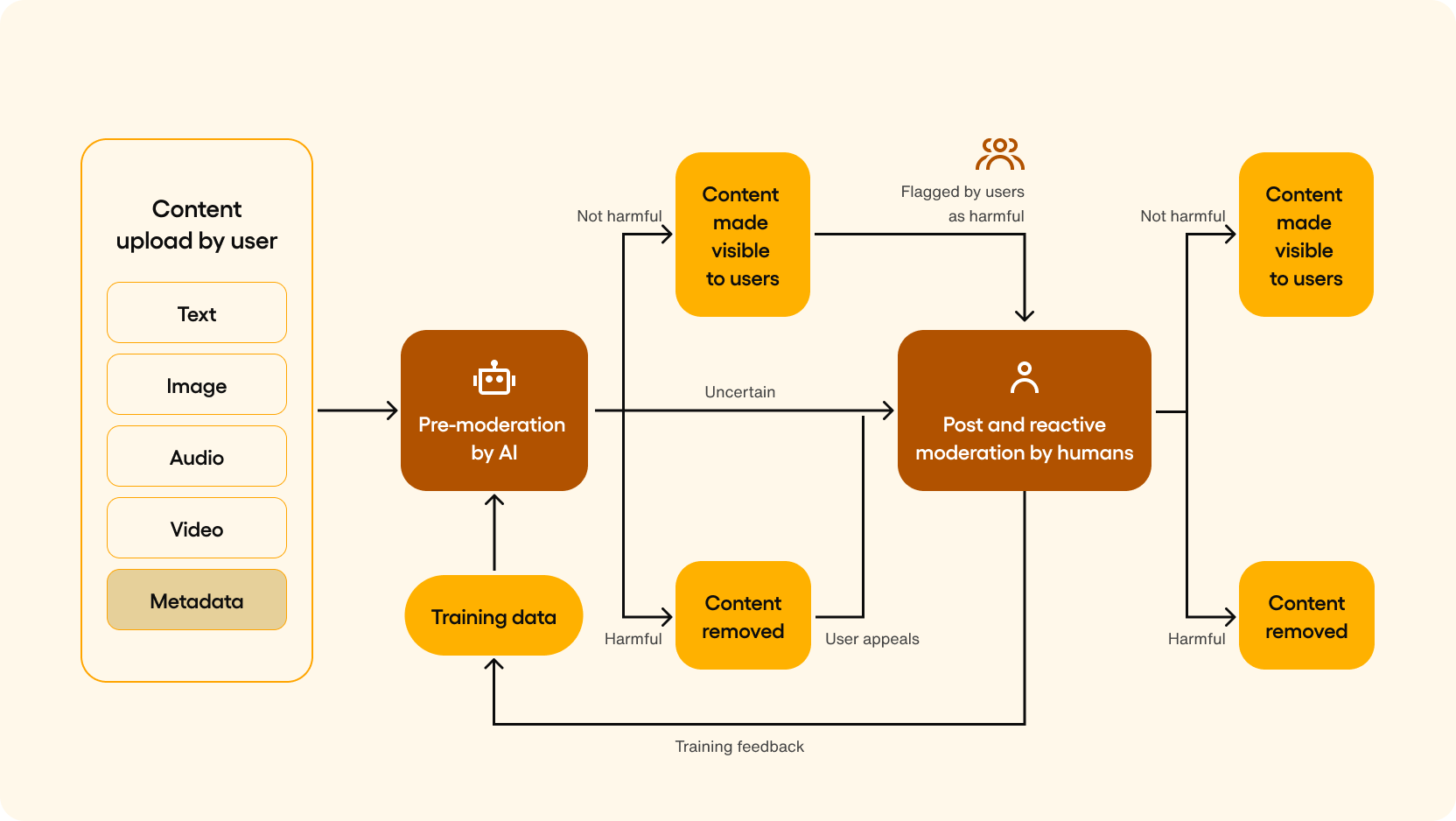

Here’s how AI content moderation tools work:

Here’s an explanation of the above flowchart:

- Uploaded content (text, chat, images, audio) is automatically scanned by moderation tools for material that violates your community guidelines.

- UGC that contains obviously unfit material is categorized — e.g., hate speech, spam, violence — while ambiguous UGC gets escalated to a human moderator.

- The system triggers the corresponding moderation action for unfit content. This can include alerting a human moderator, timing the user out, or freezing accounts.

- A human moderator reviews UGC flagged as ambiguous in their moderation dashboard, then executes the appropriate moderation action.

- Content that doesn’t violate guidelines is posted and made visible to users.

- If the tool supports machine learning, it learns from these moderation decisions, storing them as feedback to improve future classifications.
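The flowchart steps above map onto a small routing function. The following Python sketch assumes a classifier that returns a score from 0 to 1 per violation category; `toy_classifier` is a hard-coded stand-in for a real ML model, and the thresholds, category names, action names, and `ModerationPipeline` class are hypothetical. Clear violations trigger an automated action, mid-range scores are escalated to a human review queue, and everything else is published, with each decision recorded as feedback.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical thresholds; real systems tune these per category and community.
AUTO_ACTION_THRESHOLD = 0.9  # at or above: treat as clearly violating
REVIEW_THRESHOLD = 0.5       # at or above (but below auto): ambiguous, escalate

# Hypothetical mapping from violation category to an automated action name.
ACTIONS = {"spam": "delete", "hate_speech": "delete_and_timeout",
           "violence": "delete_and_freeze"}

# A classifier returns a score between 0 and 1 for each violation category.
Classifier = Callable[[str], Dict[str, float]]


@dataclass
class ModerationPipeline:
    classify: Classifier
    review_queue: List[str] = field(default_factory=list)
    published: List[str] = field(default_factory=list)
    # (content, category, decision) records kept as feedback for later retraining.
    feedback: List[Tuple[str, str, str]] = field(default_factory=list)

    def submit(self, content: str) -> str:
        scores = self.classify(content)  # scan the content
        category, score = max(scores.items(), key=lambda kv: kv[1],
                              default=("none", 0.0))  # pick the top category
        if score >= AUTO_ACTION_THRESHOLD:
            # Clearly unfit: return the action name; executing it is left to the platform.
            action = ACTIONS.get(category, "delete")
            self.feedback.append((content, category, action))
            return action
        if score >= REVIEW_THRESHOLD:
            self.review_queue.append(content)  # ambiguous: escalate to a human
            return "escalated"
        self.published.append(content)  # no violation found: publish
        return "published"

    def human_decision(self, content: str, category: str, decision: str) -> None:
        """A moderator resolves an escalated item ("approve" or an action name)."""
        self.review_queue.remove(content)
        if decision == "approve":
            self.published.append(content)
        self.feedback.append((content, category, decision))  # store as feedback


def toy_classifier(text: str) -> Dict[str, float]:
    """Stand-in for a real ML model: scores spam from two hard-coded phrases."""
    lowered = text.lower()
    if "buy now" in lowered:
        return {"spam": 0.95}
    if "promo" in lowered:
        return {"spam": 0.6}
    return {"spam": 0.05}


if __name__ == "__main__":
    pipeline = ModerationPipeline(classify=toy_classifier)
    print(pipeline.submit("BUY NOW cheap pills"))  # delete
    print(pipeline.submit("check my promo"))       # escalated
    print(pipeline.submit("hello everyone"))       # published
    pipeline.human_decision("check my promo", "spam", "approve")
    print(pipeline.published)  # ['hello everyone', 'check my promo']
```

Where to set the two thresholds is the main design decision: lowering the auto-action threshold removes more content without human review but risks more false positives, while widening the review band sends more borderline cases to moderators.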

Automated tools represent a huge step up in the efficiency and accuracy of content moderation efforts.

For startups, automated tools can bolster human moderation, ensuring community safety and compliance at a fraction of the cost. Likewise, brands with large and diverse online communities can use automated tools to consistently protect their online communities across regions and languages without having to hire human moderators for every one.

Overall, automated moderation and AI can play a key role in scaling your online content moderation strategy while ensuring community and brand protection.

Getting started with content moderation

Your online community may not be royalty per se, but its members do deserve your best efforts at protection.

Without content moderation to keep the toxicity out, your audience may abandon your online community for someplace more pleasant.

Content moderation is most effective when humans and machines work together.

For added precision and accuracy in safeguarding your community and brand, you can reach for automated content moderation tools such as Sendbird’s Advanced Moderation. To learn more, check out our blog post about Advanced Moderation.

In addition, you can leverage Sendbird AI agents for faster and more consistent moderation. These AI-driven agents work with seamless integrations to help maintain a safe environment for users.

Sendbird’s AI agent builder also lets community managers and developers create custom AI agents tailored to their specific moderation needs. This flexibility ensures that communities can apply their unique guidelines while still benefiting from automated content moderation.

Together, these products power an unmatched AI customer experience platform that can streamline moderation workflows, improve overall community safety, and much more.

Ready to start moderating with Sendbird?

You can start a free trial (no credit card or commitment required) or contact us to learn more!