8 best practices for an effective online content moderation strategy

Content moderation for online communities

In our digital era, marketplaces, dating apps, live streaming services, and gaming apps have become vibrant hubs of conversation and debate, as well as pivotal centers for social connection, entertainment, and commerce. They are woven into the fabric of our daily social interactions. However, this surge in digital engagement brings an intricate challenge: managing conversations among a diverse user base with varying opinions, backgrounds, and cultures. How can platforms meet it? With content moderation.

Effective user-generated content moderation (UGC moderation) is more than a regulatory necessity; it is the cornerstone of healthy, engaging, and respectful online communities. This article explores eight approaches to building a robust content moderation strategy, each aimed at striking a delicate balance between freedom of expression and community well-being while fostering the sense of inclusion and accessibility that sustained online engagement depends on. We’ll also discuss what to consider when choosing a content moderation service. But first, let’s define content moderation.


What is content moderation?

Content moderation is the review and management of user-generated content (UGC) on online platforms or applications to ensure it adheres to community guidelines and legal requirements. It involves identifying and addressing harmful or inappropriate content, such as hate speech, misinformation, or illegal material. Carried out by community managers and content moderators, content moderation keeps the online environment safe and positive for all users and ensures that community guidelines and standards are respected, which enhances trust and safety.

Top 4 reasons why content moderation is essential for community safety

Because user-generated content (UGC) is now ubiquitous, content moderation has become a cornerstone of community safety. It involves reviewing, evaluating, and, when necessary, removing UGC that doesn’t align with a platform's community guidelines and policies. Moderating UGC is not easy for community managers and content moderators, but here are four reasons it is critical.

Uphold community guidelines

Effective content moderation upholds community guidelines, establishing acceptable norms and behaviors for platform interaction. These guidelines serve as a roadmap for content moderators, empowering them to make informed decisions about removing or retaining UGC. By enforcing these guidelines, a community manager can protect users from the potential dangers of harmful content, promoting a positive and secure online experience.

Prevent escalation to enhance trust and safety

Real-time content moderation is especially critical in live online chats, where messages reach other users the moment they are sent. Content moderators, often called "chat moderators" or community managers, identify and address disruptive behavior before it escalates. This proactive approach helps maintain order and civility so that users can engage without fear of harassment or abuse. Moderators employ various techniques, including keyword filtering, pattern recognition, and manual review. They also communicate directly with users, issuing warnings or taking disciplinary action when necessary.
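To make keyword filtering concrete, here is a minimal Python sketch. The word list, the look-alike character map, and the function names are illustrative assumptions, not part of any particular moderation product. It undoes common character substitutions (so "b@dw0rd" is still caught) and matches whole words only, which avoids flagging innocent words that merely contain a blocked string.

```python
import re

# Hypothetical list of blacklisted words; a real platform maintains one per
# community and language.
BLOCKED_WORDS = {"badword", "spamword"}

# Map common look-alike characters back to letters so "b@dw0rd" still matches.
LOOKALIKES = str.maketrans({"@": "a", "4": "a", "3": "e", "1": "i", "0": "o", "$": "s", "5": "s"})


def normalize(text: str) -> str:
    """Lowercase the text and undo simple character substitutions."""
    return text.lower().translate(LOOKALIKES)


def find_blocked_words(message: str) -> list[str]:
    """Return blacklisted words that appear as whole words in the message."""
    words = re.findall(r"[a-z]+", normalize(message))
    return [word for word in words if word in BLOCKED_WORDS]


print(find_blocked_words("This is a B@DW0RD!"))  # ['badword']
```

Whole-word matching trades recall for precision: "badwords" slips through unless the plural is also listed, which is one reason keyword filters are usually paired with pattern recognition and manual review.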

Protect brand reputation and uphold community standards

Content moderation does more than protect users from harm; it also safeguards the reputation of online platforms. By proactively removing harmful content, platforms demonstrate their commitment to creating a safe and welcoming environment for their users. This, in turn, fosters user loyalty and trust, leading to increased engagement and long-term sustainability.

Safeguard intellectual property

Content moderation plays a crucial role in safeguarding intellectual property rights by preventing the unauthorized distribution of copyrighted material. By identifying and removing infringing content, platforms can protect the creative works of artists, musicians, writers, and other content creators. This helps ensure that creators receive proper compensation for their work and encourages continued innovation and creativity.

Now that we’ve covered the basics, let’s discuss eight ways to create an effective content moderation strategy.


8 tips to create an effective content moderation strategy

Use the following tips to create or strengthen your content moderation strategy.

1. Craft clear community guidelines & standards

The foundation of effective content moderation lies in establishing clear, accessible community guidelines. These standards should be more than just a list of dos and don'ts; they must reflect the platform's ethos and community's expectations. For instance, with its professional focus, a platform like LinkedIn will have different standards than a more casual social media site like Instagram.

The key is specificity and adaptability. For example, Reddit's community guidelines are detailed and evolve based on user feedback and changing online dynamics. This approach sets clear boundaries while earning user trust and compliance.

For user-generated content across diverse platforms like marketplaces, dating apps, online gaming, and social apps, it's crucial to have a comprehensive set of conduct guidelines. These guidelines should be tailored to fit the unique environment and user base of each platform, but generally, they should encompass the following areas:

Personal information: Rules about sharing confidential or sensitive information, especially critical in dating and social apps where privacy is paramount.

Mentioning staff or users: Guidelines on referencing staff members or other users, particularly in a negative context. This is vital in marketplaces and gaming platforms where interactions with staff or fellow gamers are common.

Defamatory content: Prohibitions against content that could be slanderous or libelous are essential across all platforms to maintain respectful interactions.

Intolerance and discrimination: Policies against intolerant behavior or discriminatory remarks are crucial for creating an inclusive environment.

Language use: Standards for acceptable language are essential in online gaming and social apps where communication is frequent and varied.

Bullying and aggression: Rules against bullying, harassment, or insulting behavior are fundamental in competitive environments like online gaming.

External links: Guidelines on sharing external links are relevant to marketplaces and social apps where external content might be more prevalent.

Advertising content: Restrictions on promotional material, particularly in marketplaces and dating apps where unsolicited advertising can be intrusive.

Commenting on moderation: Policies regarding user comments on moderation practices are essential for transparency and trust across all platforms.

Clear, specific, documented community guidelines will help a community manager or content moderator enhance trust and safety while protecting the platform's reputation!
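Some of these categories, such as personal information and external links, can be partly screened with pattern matching. The sketch below assumes simplified regular expressions for emails, phone numbers, and URLs; the category names and patterns are hypothetical and far less thorough than a production detector would need to be.

```python
import re

# Simplified, hypothetical patterns for illustration; production detectors must
# handle international formats, obfuscation ("jane at example dot com"), etc.
POLICY_PATTERNS = {
    "personal_info_email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "personal_info_phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
    "external_link": re.compile(r"\bhttps?://\S+|\bwww\.\S+", re.IGNORECASE),
}


def detect_policy_patterns(message: str) -> set[str]:
    """Return the names of guideline categories whose pattern matches the message."""
    return {name for name, pattern in POLICY_PATTERNS.items() if pattern.search(message)}


print(detect_policy_patterns("DM me at jane.doe@example.com"))  # {'personal_info_email'}
```

These patterns produce false positives (a date such as 2024-01-15 matches the phone pattern), so a match is better treated as a signal for review or a gentle warning than as grounds for automatic removal.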

2. Balance proactive and reactive content moderation approaches

Balancing proactive and reactive content moderation is crucial. Proactive measures include automated filters that screen for spam and for blacklisted words such as slurs. For example, YouTube uses sophisticated algorithms to pre-screen and flag potentially harmful content. However, these systems aren't foolproof and often require human intervention for context-sensitive issues.

On the flip side, reactive strategies, such as user reports and post-publication moderation, are equally important. Platforms like Facebook allow users to report content that they find offensive or harmful, which is then reviewed by human moderators. This approach helps manage content that slips through automated systems and empowers users to contribute to community standards.
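A reactive reporting flow can be sketched as a queue that escalates content to human moderators once enough distinct users have reported it. The threshold of three reporters and the class and method names are assumptions for illustration. Counting distinct reporters rather than raw reports keeps a single user from forcing a review by reporting the same message over and over.

```python
from collections import defaultdict

# Hypothetical policy: queue content for human review once this many
# distinct users have reported it.
REPORT_THRESHOLD = 3


class ReportQueue:
    """Collects user reports and escalates content to a human review queue."""

    def __init__(self, threshold: int = REPORT_THRESHOLD) -> None:
        self.threshold = threshold
        self.reporters = defaultdict(set)  # content id -> set of reporter ids
        self.review_queue = []             # content ids awaiting a moderator

    def report(self, content_id: str, reporter_id: str) -> bool:
        """Record a report; return True only if this report queued the content."""
        self.reporters[content_id].add(reporter_id)  # a set ignores repeat reporters
        if content_id in self.review_queue:
            return False  # already waiting for a moderator
        if len(self.reporters[content_id]) >= self.threshold:
            self.review_queue.append(content_id)
            return True
        return False
```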

It's imperative to establish a well-defined system of penalties for violations of the content moderation guidelines, especially in diverse environments like marketplaces, dating apps, online gaming, and social platforms. These sanctions should be communicated and consistently enforced to maintain order and respect within the community. Examples of such sanctions include:

Content removal: The deletion of content that violates the established rules, a common practice across all types of apps to maintain a healthy online environment.

Content editing: Modification of user-generated content to remove offensive or rule-breaking elements, particularly relevant in social and dating apps where interactions are more personal.

Temporary suspension: A time-bound restriction on access privileges, effective in online gaming and marketplaces where immediate consequences for rule breaches are necessary.

Permanent blocking: The ultimate sanction involving the indefinite revocation of access privileges, used in severe cases across all platforms to safeguard the community's integrity.
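One way to keep sanctions consistent is to encode the escalation ladder in code, so every moderator and automated rule applies the same steps. This sketch assumes a hypothetical policy: the violating content is removed in every case, account penalties grow with the number of prior violations, and severe violations skip straight to a permanent block. The durations and action names are placeholders, and content editing is left to moderator discretion rather than modeled here.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Optional


@dataclass
class Sanction:
    action: str                           # "remove_content", "suspend", or "block"
    duration: Optional[timedelta] = None  # set only for temporary suspensions


def next_sanction(prior_violations: int, severe: bool = False) -> Sanction:
    """Choose the account-level sanction from a hypothetical escalation ladder.

    The violating content is assumed to be removed in every case; the ladder
    decides what happens to the account on top of that.
    """
    if severe or prior_violations >= 3:
        return Sanction("block")                       # permanent blocking
    if prior_violations == 2:
        return Sanction("suspend", timedelta(days=7))  # longer suspension
    if prior_violations == 1:
        return Sanction("suspend", timedelta(days=1))  # first suspension
    return Sanction("remove_content")                  # first offense: removal only
```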

3. Emphasize transparency in content moderation practices

Transparency in content moderation is key to building trust. Platforms should openly communicate their moderation policies and actions. For instance, Twitter's transparency reports detail government requests and enforcement actions, giving users insight into the platform's moderation practices. This openness helps demystify the moderation process and builds user trust. Here are four elements to consider when building your content moderation strategy:

Clear policy communication: Regularly update and communicate the platform's content moderation policies to users. Ensuring everyone understands the rules and expectations is fundamental to maintaining a transparent and trustworthy environment.

Transparency reports: Publish transparency reports periodically. These reports should detail actions taken in the moderation process, such as content removals or account suspensions, and any government requests. Providing such insights demystifies the moderation process and reinforces user trust.

Moderation action notifications: Inform users when their content is moderated and explain the reason for the action. This approach maintains transparency and educates users about acceptable standards, helping prevent future violations.

Appeals process: Clearly outline an appeal process for users who believe their content was unfairly moderated. This demonstrates a commitment to fairness and due process, and it gives users a sense of empowerment and respect within the platform.
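Transparency reports and appeal statistics can be generated directly from a moderation log. The sketch below assumes a minimal, hypothetical log record holding the action taken, the guideline it enforced, and whether it was appealed and overturned; a real report would also cover reporting periods and, where relevant, government requests.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class ModerationAction:
    """One entry in a hypothetical moderation log."""
    action: str               # e.g. "remove_content" or "suspend"
    reason: str               # guideline category that was enforced
    appealed: bool = False
    overturned: bool = False  # True if an appeal reversed the action


def transparency_summary(log: list[ModerationAction]) -> dict:
    """Aggregate a moderation log into counts for a periodic transparency report."""
    appeals = [entry for entry in log if entry.appealed]
    return {
        "actions_by_type": dict(Counter(entry.action for entry in log)),
        "actions_by_reason": dict(Counter(entry.reason for entry in log)),
        "appeals": len(appeals),
        "overturned_on_appeal": sum(entry.overturned for entry in appeals),
    }
```

Publishing the overturn rate next to the action counts shows users that appeals can change outcomes, and a rising rate can hint that a guideline is unclear or a filter is too aggressive.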

4. Cultivate a culture of respect and inclusivity in UGC

Effective content moderation goes beyond removing harmful content; it fosters a culture of respect and inclusivity. When crafting your content moderation strategy, incorporate etiquette guidelines tailored to the specific nature of your platform, whether it's a marketplace, dating app, online game, or social app. The primary aim of these guidelines is to encourage positive interactions among users, fostering good behavior rather than merely policing poor conduct. They could include general principles as well as specific advice, such as:

Promoting respect: Consistently encouraging users to interact respectfully is crucial in all apps for maintaining a friendly and welcoming environment.

Communication style: Advising against writing in ALL CAPS, which can be perceived as shouting or aggression. This is particularly important in social and dating apps, where tone is vital.

Cultural sensitivity: Encouraging awareness and respect for cultural differences is especially relevant in global platforms like marketplaces and online gaming.

Constructive feedback: Guiding users to provide constructive and helpful feedback is critical in marketplaces where reviews and interactions can significantly impact businesses and user experiences.

Positive language: Encouraging positive and supportive language helps foster a welcoming community, which is especially important in social apps and online gaming environments.
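Etiquette guidelines like the ALL CAPS advice can be supported by lightweight heuristics. This sketch flags messages whose letters are mostly uppercase; the 80% threshold and the eight-letter minimum (which keeps short messages such as "OK" or "LOL" from triggering it) are assumptions to tune per platform. Because the goal is encouraging good behavior, a match would more sensibly trigger a friendly reminder than a penalty.

```python
def is_shouting(message: str, min_letters: int = 8, threshold: float = 0.8) -> bool:
    """Heuristically flag messages written mostly in capital letters.

    Letters from scripts without case (e.g. Chinese) never count as uppercase,
    so messages in those scripts are effectively never flagged.
    """
    letters = [c for c in message if c.isalpha()]
    if len(letters) < min_letters:  # ignore short messages like "OK" or "LOL"
        return False
    upper_ratio = sum(c.isupper() for c in letters) / len(letters)
    return upper_ratio >= threshold
```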

A community manager also plays a crucial role in setting the tone of conversations. For example, content moderators in online forums like Quora guide discussions, encourage respectful debate, and discourage toxic behavior. This approach supports compliance with community standards and promotes a positive, constructive online environment.

5. Leverage technology with the human touch of content moderators

Combining auto-moderation tools with human judgment is vital for addressing complex content issues. While software rule engines and AI-based moderation solutions excel at handling large volumes of content quickly, human content moderators understand nuance and context better. Twitch is a good example: AI moderation tools monitor chat streams for potential issues, but human moderators step in for nuanced decisions during live streams. To build on this synergy, consider the following aspects when creating a content moderation strategy:

Adaptive moderation strategies: Keep moderation relevant in rapidly changing online environments by turning new rules that moderators identify into automated rules, so the system adapts to evolving user behaviors and content trends.

Escalation protocols: Implement escalation protocols so that human moderators systematically review content that AI or rule engines flag as sensitive or ambiguous. This process helps ensure accurate, fair, and context-aware moderation decisions.

Feedback loop integration: A crucial part of this synergy is the feedback loop, in which content moderators' insights continually refine AI algorithms and automated content rules. For example, a committee that regularly reviews moderation logs can drive continuous improvement of auto-moderation tools so they handle tricky user-generated content better.
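Escalation and feedback can be sketched together. This minimal example assumes some classifier has already produced a harm score between 0 and 1 for each message (no specific AI service is implied). High scores are removed automatically, an ambiguous middle band is escalated to human review, and terms that moderators confirm as violations become automated rules. The thresholds and names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ModerationPipeline:
    """Routes messages by a classifier's harm score and learns from moderators."""
    auto_remove_at: float = 0.95  # hypothetical thresholds; tune per platform
    review_at: float = 0.60
    learned_rules: set[str] = field(default_factory=set)

    def route(self, text: str, harm_score: float) -> str:
        """Return "remove", "human_review", or "allow" for one message.

        harm_score is assumed to come from some AI classifier, scaled 0 to 1.
        """
        words = set(text.lower().split())
        if words & self.learned_rules:
            return "remove"        # matches a rule a moderator confirmed earlier
        if harm_score >= self.auto_remove_at:
            return "remove"        # confident enough to act automatically
        if harm_score >= self.review_at:
            return "human_review"  # ambiguous: escalate to a person
        return "allow"

    def add_rule(self, term: str) -> None:
        """Feedback loop: a moderator-confirmed term becomes an automated rule."""
        self.learned_rules.add(term.lower())
```

Keeping the review band wide at first sends more content to people; as moderator decisions accumulate, the thresholds and learned rules can be adjusted.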

6. Ensure better community engagement, online safety, and feedback loops

Involving the community in shaping content moderation policies is crucial. Platforms like Stack Exchange have community-elected content moderators, and policies are often discussed in open forums. This approach ensures the community's voice is heard and helps refine and update content moderation practices. Here are some key aspects and examples to consider to get the best results from your content moderation strategy:

Open policy discussions: Encouraging open forums for policy discussion, as seen on platforms like GitHub, fosters transparency and allows users to contribute to the rule-making process, building trust and a sense of ownership.

Regular surveys and polls: Conducting surveys or polls helps gather community feedback on current moderation practices and potential policy changes, similar to approaches used by LinkedIn for professional communities.

Feedback impact: Publishing reports that detail how community feedback has influenced moderation policies (similar to Twitter's transparency reports, but focused on community input) enhances transparency and shows responsiveness to community needs.

8. Continuously improve your content moderation strategy

The digital landscape is ever-evolving, and content moderation strategies must adapt accordingly. Continuous improvement, driven by data analysis and user feedback, is key. Here are some ways to improve your content moderation strategy:

Adapting to technological advancements: Continuously integrating the latest advancements in AI and machine learning into content moderation tools is essential. For instance, marketplaces can benefit from improved image recognition to detect counterfeit items, while dating apps can use advanced algorithms for better detection of inappropriate content or fraudulent activities.

Scenario-based training for moderators: Regular training sessions based on real-life scenarios can greatly enhance the effectiveness of human content moderators. This is particularly relevant for platforms like dating apps and marketplaces, where moderators need to understand the nuances of personal interactions and transactional disputes.

Benchmarking against industry standards: Regularly compare moderation practices with industry standards and competitors. This benchmarking can reveal gaps in moderation strategies and inspire improvements. For example, a dating app could look at how competitors handle user reports of harassment to enhance their own response protocols.

Crisis response planning: Develop and regularly update crisis response plans for handling sudden spikes in harmful content or coordinated attacks, which are particular risks for online gaming and social platforms.
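A crisis plan starts with noticing the spike. As a rough sketch, assume you count flagged messages per fixed interval (say, every five minutes) and compare each new count with the average of recent intervals. The window size, multiplier, and minimum count below are illustrative assumptions, not recommended values.

```python
from collections import deque


class SpikeDetector:
    """Flags a possible crisis when flagged-content volume jumps above baseline."""

    def __init__(self, window: int = 12, multiplier: float = 3.0, min_count: int = 20) -> None:
        self.history = deque(maxlen=window)  # flagged counts for recent intervals
        self.multiplier = multiplier
        self.min_count = min_count           # ignore jumps that are tiny in absolute terms

    def observe(self, flagged_count: int) -> bool:
        """Record one interval's count; return True if it looks like a spike."""
        baseline = sum(self.history) / len(self.history) if self.history else None
        self.history.append(flagged_count)
        if baseline is None:
            return False  # no history yet to compare against
        return flagged_count >= self.min_count and flagged_count > self.multiplier * baseline
```

When the detector fires, the crisis plan takes over, for example by tightening automated thresholds or alerting on-call moderators. Spike intervals also enter the rolling baseline here, so a sustained attack gradually raises it; excluding flagged intervals is one possible refinement.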

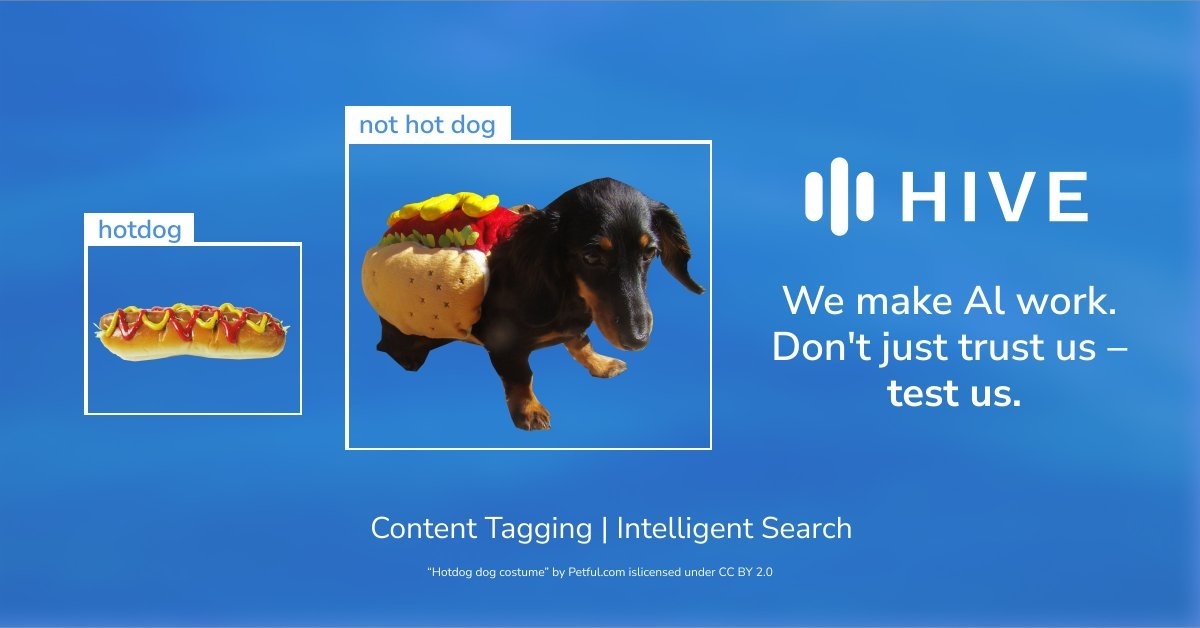

Why AI content moderation is an essential consideration for your content moderation strategy

AI content moderation has emerged as a transformative force in online safety. By leveraging artificial intelligence, online platforms can review and evaluate user-generated content (UGC) at scale to ensure compliance with community guidelines and shield users from harmful or offensive material. Whether applied to video, text, or chat, AI content moderation can take more context into account than fixed rules and detect subtler forms of harmful content. Whereas traditional automated moderation relies on predefined rules and patterns, AI attempts to approximate human insight. Hive Moderation is one popular solution.

Like rule-based engines, AI algorithms can efficiently scan vast amounts of UGC, identifying patterns, language, and imagery that indicate potential community guideline violations. This automation frees human moderators to focus on complex cases that require a deeper understanding of nuance and judgment. AI content moderation benefits many forms of online engagement, including chat moderation, and as it continues to evolve, its potential to enhance trust and safety in the digital landscape continues to grow.

Maximizing trust and safety with advanced content moderation

Effective UGC moderation is an art that requires a balance of clear guidelines, technological support, human judgment, and community involvement. By fostering a culture of respect and inclusivity and navigating the complex legal and ethical landscape, online platforms can create safe spaces that are enriching and representative of diverse perspectives.

As digital interactions continue to grow and evolve, so must our approaches to content moderation, ensuring they remain relevant, effective, and respectful of the global online community.

The next question is: How can you select an effective content moderation solution? At Sendbird, our Advanced Moderation tools embody many of these principles, offering a sophisticated solution for managing user-generated content across platforms and web or mobile applications.

Sendbird’s chat content moderation tools combine fast and precise automation with invaluable human insights. Sendbird’s content moderation rule engine, review queue, live dashboard, and moderation logs form a comprehensive suite that addresses the diverse challenges of online community management across verticals.

Request access today or start a discussion in the Sendbird Community to learn more!