How to build chat moderation in your app (and why you should)

Chat moderation for digital communities

As online communities grow in number, engagement, and complexity, the potential for bad actors in online conversations grows as well. Therefore, building a safe and positive online chat environment becomes increasingly important. This can be achieved through chat moderation.

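In this article, we’ll explore the concept of chat moderation, its significance in online communities, and how Sendbird's moderation tools can help you maintain a safe and engaging space for users. Along the way, we’ll also cover the main types of chat moderation, the trade-offs between manual and automated moderation, and how to extend Sendbird’s built-in tools with custom integrations.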

After reading this article, you’ll have what you need to implement an effective moderation strategy tailored to your community's specific needs.

Note: Although this article focuses on content moderation related to in-app chat, the core concepts of content moderation discussed below apply to moderation across other digital spaces, such as social media and online forums.

What is chat moderation?

Chat moderation is the process of managing and regulating conversations within online chat platforms, forums, and communities. It plays a vital role in ensuring that interactions are respectful, safe, and conform to community guidelines.

Chat moderation is typically conducted by human moderators, community managers, automated systems, or a combination of these. Together, they work to prevent spam, harmful content, and inappropriate content from spreading within the platform.

Why do digital platforms need chat moderation?

A review of the current content moderation landscape reveals some concerning statistics. According to a study by the Pew Research Center, 41% of American adults have been harassed online, and more than 10% have been affected mentally or emotionally. Sixty-two percent believe that online harassment is a major problem. Among the people surveyed, nearly 80% believe that online services should take action to implement effective moderation, and 13% stopped using an online service after seeing others being harassed there. More broadly, nearly three-quarters of consumers believe that security is the most important part of an online experience.

It stands to reason, therefore, that users are more likely to engage with platforms that actively address and manage inappropriate behavior. Keep this in mind when building in-app chat, especially for business-to-consumer communications.

Let’s consider key reasons to provide effective chat moderation for your app.

Maintaining a safe and positive environment: Chat moderation is necessary to ensure that all participants feel comfortable engaging in conversations within the platform. By actively managing and addressing harmful or offensive content, moderators can foster an environment where users feel safe and respected, and conversations are meaningful.

Upholding community guidelines and standards: Chat moderation helps enforce a community’s standards of acceptable behavior, ensuring that users are aware of the expectations and that they adhere to the established guidelines.

Protecting brand reputation and user retention: A well-moderated community, such as a moderated group chat, is more likely to attract and retain users, as people are drawn to environments where they feel safe and respected. Additionally, effective chat moderation can protect a company's brand reputation by preventing the spread of harmful content or negative interactions.

Fulfilling legal requirements: Depending on the jurisdiction, legal requirements related to content regulation and data privacy may necessitate effective chat moderation. Implementing a robust moderation strategy can help companies comply with these regulations.

Now that we have an understanding of the what and why behind chat moderation, we’ll shift our focus to the task of chat moderation itself.

Types of chat moderation

There are three primary types of chat moderation, and their differences come down to the type of content being moderated.

Text moderation

This involves reviewing and filtering text-based messages to prevent offensive language, spam, bullying, and other inappropriate content.

Image moderation

Image moderation can be done by scanning images for explicit or offensive material, such as nudity, violence, or other graphic content.
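Automated image moderation usually works by asking a classifier for per-category verdicts and comparing them against a policy threshold. A minimal sketch, assuming a response shaped like Google Cloud Vision’s SafeSearch annotation (fields such as `adult`, `violence`, and `racy`, each holding a likelihood from `VERY_UNLIKELY` to `VERY_LIKELY`). The categories checked and the `LIKELY` cutoff are hypothetical policy choices, not Sendbird defaults.

```javascript
// Likelihood values used by Cloud Vision SafeSearch, ordered from least to most likely.
const LIKELIHOODS = ["UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY"];

// Hypothetical policy: which categories to check and the minimum likelihood that blocks an image.
const DEFAULT_POLICY = { categories: ["adult", "violence", "racy"], blockAt: "LIKELY" };

// Returns the categories whose likelihood meets or exceeds the policy cutoff.
function flaggedCategories(safeSearch, policy = DEFAULT_POLICY) {
  const cutoff = LIKELIHOODS.indexOf(policy.blockAt);
  return policy.categories.filter(
    (category) => LIKELIHOODS.indexOf(safeSearch[category] ?? "UNKNOWN") >= cutoff
  );
}

// An image is blocked if any checked category is flagged.
function shouldBlockImage(safeSearch, policy = DEFAULT_POLICY) {
  return flaggedCategories(safeSearch, policy).length > 0;
}

// Example annotation for an image with likely violent content.
const annotation = { adult: "VERY_UNLIKELY", violence: "LIKELY", racy: "UNLIKELY" };
console.log(flaggedCategories(annotation)); // [ 'violence' ]
console.log(shouldBlockImage(annotation)); // true
```

Keeping the policy as data makes it easy to offer stricter or looser settings per community without changing the check itself.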

Voice chat moderation

Moderating voice chats involves analyzing voice-based communications, such as voice notes, to identify and block harassment, offensive speech, or bullying.

For all of these types of content, the process by which the chat moderation is performed can be either manual or automated. Let’s look more closely at the differences between these two.

Manual versus automated content moderation for in-app chat

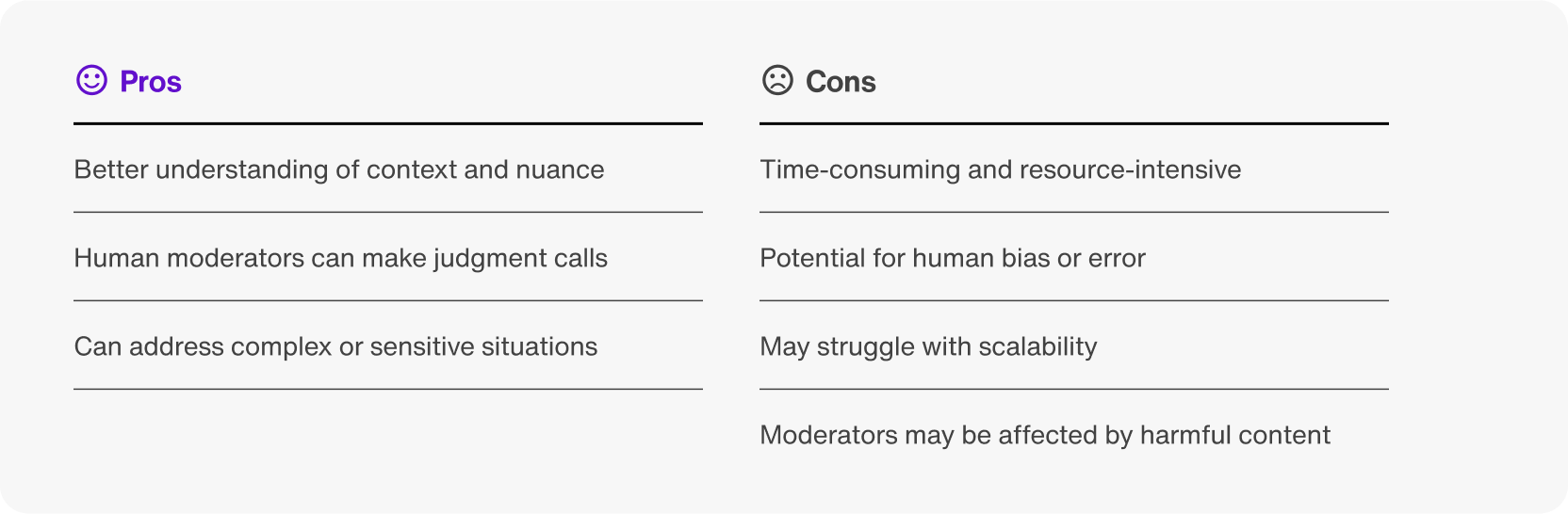

Manual content moderation requires human moderators—often employees or trusted members of the chat community—to review and manage content. These moderators are responsible for enforcing community guidelines and taking appropriate action when necessary. Manual content moderation is typically more accurate in detecting nuances and considering context, but can be time-consuming and resource-intensive.

Pros and cons of manual content moderation

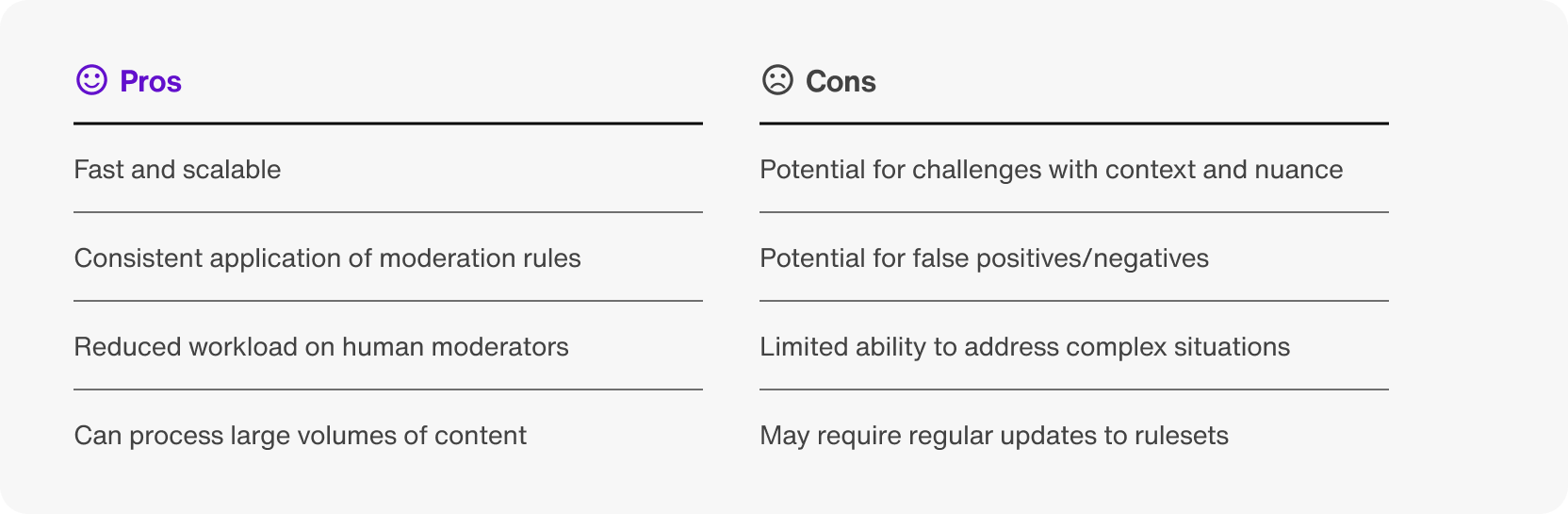

Automated content moderation, on the other hand, relies on rulesets, blocklists, and machine learning algorithms to evaluate messages for potentially harmful content. This type of moderation is faster and more scalable than manual content moderation, but may struggle with understanding context and generate false positives or negatives.

Pros and cons of automated content moderation

Both manual and automated moderation have their place. It’s worth noting that the most effective chat moderation strategy often involves a combination of the two.
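One common way to combine the two is confidence-based triage: an automated classifier acts on clear-cut cases by itself and routes borderline ones to a human review queue. A minimal sketch, where the score is any 0-to-1 harm estimate and both thresholds (`0.9` and `0.6`) are hypothetical values you would tune for your own community.

```javascript
// Hypothetical thresholds; tune them against your own labeled data.
const AUTO_REMOVE_AT = 0.9;
const REVIEW_AT = 0.6;

// Decide what to do with a message given an automated harm score in [0, 1].
function triage(score, { autoRemoveAt = AUTO_REMOVE_AT, reviewAt = REVIEW_AT } = {}) {
  if (score >= autoRemoveAt) return "remove"; // confident: act automatically
  if (score >= reviewAt) return "review"; // uncertain: a human decides
  return "allow";
}

// Holds borderline messages for human moderators.
class ReviewQueue {
  constructor() {
    this.items = [];
  }
  enqueue(message, score) {
    this.items.push({ message, score, queuedAt: Date.now() });
  }
  // Hand out the most likely harmful item first.
  next() {
    this.items.sort((a, b) => b.score - a.score);
    return this.items.shift();
  }
}

function moderate(message, score, queue) {
  const decision = triage(score);
  if (decision === "review") queue.enqueue(message, score);
  return decision;
}

const queue = new ReviewQueue();
console.log(moderate("you're great", 0.05, queue)); // allow
console.log(moderate("borderline joke", 0.7, queue)); // review
console.log(moderate("explicit threat", 0.97, queue)); // remove
console.log(queue.items.length); // 1
```

The automated layer absorbs volume, while human reviewers only see the slice of messages where context matters most.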

How can you implement robust, effective, and scalable moderation for in-app chat? Consider using Sendbird, an in-app communications platform. Sendbird makes it easy to implement chat moderation with built-in moderation features.

So far, we have discussed moderation as it applies to online communities in general, including in-app chat. Now, let’s look at how to implement chat moderation specifically for in-app chat.

Built-in chat moderation with Sendbird

Sendbird is a chat platform that offers built-in moderation features to help manage user behavior and content. Let’s briefly go over the features that make moderation easy with Sendbird.

Message moderation

Message moderation tools let you filter message content and block domains, profanity, and images, which helps prevent inappropriate content from being shared in your community. Image moderation, powered by the Google Cloud Vision API, can be applied automatically, and profanity and domain filters can act on all text messages. You can also choose which moderation action is triggered against users who send profanity to a channel. Customizable moderation settings let you tailor the level of filtering to the specific needs of your platform.
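To make the filter behaviors concrete, here is a small, self-contained illustration of a word blocklist with a "replace" mode (mask matches with asterisks) and a "block" mode (reject the message), plus a domain blocklist. It illustrates the technique only and is not Sendbird’s implementation; Sendbird’s own filters run on its servers according to your moderation settings. The word and domain lists are placeholders.

```javascript
// Placeholder lists; a real deployment would load these from configuration.
const BLOCKED_WORDS = ["darn", "heck"];
const BLOCKED_DOMAINS = ["spam.example", "phish.example"];

// Escape regex metacharacters so list entries are matched literally.
const escapeRegExp = (s) => s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");

// Whole-word, case-insensitive match for any blocked word.
const wordPattern = new RegExp(`\\b(${BLOCKED_WORDS.map(escapeRegExp).join("|")})\\b`, "gi");

// Matches http(s) URLs and captures the host name.
const urlPattern = /https?:\/\/([^\/\s:?#]+)/gi;

function containsBlockedDomain(text) {
  for (const match of text.matchAll(urlPattern)) {
    const host = match[1].toLowerCase();
    // Block the listed domain itself and any of its subdomains.
    if (BLOCKED_DOMAINS.some((d) => host === d || host.endsWith(`.${d}`))) return true;
  }
  return false;
}

// mode "replace" masks blocked words; mode "block" rejects the message outright.
function filterMessage(text, mode = "replace") {
  if (containsBlockedDomain(text)) return { allowed: false, reason: "blocked_domain", text };
  const masked = text.replace(wordPattern, (word) => "*".repeat(word.length));
  if (masked === text) return { allowed: true, text }; // nothing matched
  if (mode === "block") return { allowed: false, reason: "profanity", text };
  return { allowed: true, text: masked };
}

console.log(filterMessage("What the heck?").text); // What the ****?
console.log(filterMessage("What the heck?", "block").allowed); // false
console.log(filterMessage("Win big at https://www.spam.example/offer").reason); // blocked_domain
```

Whole-word matching avoids masking innocent words that merely contain a blocked term, which is one of the common sources of false positives mentioned earlier.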

Sendbird also makes it easy to implement customized content moderation solutions with third-party tools. For example, messages can be run through the Perspective API’s toxicity analyzer, used in conjunction with Google Cloud Platform. To do this, configure Sendbird to send a webhook event whenever a message is sent to a group channel, and set the webhook URL to point to a custom backend implementation, such as a Node.js Express server endpoint.

Assuming you have obtained a Perspective API key, your backend implementation may look like this:
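A minimal sketch, assuming Node.js 18 or later (for the global `fetch`) and a webhook body shaped like Sendbird’s `group_channel:message_send` event, with the text in `payload.message`, the sender in `sender.user_id`, and the channel in `channel.channel_url`; verify these fields against Sendbird’s webhook documentation. To stay dependency-free, it uses Node’s built-in `http` module instead of Express, and `banUser` is passed in as a parameter.

```javascript
const http = require("http");

// Perspective API analyze endpoint; the API key is passed as a query parameter.
const PERSPECTIVE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze";
// Scores range from 0 to 1; anything above this is treated as toxic.
const TOXICITY_THRESHOLD = 0.5;

// Pull the fields we need from a Sendbird webhook body (assumed shape, see above).
function extractMessage(event) {
  if (!event || event.category !== "group_channel:message_send") return null;
  return {
    text: event.payload?.message ?? "",
    userId: event.sender?.user_id,
    channelUrl: event.channel?.channel_url,
  };
}

// Ask Perspective for the TOXICITY attribute only (assumes English text).
function buildPerspectiveRequest(text) {
  return { comment: { text }, languages: ["en"], requestedAttributes: { TOXICITY: {} } };
}

// The summary score lives at attributeScores.TOXICITY.summaryScore.value.
// A missing score defaults to 0, so this check fails open.
function readToxicityScore(response) {
  return response?.attributeScores?.TOXICITY?.summaryScore?.value ?? 0;
}

function shouldBan(score, threshold = TOXICITY_THRESHOLD) {
  return score > threshold;
}

async function analyzeToxicity(text, apiKey) {
  const response = await fetch(`${PERSPECTIVE_URL}?key=${encodeURIComponent(apiKey)}`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildPerspectiveRequest(text)),
  });
  if (!response.ok) throw new Error(`Perspective API error (${response.status})`);
  return readToxicityScore(await response.json());
}

// Returns an unstarted server exposing POST /analyze_message.
// banUser(userId, channelUrl) is injected so any ban implementation can be used.
function createModerationServer({ apiKey, banUser }) {
  return http.createServer((req, res) => {
    if (req.method !== "POST" || req.url !== "/analyze_message") {
      res.writeHead(404).end();
      return;
    }
    let raw = "";
    req.on("data", (chunk) => (raw += chunk));
    req.on("end", async () => {
      // Acknowledge the webhook right away; the analysis continues afterwards.
      res.writeHead(200).end();
      try {
        const message = extractMessage(JSON.parse(raw));
        if (!message || !message.text) return;
        const score = await analyzeToxicity(message.text, apiKey);
        if (shouldBan(score)) await banUser(message.userId, message.channelUrl);
      } catch (err) {
        console.error("Moderation check failed:", err);
      }
    });
  });
}
```

To run it, call `createModerationServer({ apiKey: process.env.PERSPECTIVE_API_KEY, banUser }).listen(3000)` and point the Sendbird webhook URL at the server’s `/analyze_message` path. With Express, the body of the `end` handler would move into an `app.post("/analyze_message", ...)` route.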

In the above code example, the /analyze_message endpoint receives a POST request from the Sendbird webhook call. The message is sent to the Perspective API for toxicity analysis. If the toxicity score, which ranges from 0 to 1, exceeds 0.5, then the banUser function is called.

User moderation

Sendbird’s user moderation features allow you to block, mute, or ban users, or freeze channels, giving you control over who can participate in your community and what they can do. Moderators can ban or mute users in channels, and admins can remove users from channels entirely. Additionally, users can block other users.

User moderation actions can also be performed programmatically with Sendbird’s SDKs. Continuing with our Node.js example from above, we can implement the banUser function as follows:
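A minimal sketch of `banUser` that calls Sendbird’s Platform API ban endpoint for group channels (`POST /v3/group_channels/{channel_url}/ban`) directly with `fetch` (Node.js 18 or later) rather than through an SDK wrapper. The `SENDBIRD_APP_ID` and `SENDBIRD_API_TOKEN` environment variable names are assumptions, and `seconds: -1` is intended to request an indefinite ban; confirm the parameters against the current Platform API reference.

```javascript
// Build the Platform API request that bans a user from a group channel.
function buildBanRequest({ appId, apiToken, userId, channelUrl, seconds = -1, description }) {
  const url =
    `https://api-${appId}.sendbird.com/v3/group_channels/` +
    `${encodeURIComponent(channelUrl)}/ban`;
  return {
    url,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json; charset=utf8",
        "Api-Token": apiToken, // keep the API token on the server only
      },
      // seconds: -1 is assumed to mean an indefinite ban (check the API reference).
      body: JSON.stringify({ user_id: userId, seconds, description }),
    },
  };
}

// Ban the sender of a toxic message; throws if Sendbird rejects the request.
async function banUser(userId, channelUrl, {
  appId = process.env.SENDBIRD_APP_ID,
  apiToken = process.env.SENDBIRD_API_TOKEN,
} = {}) {
  const { url, init } = buildBanRequest({
    appId,
    apiToken,
    userId,
    channelUrl,
    description: "Automatically banned: message exceeded toxicity threshold",
  });
  const response = await fetch(url, init);
  if (!response.ok) {
    throw new Error(`Sendbird ban failed (${response.status}): ${await response.text()}`);
  }
  return response.json();
}
```

Separating request construction from the network call keeps the endpoint and payload easy to inspect and test without contacting Sendbird.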

For more information about implementing chat moderation, check out our detailed tutorial.
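Muting users and freezing channels, also listed above, are available through Sendbird’s Platform API as well. The sketch below builds those requests; the endpoint paths (`POST /v3/group_channels/{channel_url}/mute` and `PUT /v3/group_channels/{channel_url}/freeze`) reflect our reading of the Platform API reference and should be confirmed there, and the helper names are hypothetical.

```javascript
// Shared helper: builds a Platform API request for a group channel sub-resource.
function channelRequest({ appId, apiToken, channelUrl, path, method, body }) {
  return {
    url: `https://api-${appId}.sendbird.com/v3/group_channels/${encodeURIComponent(channelUrl)}/${path}`,
    init: {
      method,
      headers: { "Content-Type": "application/json; charset=utf8", "Api-Token": apiToken },
      body: JSON.stringify(body),
    },
  };
}

// Mute a user in a channel; `seconds` is sent only when provided.
function buildMuteRequest({ appId, apiToken, channelUrl, userId, seconds, description }) {
  const body = { user_id: userId, description };
  if (seconds !== undefined) body.seconds = seconds;
  return channelRequest({ appId, apiToken, channelUrl, path: "mute", method: "POST", body });
}

// Freeze (or, with freeze: false, unfreeze) a channel so regular members cannot post.
function buildFreezeRequest({ appId, apiToken, channelUrl, freeze = true }) {
  return channelRequest({ appId, apiToken, channelUrl, path: "freeze", method: "PUT", body: { freeze } });
}

// Send a request built above and surface API errors.
async function send({ url, init }) {
  const response = await fetch(url, init);
  if (!response.ok) throw new Error(`Sendbird request failed (${response.status})`);
  return response.json();
}
```

For example, `send(buildFreezeRequest({ appId, apiToken, channelUrl }))` would freeze a channel during a wave of abuse, and passing `freeze: false` would lift it.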

User reporting

Sendbird’s reporting feature enables users to report content, users, or channels that violate community guidelines. In this way, community members can play an active role in maintaining a safe environment, while also helping your moderation team identify and address issues more efficiently.

Within your application, you can also build tools that leverage Sendbird’s reporting feature. For example, you may have a customized backend implementation that interacts with Sendbird via its SDK in order to facilitate the reporting of suspicious users. A server endpoint may look like this:

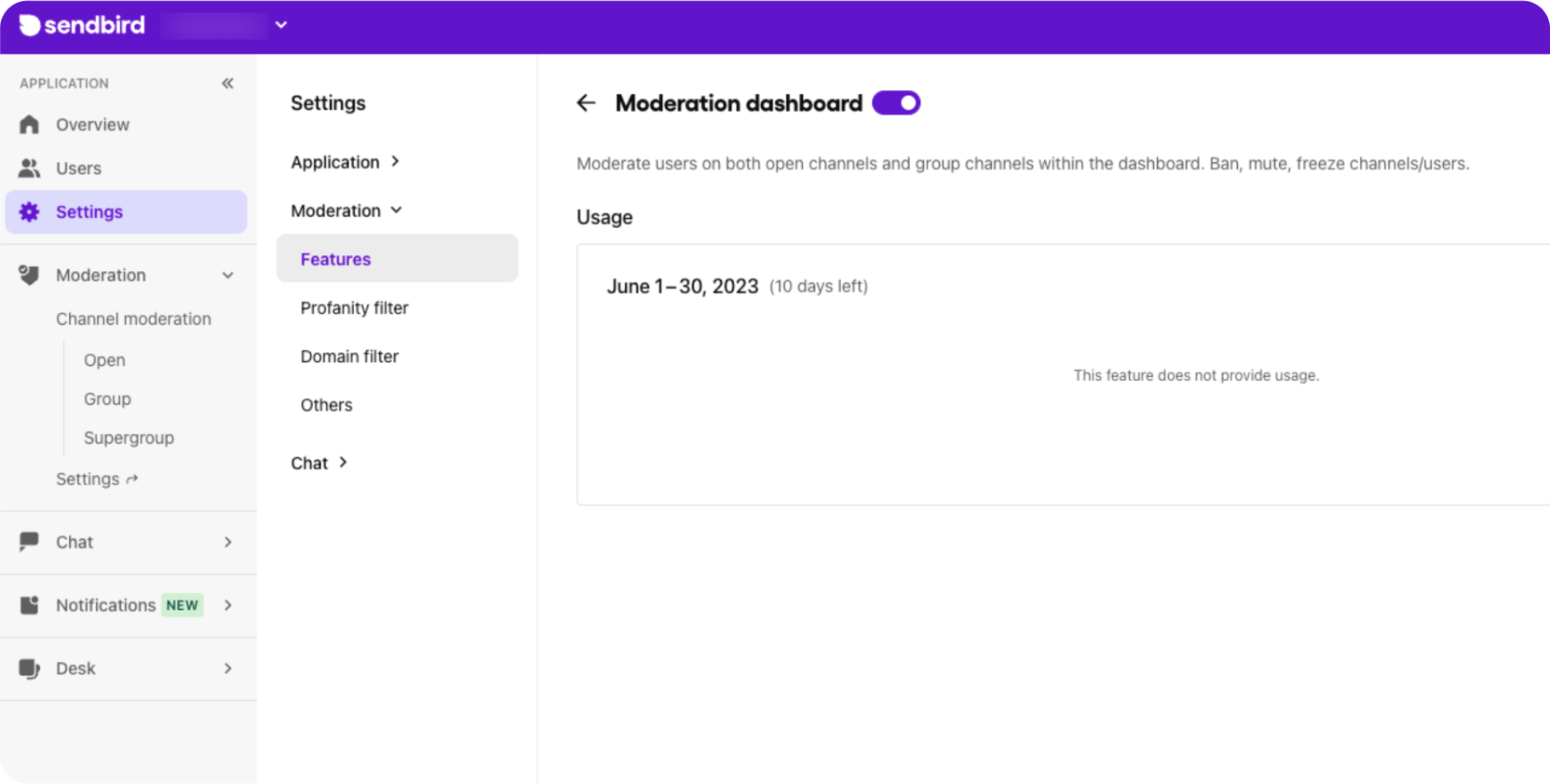
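In text form, a minimal sketch of such an endpoint, assuming Node.js 18 or later and Sendbird’s Platform API endpoint for reporting a user (`POST /v3/report/users/{offending_user_id}`) with `channel_type`, `channel_url`, `report_category`, `reporting_user_id`, and `report_description` fields. The `/report_user` route, the JSON your client sends to it, and the exact set of accepted categories are assumptions.

```javascript
const http = require("http");

// Report categories we assume Sendbird accepts; check the Platform API reference.
const REPORT_CATEGORIES = new Set(["suspicious", "harassing", "inappropriate", "spam"]);

// Build the Platform API request that reports a user from within a group channel.
function buildReportRequest({ appId, apiToken, offendingUserId, reportingUserId, channelUrl, category, description }) {
  if (!offendingUserId) throw new Error("offendingUserId is required");
  if (!REPORT_CATEGORIES.has(category)) throw new Error(`Unknown report category: ${category}`);
  return {
    url: `https://api-${appId}.sendbird.com/v3/report/users/${encodeURIComponent(offendingUserId)}`,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json; charset=utf8", "Api-Token": apiToken },
      body: JSON.stringify({
        channel_type: "group_channels",
        channel_url: channelUrl,
        report_category: category,
        reporting_user_id: reportingUserId,
        report_description: description,
      }),
    },
  };
}

function reply(res, status, payload) {
  res.writeHead(status, { "Content-Type": "application/json" });
  res.end(JSON.stringify(payload));
}

// Hypothetical POST /report_user route. In production, take reportingUserId from
// your authenticated session rather than trusting the request body.
function createReportServer({ appId, apiToken }) {
  return http.createServer((req, res) => {
    if (req.method !== "POST" || req.url !== "/report_user") return reply(res, 404, { error: "not found" });
    let raw = "";
    req.on("data", (chunk) => (raw += chunk));
    req.on("end", async () => {
      let request;
      try {
        const { offendingUserId, reportingUserId, channelUrl, category = "suspicious", description = "" } = JSON.parse(raw);
        request = buildReportRequest({ appId, apiToken, offendingUserId, reportingUserId, channelUrl, category, description });
      } catch (err) {
        return reply(res, 400, { error: err.message }); // malformed JSON or invalid fields
      }
      try {
        const upstream = await fetch(request.url, request.init);
        reply(res, upstream.ok ? 200 : 502, { reported: upstream.ok });
      } catch {
        reply(res, 502, { error: "Sendbird API unreachable" });
      }
    });
  });
}
```

Routing reports through your own server keeps the Sendbird API token off client devices and gives you a place to add rate limiting or logging.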

Moderation dashboard

Sendbird’s moderation dashboard offers a central location for moderation tasks, such as banning and muting users, monitoring users’ chat activity, and configuring moderation settings. This dashboard simplifies the moderation process and provides valuable insights into user behavior and trends within your community.

For many use cases, these built-in features will be sufficient. However, when you need more advanced moderation capabilities, Sendbird can support those as well.

Advanced moderation features

Sendbird's APIs allow you to build custom moderation features and integrate them into your platform. By leveraging the power of the Platform API and webhooks, you can create a moderation system that is tailored to your community's specific needs and requirements.

Trigger automated moderation actions with webhooks

To begin with, you may want to refer to the report, profanity filter, and image moderation webhooks. These webhooks allow you to tap into Sendbird’s built-in moderation systems and extend them to other systems, allowing you to create automated flagging and escalation based on triggered events. This makes it easy to build upon Sendbird’s built-in moderation tools.
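A minimal sketch of routing these events to escalation handlers, assuming each webhook’s `category` field begins with `report:`, `profanity_filter:`, or `image_moderation:` for the three event families, and that profanity events carry `sender.user_id`; confirm the exact category names and payloads in Sendbird’s webhook documentation. The actions, priorities, and three-strike rule are hypothetical.

```javascript
// Hypothetical escalation rules keyed by webhook category prefix.
const ROUTES = [
  { prefix: "report:", action: "open_ticket", priority: "high" },
  { prefix: "image_moderation:", action: "notify_trust_team", priority: "high" },
  { prefix: "profanity_filter:", action: "record_strike", priority: "low" },
];

// Per-user strike counts, kept in memory for this sketch.
const strikes = new Map();
const STRIKE_LIMIT = 3; // hypothetical

function routeModerationEvent(event) {
  const route = ROUTES.find((r) => event.category?.startsWith(r.prefix));
  if (!route) return { action: "ignore" };

  if (route.action === "record_strike") {
    const userId = event.sender?.user_id ?? "unknown";
    const count = (strikes.get(userId) ?? 0) + 1;
    strikes.set(userId, count);
    // Repeat offenders are escalated to a human moderator.
    if (count >= STRIKE_LIMIT) {
      return { action: "escalate_to_moderator", priority: "high", userId, count };
    }
    return { action: route.action, priority: route.priority, userId, count };
  }
  return { action: route.action, priority: route.priority };
}
```

A production version would persist strike counts in a database and hand the returned action to a ticketing or alerting system.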

Build custom moderation dashboards

Next, you can build on top of the Platform API to create custom moderation dashboards tailored to your specific needs. This allows you to streamline your moderation process, improve efficiency, and gain a tailored understanding of your community's dynamics. A custom dashboard can include features like advanced filtering options, user analytics, and real-time monitoring of conversations. Additionally, you can build dashboards that present different views and capabilities to users with different levels of permissions (such as employees or non-employee community moderators).
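As one dashboard building block, the sketch below collects every banned user in a group channel by following the Platform API’s cursor pagination. It assumes the list-banned-users endpoint (`GET /v3/group_channels/{channel_url}/ban`) returns a `banned_list` array plus a `next` token that is empty on the last page, and accepts `limit` and `token` query parameters; `fetchImpl` is a hypothetical injection point so the loop can be tested without network access.

```javascript
// Collect all banned users in a group channel, following `next` tokens page by page.
async function listBannedUsers({ appId, apiToken, channelUrl, limit = 100, fetchImpl = fetch }) {
  const base = `https://api-${appId}.sendbird.com/v3/group_channels/${encodeURIComponent(channelUrl)}/ban`;
  const banned = [];
  let token = "";
  do {
    const params = new URLSearchParams({ limit: String(limit) });
    if (token) params.set("token", token); // omit on the first request
    const response = await fetchImpl(`${base}?${params}`, { headers: { "Api-Token": apiToken } });
    if (!response.ok) throw new Error(`Sendbird request failed (${response.status})`);
    const page = await response.json();
    banned.push(...(page.banned_list ?? []));
    token = page.next ?? ""; // an empty token ends the loop
  } while (token);
  return banned;
}
```

A dashboard backend could call this per channel and then tailor what it returns to the viewer’s permission level, for example hiding ban descriptions from non-employee moderators.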

Service integrations

Finally, for those looking to employ additional automated moderation, Sendbird supports integration with external moderation services. By leveraging advanced machine learning algorithms and AI, these services can further enhance your moderation capabilities. Integrating external services can help improve the accuracy and efficiency of automated moderation, while also reducing the workload on your moderation team. Because this integration is built upon Platform API webhooks, you’re never locked into a specific service. Nearly any third-party content analysis or moderation service can be used.
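Since the webhook handler is your own code, switching services mostly comes down to putting each one behind a common interface. A minimal sketch: each provider is a hypothetical object with a `name` and an async `score(text)` returning a value in [0, 1], and the combined verdict uses the highest score. The keyword-based provider is a stand-in for a real service call.

```javascript
// Any moderation service can be wrapped to fit this shape: { name, score(text) -> Promise<number> }.
function keywordProvider(name, words) {
  return {
    name,
    async score(text) {
      const lower = text.toLowerCase();
      return words.some((w) => lower.includes(w)) ? 1 : 0;
    },
  };
}

// Query every provider in parallel; a failing provider is skipped instead of blocking the verdict.
async function combinedScore(text, providers) {
  const results = await Promise.allSettled(providers.map((p) => p.score(text)));
  const scores = {};
  let max = 0;
  results.forEach((result, i) => {
    if (result.status === "fulfilled") {
      scores[providers[i].name] = result.value;
      max = Math.max(max, result.value);
    }
  });
  return { max, scores };
}
```

Adding or replacing a service then means writing one small adapter, while the thresholds and actions that consume `max` stay unchanged.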

You can implement any of these more advanced features when the need arises, making Sendbird an extensible and flexible platform for your online community.

Chat moderation: An essential part of safe web and in-app communication

Chat moderation is essential for creating safe and engaging online communities. Sendbird offers a comprehensive suite of built-in moderation features, as well as advanced options for those looking to enhance their moderation strategies. By implementing chat moderation with Sendbird's tools, you can ensure that your community remains a positive and welcoming space that encourages its users to stay and engage.

With the right combination of manual and automated moderation techniques, you can create a scalable and efficient system that addresses the unique needs of your community. Whether you're focused on text, image, or voice moderation, Sendbird provides the tools and support necessary to build a safe and thriving online community.

Experience the benefits of effective chat moderation along with a host of other easy-to-use features by getting started with Sendbird today. You can also browse our demos to see Sendbird Chat in action. If you have any questions, please visit the Sendbird community or get in touch with us. We’re always eager to help!

Happy chat moderation! 🖥