Why Llama 3 is Sendbird’s top pick for your AI chatbot

Introduction

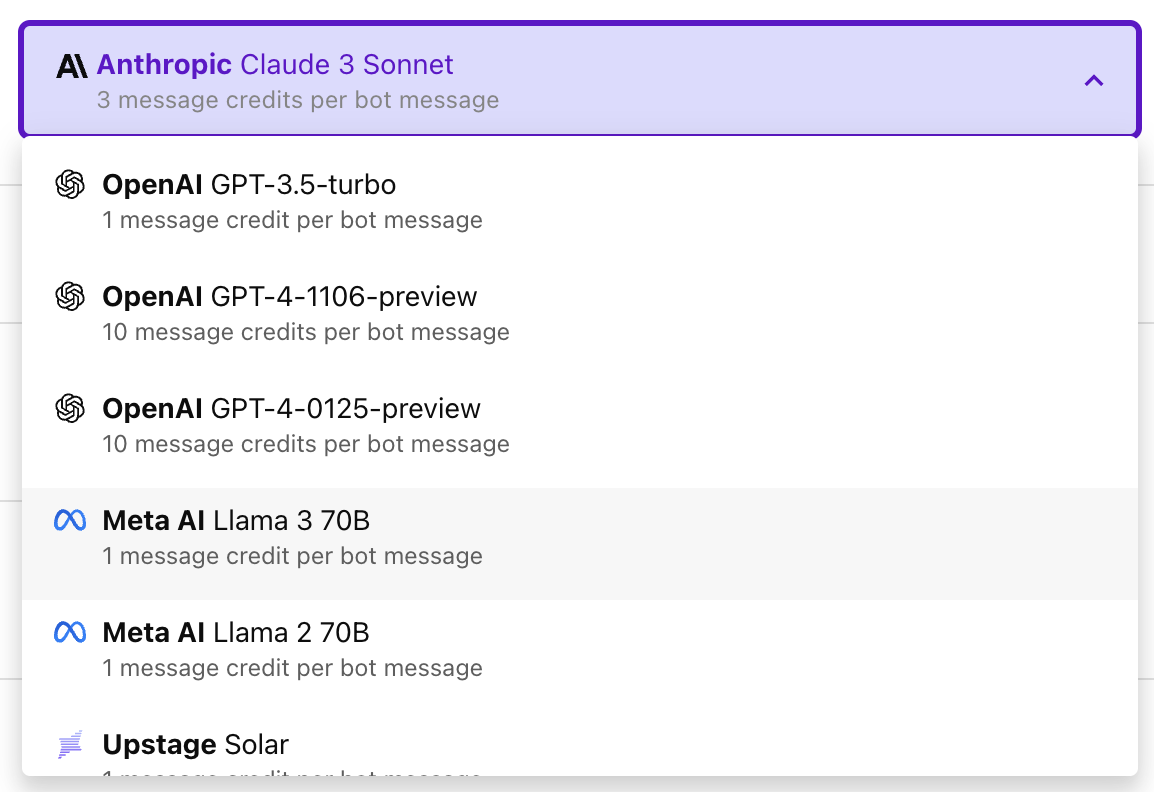

On April 18, 2024, Meta released its latest LLM, Llama 3, just nine months after the launch of Llama 2. This rapid progression has positioned Llama 3 alongside prominent models like GPT-4 and Claude 3, marking significant advances in the capabilities available to AI applications.

What is Llama 3?

Llama 3 belongs to the latest generation of Large Language Models (LLMs), alongside GPT and Claude. These models learn from extensive text data to understand, generate, and interact with human language. The most distinctive feature of Llama 3 is its open-source nature, which makes the model broadly accessible and invites collaboration from developers around the world.

Opening up Llama 3 accelerates innovation because developers anywhere can inspect the model, build on it, and contribute improvements. This openness also promotes transparency, supports an ecosystem of complementary tools and services, and creates new educational opportunities.

The Meta Llama 3 Family

Meta's Llama 3 family comprises four models, each designed to meet specific needs within the AI development community:

Llama 3 8B: A pretrained, general-purpose model with 8 billion parameters, suitable for a wide range of language processing tasks.

Llama 3 8B-Instruct: Optimized for dialogue and user instruction responsiveness, this model enhances safety and helpfulness.

Llama 3 70B: A more powerful pretrained model with 70 billion parameters, ideal for complex language understanding and generation tasks.

Llama 3 70B-Instruct: Designed for high-accuracy applications in sophisticated AI assistant platforms, featuring 70 billion parameters.

Performance of Llama 3

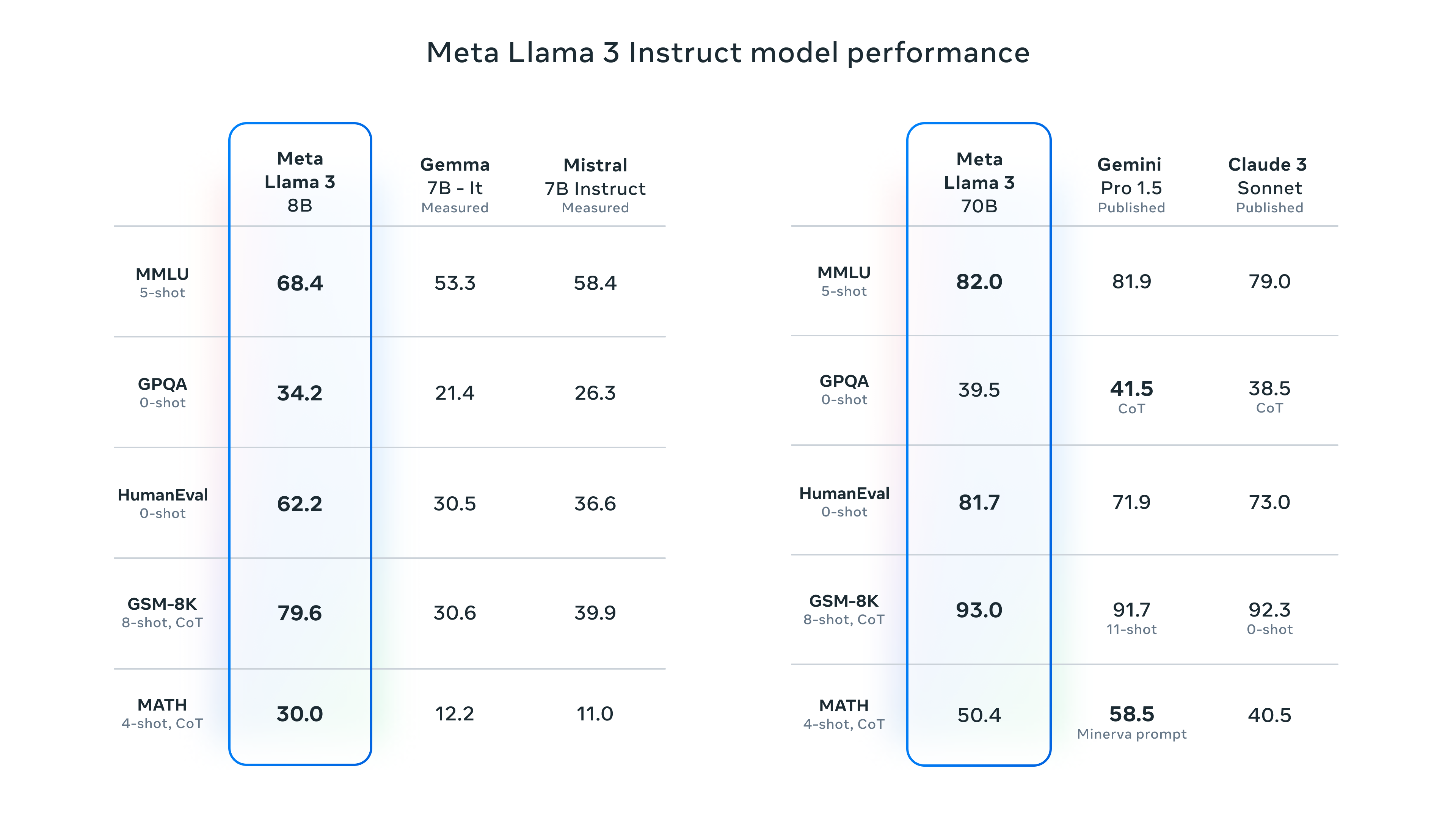

Llama 3 performs strongly across a range of AI benchmarks. The 8B model does well in human evaluations, while the 70B model stands out in complex reasoning and mathematical tasks, surpassing other leading models such as Gemini Pro 1.5 and Claude 3 Sonnet on several of them.

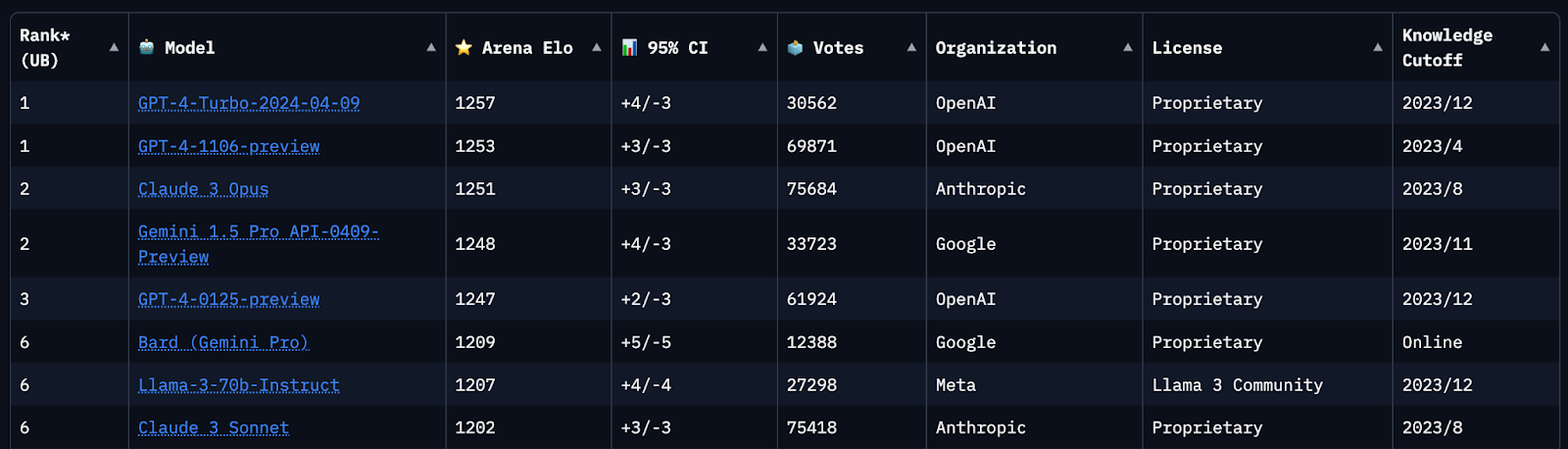

The LMSYS Chatbot Arena uses over 800,000 human comparisons to rank large language models (LLMs) on an Elo scale. At the time of writing, the Llama 3 70B-Instruct model holds the 6th position on this leaderboard, demonstrating its robust performance in real-world conversational settings.
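
To give a feel for what an Elo-scale ranking means, here is a minimal sketch of the standard Elo expected-score formula, which converts a rating gap between two models into the probability that one is preferred over the other in a head-to-head comparison. The function name and the ratings are hypothetical, chosen only for illustration, and the leaderboard's own rating computation may differ in its details.

```python
def expected_win_probability(rating_a: float, rating_b: float) -> float:
    """Probability that model A is preferred over model B under the standard Elo formula."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


if __name__ == "__main__":
    # Hypothetical ratings, used only to illustrate how a rating gap maps to a preference rate.
    baseline = 1200
    for gap in (0, 50, 100, 400):
        p = expected_win_probability(baseline + gap, baseline)
        print(f"rating gap {gap:>3}: model A preferred {p:.1%} of the time")
```

Under this formula, two models with equal ratings are each preferred half the time, and a small rating gap between neighbors on a leaderboard corresponds to only a slight edge in human preference.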

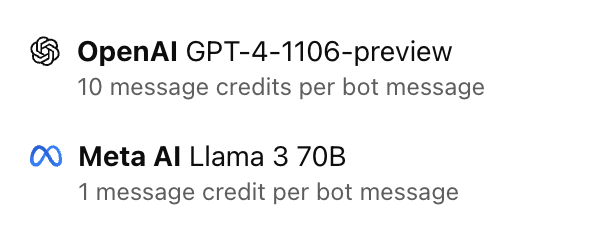

Despite its high performance, Llama 3 is available at a fraction of the cost of comparable models like GPT-4. This price advantage, coupled with its open-source model, democratizes access to cutting-edge AI technologies, making it a financially attractive option for developers and businesses.

In the Sendbird AI chatbot, you can use Llama 3 at just one-tenth the cost of GPT-4 while getting a comparable level of quality, which makes it an economical way to put state-of-the-art AI to work.

Practical Application: Llama 3 as a Knowledge Base Bot

Imagine using Llama 3 with Sendbird's system to apply Retrieval-Augmented Generation (RAG) for a knowledge base bot. This setup allows the bot to utilize information beyond its initial training data, providing updated and relevant responses based on the latest available knowledge.
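
The general RAG pattern can be sketched in a few lines: retrieve the passages most relevant to the user's question, then place them in the prompt so the model answers from that context. The example below is a simplified illustration and not Sendbird's implementation. The function names, the word-overlap scoring, and the sample passages are all assumptions made for this sketch; production systems typically rank passages with embedding-based similarity instead.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(question: str, passage: str) -> int:
    """Count how many question tokens also appear in the passage."""
    q = Counter(tokenize(question))
    p = Counter(tokenize(passage))
    return sum((q & p).values())


def retrieve(question: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k passages ranked by token overlap with the question."""
    ranked = sorted(passages, key=lambda p: overlap_score(question, p), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Assemble a prompt that asks the model to answer only from retrieved context."""
    context = "\n".join(f"- {p}" for p in retrieve(question, passages))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
    )


# Hypothetical passages standing in for chunks of an ingested knowledge base page.
KNOWLEDGE_BASE = [
    "The store opens at 9 am on weekdays.",
    "Refunds are processed within five business days.",
    "Shipping is free for orders over 50 dollars.",
]

if __name__ == "__main__":
    # The resulting prompt would then be sent to the LLM of your choice.
    print(build_prompt("How long do refunds take?", KNOWLEDGE_BASE))
```

Because the answer is grounded in the retrieved passages rather than in the model's training data, updating the knowledge base is enough to change what the bot says.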

Let’s look at a real example:

To evaluate how well Llama 3 70B handles specific information, I conducted an experiment using the Wikipedia page for the 96th Academy Awards. This was the most recent Academy Awards ceremony at the time, so I assumed the model would have no prior knowledge of it and would rely solely on the provided URL for its answers.

The model was asked three questions to assess its ability to utilize the provided information effectively:

What do you offer?

Who was the best actor of the awards?

Summarize the awards in three bullet points.

For this test, I used Sendbird to feed information about the 96th Academy Awards into Llama 3.

Llama 3 effectively used the information from the provided URL to answer the questions. It responded in 46 seconds, showing that it can quickly put specific external knowledge to use. ChatGPT, tested with the same setup, also provided high-quality answers but took 53 seconds to respond. Although the time difference might seem small, in practice it felt noticeably slower and was somewhat frustrating. Once cost is considered alongside performance, the difference becomes quite substantial.

The only no-code, UI-supported solution for using Llama 3 in production

Although Llama 3 can readily provide accurate answers for your needs, you still need it to engage properly with your users. While many other solutions offer a chatbot experience built on LLMs, users now expect production-grade chat experiences similar to those in Snapchat, iMessage, or WhatsApp.

To truly maximize the capabilities of Llama 3 in your service, it's imperative to support it with a modern and elegant UI that includes:

Message cards to display product images

Suggested replies

Message status receipts for sent, delivered, and read messages

Typing indicators

Offline support

Have Llama 3 on your own website within minutes

Sendbird can help you take the next step in creating an AI chatbot. Alongside our rich messaging features, you can integrate Llama 3 into your website effortlessly, without any coding. Knowledge can be added through a straightforward dashboard in just a few clicks. Go try it out yourself: creating an AI chatbot using Llama 3 has never been easier.

Please also check out the official integration of Llama 3 for your Sendbird chatbot projects here!