Comparing video streaming protocols: A comprehensive analysis

Live video streaming for your app: Getting started with video streaming protocols

Streaming data has been a part of our lives at least since the mid-90s when a game of baseball was broadcast live on the internet. Almost three decades later, streaming data has become a part of our daily lives with applications like Twitter Spaces, YouTube Live, Zoom, Google Meet, and Signal. Moving large amounts of audio or video data with minimal latency and high reliability - notably without a loss of quality - is a huge challenge that applications like these have solved.

Today, live streaming is an essential part of building a communication app. Live streaming allows users to get their message across in a rich video and audio format, enhanced by real-time, feature-rich, low latency chat. Fun or useful features like screen sharing, camera filters, and cloud video recording offer levels of engagement - and capture the magic - that traditional digital media cannot. After all, 80% of people would rather watch a livestream than read a blog!

This article will take you through the finer details of seven popular video streaming protocols so you can choose the best one for your application. But first, let’s have a quick refresher on what streaming protocols are.

What are video streaming protocols?

A video streaming protocol is a set of rules or standards that is used to transfer video files over the internet. A video streaming protocol defines the rules and methods used for a video to be broken up into smaller chunks before it’s transmitted and then reassembled into playable content on the other end.

Data moves across the internet in small chunks called packets, each carrying metadata (in its headers) that is used to rebuild the data when it arrives at its destination. The data is transported from one device to another according to communication standards called protocols.

This holds true for live streaming as well, but with an extra challenge thrown in: the packets must be reassembled fast enough to appear as a continuous stream. In other words, audio and video should play without stalls or gaps, and with as little delay as possible.
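To make the packetize-and-reassemble idea concrete, here is a toy sketch. The `Packet` type and both functions are hypothetical illustrations, not part of any real protocol. Real streaming protocols (RTP, for example) carry much richer headers, and live playback also has to cope with packets that never arrive at all.

```python
import random
from dataclasses import dataclass


@dataclass
class Packet:
    seq: int        # sequence number: the metadata used to restore the original order
    payload: bytes  # one chunk of the media data


def packetize(data: bytes, size: int) -> list[Packet]:
    """Split a byte stream into fixed-size chunks tagged with sequence numbers."""
    return [
        Packet(seq=i, payload=data[offset:offset + size])
        for i, offset in enumerate(range(0, len(data), size))
    ]


def reassemble(packets: list[Packet]) -> bytes:
    """Rebuild the original bytes regardless of the order the packets arrived in."""
    return b"".join(p.payload for p in sorted(packets, key=lambda p: p.seq))


# Simulate a network that delivers packets out of order.
frame = bytes(range(256)) * 4          # stand-in for 1,024 bytes of encoded video
packets = packetize(frame, size=100)   # 11 packets: ten of 100 bytes, one of 24
random.shuffle(packets)
assert reassemble(packets) == frame
```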

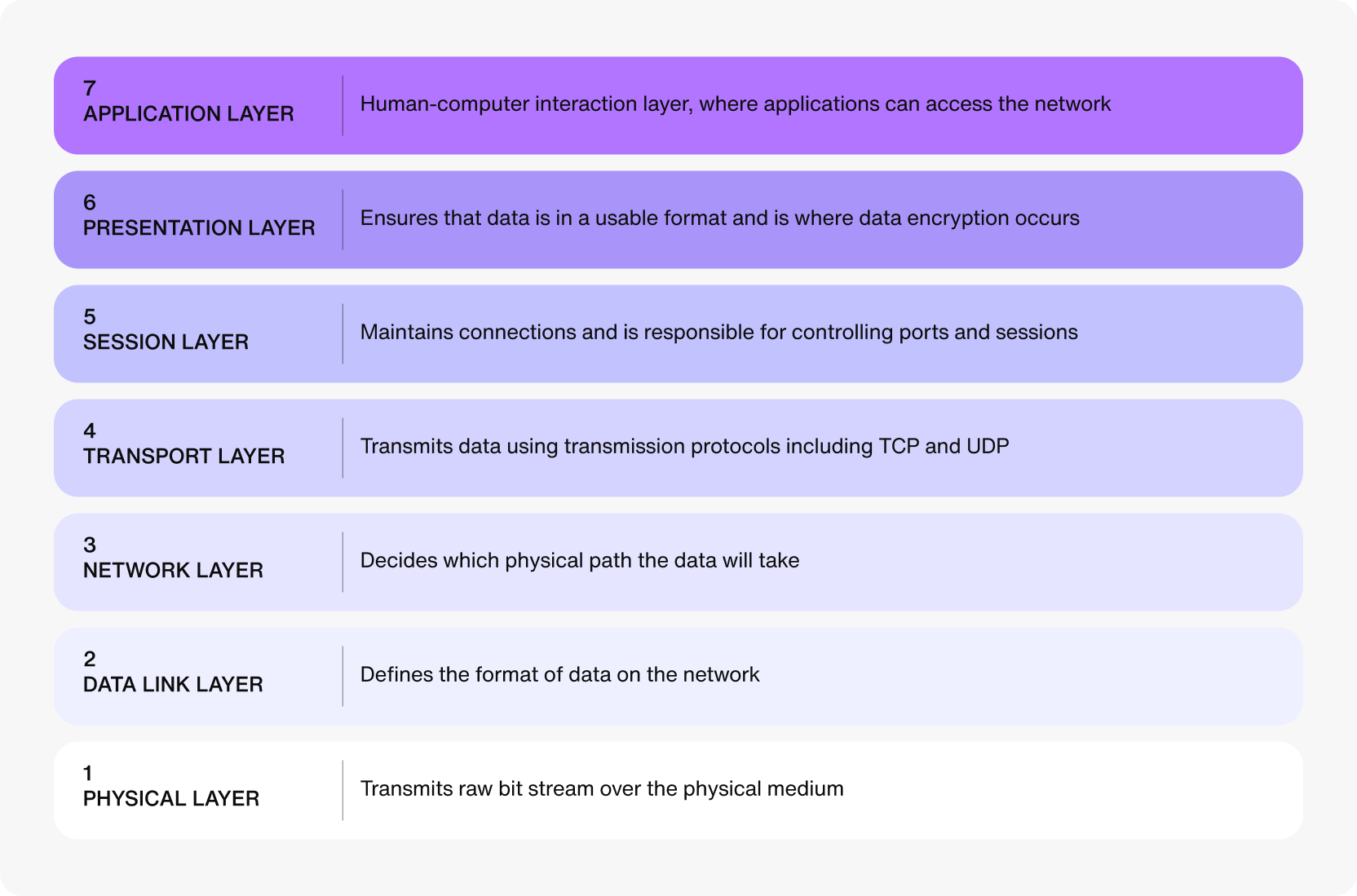

Video streaming protocols handle the challenges of scale, quality, security, and reliability that come with smoothly transporting large amounts of data. They operate in the top three layers of the OSI model: session, presentation, and application.

Video streaming protocols are built on one of the following internet transport protocols or a mix of both:

TCP: A reliable data transport protocol that handles common networking issues such as duplicate packets, lost packets, and packets that are out of order.

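UDP: A lightweight data transport protocol that favors speed over reliability. It doesn’t retransmit lost packets or wait for out-of-order ones, so one late packet never holds up the rest of the stream, which makes it an excellent foundation for streaming protocols.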

TCP and UDP both work on the transport layer of the OSI model, below the session, presentation, and application layers. These protocols aren’t designed to handle video streaming on their own.

How to choose a video streaming protocol: 7 important considerations

There are several considerations to keep in mind as you’re deciding on a video streaming protocol for your application. This comparison analyzes seven areas of concern in particular:

First mile vs. last mile

Playback capabilities

Transcoding and codec support

Scalability

QoS and QoE

Latency

Security

First mile vs. last mile

Users around the world can stream videos at the same time, which means your application needs a smart and robust infrastructure that minimizes the load on the network. The first mile of your video stream delivery is getting the stream from its source into your infrastructure with the encoding and formatting right. The last mile is handing off that prepared stream to delivery infrastructure in a geographical area close to your end users, and from there to their devices. The video streaming protocol you choose will most likely be stronger on one side of the equation than the other.

Playback capabilities

With so many operating systems and video playback applications out there, you can’t forget about your end users’ devices. Consider how you’ll support essential playback functions like playing from where a user left off, playback speed controls, and audio processing capabilities.

Transcoding and codec support

Streaming raw video isn’t a great idea: it consumes far too much network bandwidth, and most end-user devices can’t handle it. Lossless audio is a different story. Although it bloats file sizes, most media devices can still handle it.
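A quick back-of-the-envelope calculation shows why video and audio are treated so differently. The sketch assumes 8-bit RGB video with no chroma subsampling and CD-quality PCM audio (44.1 kHz, 16-bit, stereo). Real lossless formats such as FLAC compress further, so the audio figure is an upper bound.

```python
def raw_video_bps(width: int, height: int, fps: float, bits_per_pixel: int = 24) -> float:
    """Uncompressed video bitrate: every pixel of every frame is sent as-is."""
    return width * height * fps * bits_per_pixel


def pcm_audio_bps(sample_rate: int = 44_100, bit_depth: int = 16, channels: int = 2) -> int:
    """Uncompressed (PCM) audio bitrate; the defaults are CD-quality stereo."""
    return sample_rate * bit_depth * channels


video = raw_video_bps(1920, 1080, 30)   # 1920 * 1080 * 30 * 24 = 1,492,992,000 bit/s
audio = pcm_audio_bps()                 # 44100 * 16 * 2 = 1,411,200 bit/s
print(f"raw 1080p30 video: {video / 1e9:.2f} Gbit/s")   # prints 1.49 Gbit/s
print(f"CD-quality audio:  {audio / 1e6:.2f} Mbit/s")   # prints 1.41 Mbit/s
```

Raw 1080p video needs roughly a thousand times the bandwidth of lossless stereo audio, which is why video must be compressed before delivery while lossless audio remains practical.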

For large-scale and widespread video streaming consumption, you need a protocol that supports transcoding into various formats and bitrates. Netflix, for instance, encodes all its content into many renditions using different encoders and settings, and then chooses which one best suits each viewer based on their device and network conditions.
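As a rough illustration of producing several renditions from one source, the sketch below builds one ffmpeg command per rung of a bitrate ladder. The ladder values, output file names, and the choice of H.264 video with AAC audio are assumptions for illustration, not Netflix’s actual pipeline. The code only builds the argument lists; it doesn’t run ffmpeg.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    height: int            # output height in pixels; width follows the aspect ratio
    video_kbps: int
    audio_kbps: int = 128


# Hypothetical ladder; real services tune these per title and per codec.
LADDER = [Rendition(1080, 5000), Rendition(720, 3000),
          Rendition(480, 1200), Rendition(360, 700, 96)]


def ffmpeg_args(src: str, r: Rendition) -> list[str]:
    """Build an ffmpeg argument list that encodes one rendition with H.264 + AAC."""
    return [
        "ffmpeg", "-i", src,
        "-vf", f"scale=-2:{r.height}",   # -2 keeps the aspect ratio with an even width
        "-c:v", "libx264", "-b:v", f"{r.video_kbps}k",
        "-c:a", "aac", "-b:a", f"{r.audio_kbps}k",
        f"out_{r.height}p.mp4",
    ]


def ladder_for(src: str, source_height: int) -> list[list[str]]:
    """Skip renditions taller than the source; upscaling only wastes bits."""
    return [ffmpeg_args(src, r) for r in LADDER if r.height <= source_height]


for cmd in ladder_for("input.mp4", source_height=720):
    print(" ".join(cmd))   # to encode, pass cmd to subprocess.run(cmd, check=True)
```

A player (or the streaming protocol’s manifest) then picks among these renditions per viewer.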

QoS and QoE

Quality of Service (QoS) and Quality of Experience (QoE) are directly related, but they measure different things at different ends of the delivery spectrum.

QoS tells you about the technical aspects of the quality of the video streaming service. It monitors CDN metrics such as latency, throughput, and cache hit ratio. QoE tells you how an end user perceives that service in terms of playback experience, audio and video quality, startup time, buffering, and visible encoding artifacts.
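Two common QoE numbers, startup time and rebuffering ratio, can be derived from a player’s event log. The sketch below assumes a hypothetical log format with the event names `first_frame`, `stall_start`, `stall_end`, and `ended`; real players and analytics SDKs use their own event schemas.

```python
from dataclasses import dataclass


@dataclass
class PlayerEvent:
    t: float    # seconds since the viewer pressed play
    kind: str   # hypothetical names: "first_frame", "stall_start", "stall_end", "ended"


def qoe_metrics(events: list[PlayerEvent]) -> dict[str, float]:
    """Compute startup time and rebuffering ratio for one playback session.

    Assumes the log contains a "first_frame" and an "ended" event.
    """
    startup = next(e.t for e in events if e.kind == "first_frame")
    end = next(e.t for e in events if e.kind == "ended")
    stalled, stall_start = 0.0, None
    for e in events:
        if e.kind == "stall_start":
            stall_start = e.t
        elif e.kind == "stall_end" and stall_start is not None:
            stalled += e.t - stall_start
            stall_start = None
    session_time = end - startup   # wall-clock time after the first frame, stalls included
    return {
        "startup_time_s": startup,
        "rebuffer_ratio": stalled / session_time if session_time > 0 else 0.0,
    }


session = [PlayerEvent(1.5, "first_frame"), PlayerEvent(30.0, "stall_start"),
           PlayerEvent(33.0, "stall_end"), PlayerEvent(61.5, "ended")]
print(qoe_metrics(session))   # 1.5 s to first frame; 3 s stalled out of 60 s
```

QoS metrics such as cache hit ratio come from the CDN side instead; correlating the two is how teams trace a bad viewer experience back to a network cause.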

Scalability

Video streaming can be very resource-intensive in terms of storage, processing, and network. For example, if your users’ devices can’t store video themselves and you have to store it for them, you need cost-effective storage and a network infrastructure that lets you place the videos as close to the user as possible. Your ability to scale directly affects the QoS and QoE of the application (in other words, the experience that you’ve promised to the end user).
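A little arithmetic shows why placing video close to users matters at scale. The viewer count, bitrates, and number of edge locations below are hypothetical. The model also ignores cache misses, protocol overhead, and ABR switching, so treat it as an order-of-magnitude sketch only.

```python
def total_egress_gbps(viewers: int, avg_kbps: float) -> float:
    """Bandwidth needed if every viewer pulls a separate copy of the stream."""
    return viewers * avg_kbps / 1_000_000


def origin_egress_gbps(edges: int, ladder_kbps: list[int]) -> float:
    """Origin load if each edge location fetches every rendition once and fans out locally."""
    return edges * sum(ladder_kbps) / 1_000_000


# 100,000 concurrent viewers averaging 3 Mbit/s each: 300 Gbit/s leaving the edges.
print(total_egress_gbps(100_000, 3_000))
# 50 edge locations caching a four-rung ladder: under 0.5 Gbit/s leaving the origin.
print(origin_egress_gbps(50, [5_000, 3_000, 1_200, 700]))
```

The gap between the two numbers is the work a CDN absorbs; without edge caching, the origin would have to serve the full 300 Gbit/s itself.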

Latency

Another thing that impacts QoE is latency. Low-latency video streaming is essential for gaming, sports betting, auctions, and security feeds, just to name a few content types. CDNs enable applications to have low latencies for video streaming and delivery, but different protocols handle latency differently.
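For segment-based HTTP protocols such as HLS, much of the delay comes from how far behind the live edge the player starts. The rough model below assumes the HLS guidance that a client should not start playback less than three target durations from the end of a live playlist. The `pipeline_overhead_s` default (capture, encoding, upload, and CDN time lumped together) is a made-up placeholder, not a measurement.

```python
def segmented_live_latency_s(target_duration_s: float, segments_behind: int = 3,
                             pipeline_overhead_s: float = 2.0) -> float:
    """Rough glass-to-glass latency estimate for segment-based HTTP live streaming.

    The player begins `segments_behind` segment durations back from the live edge;
    everything else in the delivery pipeline is folded into `pipeline_overhead_s`.
    """
    return segments_behind * target_duration_s + pipeline_overhead_s


for seg in (10, 6, 2):
    print(f"{seg:>2} s segments -> roughly {segmented_live_latency_s(seg):.0f} s behind live")
```

Shrinking segments (or splitting them into smaller parts, as low-latency variants do) cuts latency, while protocols that send media continuously rather than in segments, such as WebRTC, avoid this buffer-driven delay altogether.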

Security

There are many streaming use cases where security should be the top priority. Think of live meetings, private webcasts, surveillance, and more. You should look for a protocol that handles stream security out-of-the-box or at least enables you to quickly implement security-related solutions on top of the protocol.

Comparing video streaming protocols

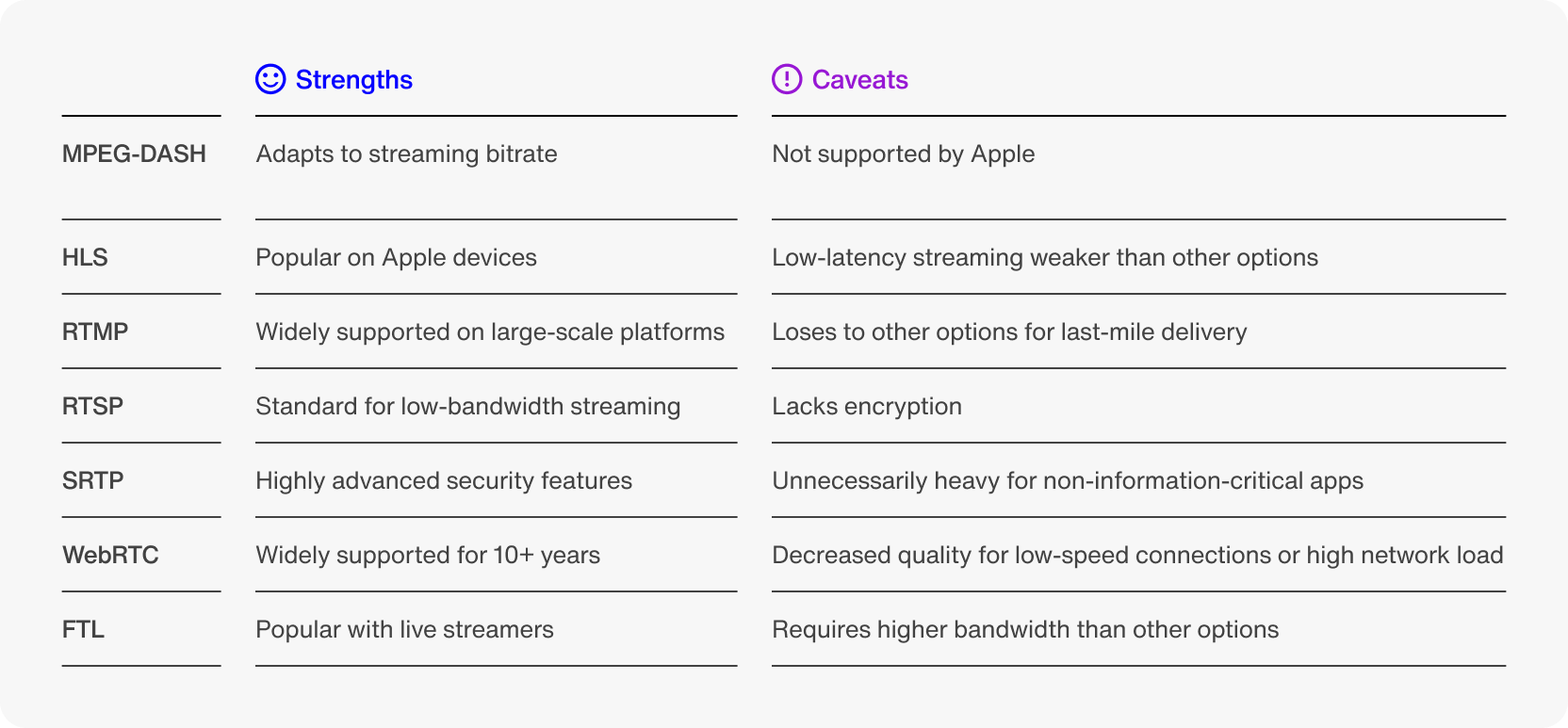

Now that you know what to look for in a video streaming protocol, and as you keep your own application needs in mind, let’s take a close look at seven of the most popular streaming protocols today: MPEG-DASH, HLS, RTMP, RTSP, SRTP, WebRTC, and FTL.

MPEG-DASH

One of the most popular video streaming protocols, MPEG-DASH (Dynamic Adaptive Streaming over HTTP), is used extensively by Netflix, Samsung, Google, and Adobe, among others. This international standard (ISO/IEC 23009-1) was developed as a vendor-neutral alternative to proprietary HTTP streaming formats such as Apple’s HLS (HTTP Live Streaming), partly to remove constraints such as high latency. Although MPEG-DASH isn’t natively supported in the Apple ecosystem, it is codec-agnostic and works with many applications and codecs.

MPEG-DASH breaks your streaming video into small segments and encodes them at several quality levels to suit different end-user devices and network conditions. Backed by ABR (adaptive bitrate) streaming, this approach lets the player automatically switch to a lower- or higher-quality version as network conditions change.
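The switching decision described above can be sketched as a simple throughput rule. Production DASH players (dash.js, for example) combine throughput with buffer-based rules, so this is only the simplest piece of the idea. The bitrate ladder and the 0.8 safety factor are assumptions.

```python
def pick_bitrate(available_kbps: list[int], measured_throughput_kbps: float,
                 safety_factor: float = 0.8) -> int:
    """Throughput-based ABR: choose the highest rendition that fits the measured bandwidth.

    Only a fraction (`safety_factor`) of the measured throughput is budgeted, leaving
    headroom for fluctuations. If nothing fits, fall back to the lowest rendition.
    """
    budget = measured_throughput_kbps * safety_factor
    fitting = [b for b in sorted(available_kbps) if b <= budget]
    return fitting[-1] if fitting else min(available_kbps)


ladder = [700, 1200, 3000, 5000]   # hypothetical Representation bandwidths, in kbit/s
print(pick_bitrate(ladder, 4500))  # budget 3600 kbit/s -> 3000
print(pick_bitrate(ladder, 500))   # nothing fits -> lowest rendition, 700
```

A player would rerun a rule like this before each segment request, which is what lets quality rise and fall with the viewer’s connection.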

A big practical advantage of MPEG-DASH is that it’s widely supported across non-Apple devices and browsers. It’s also an open international standard, whereas HLS is specified and controlled by Apple, even though HLS now plays well beyond Apple devices.

HLS (HTTP Live Streaming)

Like MPEG-DASH, HLS is an HTTP-based live streaming and video delivery protocol that uses versions of a video at different bitrates to serve end users with various devices and network conditions. Apple introduced it in 2009 with iPhone OS 3 so it could move away from QuickTime-based streaming and solve streaming issues on the iPhone.

HLS breaks video streams into short segments (Apple originally recommended ten seconds; its current authoring guidance is six), which are stored on an HTTP server and delivered to the end user. The segments traditionally use the MPEG-2 Transport Stream container (.ts files), and newer versions of HLS also support fragmented MP4.
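Alongside the segments, the HTTP server hosts a playlist (.m3u8) that tells the player which segments exist and how long each one lasts. Here is a minimal sketch that generates a simple media playlist; the segment file names are hypothetical, and multivariant playlists, encryption (`EXT-X-KEY`), and low-latency tags are out of scope.

```python
import math


def media_playlist(segments: list[tuple[str, float]], ended: bool = True) -> str:
    """Build a minimal HLS media playlist from (uri, duration_in_seconds) pairs."""
    # Each segment's duration, rounded to the nearest integer, must not exceed
    # EXT-X-TARGETDURATION; rounding up with ceil satisfies that conservatively.
    target = max(math.ceil(duration) for _, duration in segments)
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",                # version 3 allows decimal EXTINF durations
        f"#EXT-X-TARGETDURATION:{target}",
        "#EXT-X-MEDIA-SEQUENCE:0",
    ]
    for uri, duration in segments:
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(uri)
    if ended:
        lines.append("#EXT-X-ENDLIST")     # left out while a live stream is still running
    return "\n".join(lines) + "\n"


print(media_playlist([("segment0.ts", 10.0), ("segment1.ts", 10.0), ("segment2.ts", 4.2)]))
```

For a live stream, the server keeps appending new segments (and advancing the media sequence number) without writing `#EXT-X-ENDLIST`, and players re-fetch the playlist to discover them.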

Originally, HLS lacked a low-latency mode like MPEG-DASH’s. Apple addressed this with Low-Latency HLS, introduced in 2019 and included in the second edition of the HTTP Live Streaming specification. HLS also supports video encryption with algorithms such as AES-128.

Compared to MPEG-DASH, HLS wins on Apple devices, where it’s natively supported. Elsewhere, MPEG-DASH’s low-latency option may still look more attractive, largely because many of the products with the largest scale already use MPEG-DASH.

RTMP

Both HLS and MPEG-DASH are often compared to RTMP (Real-Time Messaging Protocol), a protocol created over two decades ago by Macromedia, which Adobe later acquired. Adobe no longer actively develops it, but RTMP is still the king of first-mile delivery: it’s widely supported, and it has stood the test of time.

At one point, RTMP powered most of the internet’s video, and large-scale video streaming platforms like YouTube still support it extensively. However, HLS and MPEG-DASH beat it for last-mile delivery, and open protocols like WebRTC and SRT might eventually give RTMP more competition for first-mile.
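Under the hood, an RTMP session starts with a fixed handshake over TCP (port 1935 by default). The sketch below builds the client’s first message, C0 plus C1, following Adobe’s published RTMP specification. A complete client would then read the server’s S0/S1/S2, send C2, and move on to chunked commands such as `connect` and `publish`; none of that is shown here.

```python
import os
import struct

RTMP_VERSION = 3        # C0 is a single byte holding the protocol version
HANDSHAKE_SIZE = 1536   # C1 (and S1, C2, S2) are each 1536 bytes long


def build_c0_c1(timestamp: int = 0) -> bytes:
    """Build the client's opening RTMP handshake bytes: C0 followed by C1."""
    c0 = bytes([RTMP_VERSION])
    # C1 layout: 4-byte big-endian timestamp, 4 zero bytes, then 1528 random bytes.
    c1 = struct.pack(">II", timestamp & 0xFFFFFFFF, 0) + os.urandom(HANDSHAKE_SIZE - 8)
    return c0 + c1


handshake = build_c0_c1()
print(len(handshake))   # 1537 bytes: 1 for C0 + 1536 for C1
```

The simplicity of this opening exchange, and how long encoders have implemented it, is part of why RTMP remains the default ingest option in so much broadcasting software.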

RTSP

RTSP (Real Time Streaming Protocol) is another protocol from the old guard. It has become a standard for low-bandwidth video streaming. RTSP’s control features let a client pause, fast-forward, slow down, and rewind the stream, which is handy for RTSP’s prime applications: CCTV and other surveillance camera setups.

Note that RTSP doesn’t carry the video stream itself. The media travels over RTP, while RTSP sets up and controls the session between clients and servers. RTSP also lacks built-in encryption, which limits where it’s used, but you can run RTSP over SSL/TLS and protect the media with SRTP.
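RTSP requests look a lot like HTTP: a request line, a `CSeq` sequence header, other headers, and a blank line. A typical session runs OPTIONS, DESCRIBE, SETUP, and then PLAY/PAUSE, and finally TEARDOWN. The helper below is a hypothetical sketch that only formats RTSP/1.0 requests. The camera URL and session ID are made up; in practice, the session ID comes from the server’s SETUP response.

```python
from typing import Optional


def rtsp_request(method: str, url: str, cseq: int,
                 headers: Optional[dict[str, str]] = None) -> str:
    """Format an RTSP/1.0 request: request line, CSeq, optional headers, blank line."""
    lines = [f"{method} {url} RTSP/1.0", f"CSeq: {cseq}"]
    for name, value in (headers or {}).items():
        lines.append(f"{name}: {value}")
    return "\r\n".join(lines) + "\r\n\r\n"


url = "rtsp://camera.example/stream1"   # hypothetical camera URL
print(rtsp_request("PLAY", url, 4, {"Session": "12345678", "Range": "npt=0-"}))
print(rtsp_request("PAUSE", url, 5, {"Session": "12345678"}))
```

The `Range` header (here “from the start, open-ended”) and the related `Scale` header are how RTSP expresses seeking and fast-forward or rewind, while the video itself keeps flowing separately over RTP.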

SRTP

SRTP (Secure Real-time Transport Protocol) is an open-standard security profile for RTP, defined in RFC 3711, that encrypts media payloads, by default with AES using 128-bit keys. That means only parties holding the session keys can decrypt a stream sent over SRTP. Another layer of security comes from message authentication with HMAC-SHA1, which ensures that the video stream hasn’t been tampered with in transit.

Because of these highly advanced security features, SRTP is popular with information-critical applications, such as news broadcasting and live events.

To support QoS and QoE, SRTP-protected streams rely on the underlying RTP/RTCP stack for error correction, retransmission, and bitrate adaptation to handle lost packets and low-bandwidth situations; SRTP itself adds encryption, message authentication, and replay protection. SRTP typically runs over UDP rather than TCP.
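SRTP’s integrity check can be illustrated with Python’s standard library. This sketch follows SRTP’s default authentication transform: HMAC-SHA1 over the packet plus a 32-bit rollover counter, truncated to 80 bits. It deliberately omits key derivation and AES encryption, and the key and packet bytes are made-up placeholders. In practice, use a vetted implementation such as libsrtp rather than hand-rolled code.

```python
import hashlib
import hmac

AUTH_TAG_LEN = 10   # HMAC-SHA1 output truncated to 80 bits, SRTP's default tag length


def srtp_auth_tag(auth_key: bytes, packet: bytes, roc: int) -> bytes:
    """Tag = HMAC-SHA1(auth_key, RTP header + encrypted payload + ROC), truncated.

    `roc` is the rollover counter that extends RTP's 16-bit sequence number.
    """
    mac = hmac.new(auth_key, packet + roc.to_bytes(4, "big"), hashlib.sha1)
    return mac.digest()[:AUTH_TAG_LEN]


def verify(auth_key: bytes, packet: bytes, roc: int, tag: bytes) -> bool:
    """Receiver side: recompute the tag and compare it in constant time."""
    return hmac.compare_digest(srtp_auth_tag(auth_key, packet, roc), tag)


key = bytes(20)   # placeholder 160-bit session authentication key
header = bytes.fromhex("80600001" "00000000" "deadbeef")   # toy 12-byte RTP header
pkt = header + b"...encrypted payload..."
tag = srtp_auth_tag(key, pkt, roc=0)
print(verify(key, pkt, 0, tag))                # True
print(verify(key, pkt[:-1] + b"!", 0, tag))    # False: the altered packet is rejected
```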

WebRTC

The WebRTC (Web Real-Time Communication) protocol allows real-time communication directly between two or more end-user devices. An open standard for secure real-time video streaming, WebRTC uses SRTP under the hood. Unlike many protocols that stick to TCP or UDP, WebRTC can use both: call setup and teardown go through a signaling channel that usually runs over TCP (for example, WebSockets), while media normally flows over UDP, encrypted with SRTP using keys negotiated via DTLS. TCP also serves as a fallback when UDP is blocked.
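During that setup, WebRTC peers exchange SDP offers and answers, and each `m=` line announces one media section and its transport. The sketch below parses those lines from a trimmed, hypothetical offer. A protocol string of `UDP/TLS/RTP/SAVPF` means secure RTP keyed via DTLS over UDP, and `UDP/DTLS/SCTP` marks a data channel. A real application would rarely parse SDP by hand.

```python
from dataclasses import dataclass


@dataclass
class MediaLine:
    media: str           # "audio", "video", or "application" (data channels)
    port: int
    proto: str           # transport profile, e.g. "UDP/TLS/RTP/SAVPF"
    formats: list[str]   # RTP payload type numbers, or "webrtc-datachannel"


def media_lines(sdp: str) -> list[MediaLine]:
    """Extract the m= lines from an SDP offer or answer."""
    out = []
    for line in sdp.splitlines():
        if line.startswith("m="):
            media, port, proto, *formats = line[2:].split()
            out.append(MediaLine(media, int(port), proto, formats))
    return out


offer = """v=0
o=- 4611731400430051336 2 IN IP4 127.0.0.1
s=-
t=0 0
m=audio 9 UDP/TLS/RTP/SAVPF 111
a=rtpmap:111 opus/48000/2
m=video 9 UDP/TLS/RTP/SAVPF 96
a=rtpmap:96 VP8/90000
m=application 9 UDP/DTLS/SCTP webrtc-datachannel
"""
for m in media_lines(offer):
    print(m.media, m.proto, m.formats)
```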

This protocol has been around for over a decade. It was initially open-sourced by Google in 2011. Most popular video conferencing and calling applications like Google Meet, Zoom, Snapchat, Houseparty, Facebook Messenger, and GoToMeeting have used (and some still use) WebRTC as the backbone for their product. Sendbird Live, which is built with WebRTC, features sub-second latency video technology that enables interactivity for true real-time live events such as auctions, concerts, flash sales, sports events, and more. Because of its wide adoption, WebRTC enjoys widespread browser support across operating systems and platforms.

Compared to its contemporaries, WebRTC is in a category of its own. HLS is mainly used for one-to-many delivery of on-demand and live video; RTMP can handle both live streaming and on-demand video but lacks built-in encryption; RTSP is mainly used for low-bandwidth use cases, such as CCTV cameras. WebRTC, by contrast, is designed for bidirectional, interactive traffic.

FTL

To push latency below what protocols like RTMP and HLS deliver, the streaming platform Beam (later Microsoft’s Mixer) created an ultra-low-latency streaming protocol called FTL (Faster Than Light), which was supported by OBS (Open Broadcaster Software), the open-source broadcasting app. It’s the newest addition to this list of popular video streaming protocols.

By the time FTL arrived, the other protocols already had an established share of the market, with tens of thousands of applications using them. Partly for that reason, and partly because FTL requires more bandwidth than other protocols, adoption of FTL has been slow.

Still, FTL found a following among live streamers who broadcast to Mixer from OBS. Major platforms such as Twitch, YouTube, and Vimeo rely on RTMP ingest instead, and FTL lost its main home when Mixer shut down in 2020. FTL, like RTMP, doesn’t have out-of-the-box encryption features, but it supports traffic over SSL/TLS.

Other video streaming protocols

We’ve covered the popular video streaming protocols; here are two more that are less common today but packed a punch in their day.

First, Adobe HDS (HTTP Dynamic Streaming) was Adobe’s HTTP-based adaptive streaming protocol for Adobe Flash applications. Since Flash reached its end of life at the end of 2020, HDS is effectively no longer used.

Microsoft Smooth Streaming has a similar story. With Silverlight’s end-of-life in late 2021, Smooth Streaming also went away. In both these cases, the most common replacements are HLS and MPEG-DASH.

Which video streaming protocol should you use?

Surveillance, live music, on-demand video, video conferencing…all of these video streaming use cases have their own priorities for delivering content to end users. Choosing the right protocol for your application requires you to have a solid understanding of the needs at both the server end and the client end. What do you and your users require when it comes to first-mile and last-mile delivery, playback capabilities, browser support, security, latency, low-bandwidth performance, scalability, quality of service, and quality of experience? Weigh your streaming protocol options based on these considerations in particular.

If you’re building a live video streaming app, try Sendbird Live, a last-mile sub-second video delivery solution for web and mobile applications. Sendbird Live uses RTMP for ingestion and WebRTC to deliver your content globally to up to 100,000 concurrent viewers. It boasts 6 AWS regions and sub-second WebRTC latency of around 100 ms. Sendbird Live allows you to build your own custom live video streaming experiences for hosting real-time interactive chat and video streams on your own website and mobile apps. With Sendbird Live, you own your data and do not have to rely on third-party platforms. You can start building live video streaming with the Sendbird Live SDKs and UIKits for iOS, Android, and JavaScript! For in-depth guidance, see the docs!

If you have questions about Sendbird Live, check out the Sendbird Community or contact us! Our experts are always ready to help.

Happy live video streaming! 💻